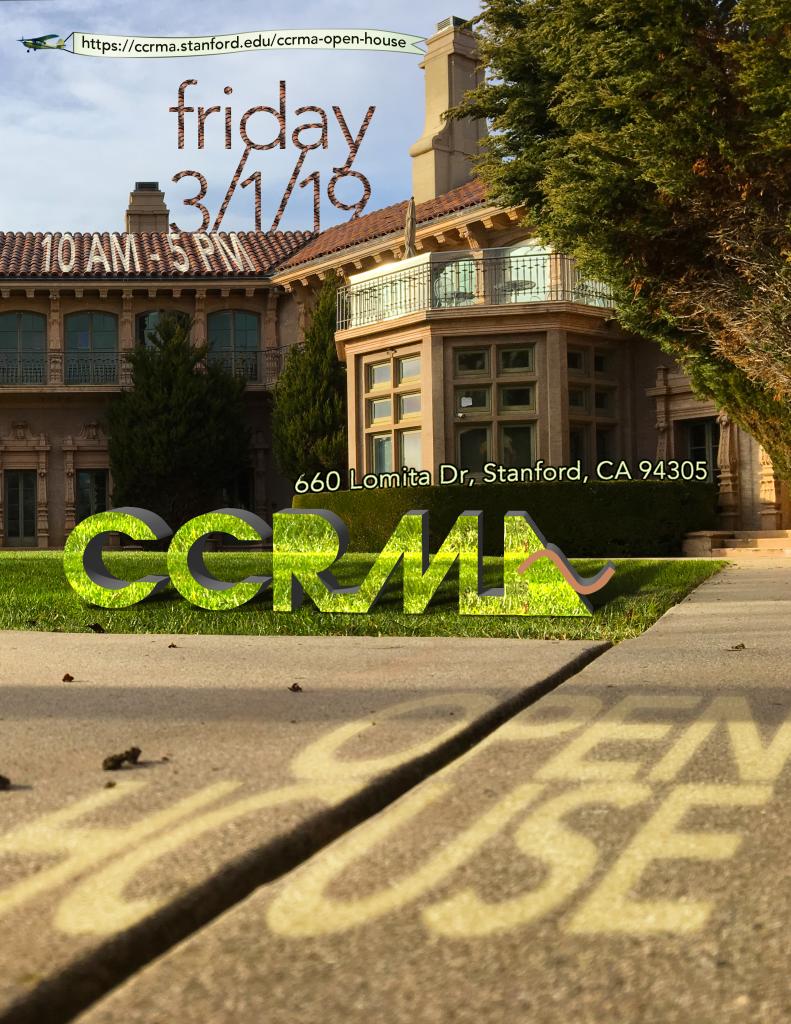

CCRMA Open House 2019

Poster by Constantin Basica and Simona Fitcal

On Friday March 1, 2019, we invite you to come see what we've been doing up at the Knoll!

Join us for lectures, hands-on demonstrations, posters, installations, and musical performances of recent CCRMA research including digital signal processing, data-driven research in music cognition, and a musical instrument petting zoo.

Past CCRMA Open House websites: 2008, 2009, 2010, 2012, 2013, 2016, 2017, 2018.

Facilities on display

Neuromusic Lab: EEG, motion capture, brain science...

Listening Room: multi-channel surround sound research

Max Lab: maker / physical computing / fabrication / digital musical instrument design

Hands-on CCRMA history museum

JOS Lab: Isomorphic keyboards: LinnStrument and GeoShred

CCRMA Stage: music and lectures

Studios D+E: Sound installations

Recording Studio: Sound installation and studio tours

Schedule for the Stage

Lectures

10:20-10:40 Scott Oshiro - Networked Music Performance and Cross-Cultural Exchange

10:40-11:00 Jaehoon Choi, Noah Fram, Jonathan Berger - Jazz MLU Research Project

11:00-11:20 Ge Wang - Artful Design: Technology in Search of the Sublime!

11:20-11:40 Elliot K. Canfield-Dafilou, Eoin F. Callery, Jonathan S. Abel, Jonathan J. Berger - A Method for Studying Interactions between Music Performance and Rooms with Real-Time Virtual Acoustics

11:40-12:00 Fernando Lopez-Lezcano - SpHEAR project update

[lunch break]

1:40-2:00 Nick Porcaro, Julius Smith, Pat Scandalis - Adding AUv3 plugin support to a standalone iOS app

2:00-2:20 Romain Michon - What's New With Faust?

2:20-2:40 Gregory Pat Scandalis, Julius Smith - Running Faust Algorithms on a SHARC Audio Module

Concert (2:45-4:15)

Jordan Rudess Exploring Deep Sampling with Analog Synthesis

Scott Oshiro CYPHER

Alkimiya Xfer (Barbara Nerness and Stephanie Sherriff) 417

Jaehoon Choi Guitar Improvisation with ChucK

Constantin Basica, Teodor Basica (lyrics) Chatbots

Fernando Lopez-Lezcano Pia[NOT] Etude

Douglas McCausland {IMP}ulse

Hassan Estakhrian Corivade

Exhibits (lectures, performances, demos, posters...) More will be added in the coming weeks.

String exciter glove

Patricia Alessandrini, Konstantin Leonenko (Goldsmiths)

This instrument was designed over the past year at Goldsmiths as an extension of the 'piano machine' project, which consists of a set of 'fingers' excited by small vibration motors and controlled by a MIDI keyboard or sensors in performance. This is a glove version to be used on the harp, piano or other string instruments directly by a performer, with the degree of voltage controlled by a pedal. It was first used in performance on the harp by Jutta Troch of the Tiptoe Company in the Transit Festival 2018 (Belgium), and was recently performed on the piano by Julie Herndon as part of the fff collective. See more details at http://patriciaalessandrini.com/pianomachine

Pettable Instrument. Location: MaxLab (201), Time: all day

12 Sentiments for VR

Jack Atherton, Part of the CCRMA VR Lab demos

12 Sentiments for VR is an exploration of the emotional life cycle of a plant. Grow, catch sunlight, spawn seedlings, travel on the wind in search of a new home, be lost, be found, move, and be still. Through 12 sentiments of longing, exhilaration, mourning, serenity, frenzy, the sublime, and more, take some time to focus a little more on being rather than doing. Created as part of the new CCRMA VR/AR lab.

Demo - VR. Location: VR Wonderland (211), Time: all day

Chatbots

Constantin Basica, Teodor Basica (lyrics)

Constantin Basica performs a revised sketch from his opera "Knot an Opera!". The lyrics have been assembled from several of his brother’s poems and translated from Romanian with Google Translate, with all the quirks specific to the system.

Video documentation of the entire opera can be found here: http://knot-an-opera.constantinbasica.com

Live Musical Performance. Location: Stage (317), Time: Concert (2:45-4:15) piece 5/8

Recent CCRMA Studio Modifications

Eoin Callery, Elena Georgieva

Eoin will be on hand between 1:00 and 3:00 to talk about recent studio upgrades and ongoing remodeling.

Poster/Demo. Location: Control Room (127), Time: 1:00-3:00

A Method for Studying Interactions between Music Performance and Rooms with Real-Time Virtual Acoustics

Elliot K. Canfield-Dafilou, Eoin F. Callery, Jonathan S. Abel, Jonathan J. Berger

An experimental methodology for studying the interplay between music composition and performance and room acoustics is proposed, and a system for conducting such experiments is described. Separate auralization and recording subsystems present live, variable virtual acoustics in a studio recording setting, while capturing individual dry tracks from each ensemble member for later analysis. As an example application, acoustics measurements of the Chiesa di Sant'Aniceto in Rome were used to study how reverberation time modifications affect the performance of a piece for four voices and organ likely composed for the space. Performance details, including note onset times and pitch tracks, are clearly evident in the recordings. Two example performance features are presented illustrating the reverberation time impact on this musical material. To participate in a live demonstration, visit the accompanying installation Church Rock: Reviving the Lost Voices of Architectural Spaces.

15-minute Presentation/Lecture. Location: Stage (317), Time: 11:20-11:40

Church Rock: Reviving the Lost Voices of Architectural Spaces

Elliot K. Canfield-Dafilou, Eoin F. Callery, Jonathan S. Abel, Talya Berger, Jonathan Berger, Stephen Sano, Stanford Chamber Chorale

A virtual choir, the Stanford Chamber Chorale, will perform music by Felice Anerio (1560–1614) in a real-time virtual acoustic environment using loudspeakers and room microphones. Come "join the choir" and sing along in a simulation of Chiesa di Sant'Aniceto from Rome's Palazzo Altemps and other virtual acoustic spaces. To learn more about the project, come to the accompanying lecture A Method for Studying Interactions between Music Performance and Rooms with Real-Time Virtual Acoustics.

Installation. Location: Recording Studio (124), Time: 10:00-12:00 and 1:00-3:00

Pipeline

Doga Cavdir

Pipeline is a custom-made brass pan flute. It is a manually machined acoustic instrument with air-tight brass tubes, a custom-tuned scale, and a curved geometry. See more detailed documentation at: http://www.dogacavdir.com/pipeline-si5x

Pettable Instrument. Location: MaxLab (201), Time: all day

Jacktrip on Raspberry Pi

Chris Chafe, Scott Oshiro

The jacktrip application for wide area network music performance has been ported to Raspberry Pi. The present setup runs Fedora 29 with the xfce desktop on a Model 3 B+ in conjunction with standard, low-cost stereo USB soundcards. We describe all the steps from initial OS installation through building and running jacktrip.

Demo. Location: Studio E (320), Time: all day

InterFACE: New Faces for Musical Expression

Deepak Chandran

InterFACE is an interactive system for musical creation, mediated primarily through the user’s facial expressions and movements. It aims to take advantage of the expressive capabilities of the human face to create music in a way that is both expressive and whimsical. We present the design behind these instruments and consider what it means to be able to create music with one’s face. For more info: https://ccrma.stanford.edu/~deepak/projects/interface/

Poster/Demo. Location: Ballroom (216), Time: all day

thARemin

Deepak Chandran

A virtual theremin-esque "instrument" on iOS

Demo. Location: Ballroom (216), Time:

Guitar Improvisation with ChucK

Jaehoon Choi

This project experiments with improvised performance that combines harmony and textural sound. The performance was an improvisation based on the jazz standard 'Misty' with ChucK. The ChucK code samples the live sound in real time every 6 seconds and randomly changes the playback rate to 2, 0.5, or 1. The performer responds to the randomly sampled sound while performing the tune. The project is still in progress, and further development is planned. It was performed in 8 channels.

(https://ccrma.stanford.edu/~j3819443/portfolio/misty.html)
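The random playback-rate idea described above can be sketched as follows. This is a minimal illustration in Python rather than the piece's actual ChucK code, which is not shown here; the function names and the use of linear interpolation are assumptions. Only the three rates and the 6-second period come from the description.

```python
import random

RATES = (2.0, 0.5, 1.0)   # playback rates named in the description
SAMPLE_PERIOD_S = 6.0     # a new snippet is captured every 6 seconds

def resample(buffer, rate):
    """Play `buffer` back at `rate` using linear interpolation (assumed method)."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    out = []
    pos = 0.0
    last = len(buffer) - 1
    while pos <= last:
        i = int(pos)
        frac = pos - i
        nxt = buffer[min(i + 1, last)]
        out.append((1.0 - frac) * buffer[i] + frac * nxt)
        pos += rate
    return out

def next_playback(buffer, rng=random):
    """Pick one of the three rates at random and render the snippet at that rate."""
    rate = rng.choice(RATES)
    return rate, resample(buffer, rate)
```

A rate of 2 halves the snippet's length and raises it an octave, 0.5 doubles the length and lowers it an octave, and 1 leaves it unchanged.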

Live Musical Performance. Location: Stage (317), Time: Concert (2:45-4:15) piece 4/8

Jazz MLU Research Project

Jaehoon Choi, Noah Fram, Jonathan Berger

The Jazzomat database provides a large number of jazz solo transcriptions in various formats, along with their metadata. Researchers on the Jazzomat team labeled short snippets of jazz solos with 9 categories called Mid-Level Units (MLUs). By applying computational analysis to this dataset, we are trying to find interesting musical observations about jazz vocabulary throughout its history. In this presentation, I will discuss the current state of this research project and our future plans.

15-minute Presentation/Lecture. Location: Stage (317), Time: 10:40-11:00

NewMixer

Jatin Chowdhury

NewMixer is a non-traditional mixing tool, meant to break free of the typical "virtual mixing board" mixing tools present in most modern Digital Audio Workstations. Rather than representing audio tracks as channel strips on a hardware mixer, NewMixer visualizes tracks as sound sources in space. This visualization allows for more intuitive controls over basic mixing functions such as panning, reverberance, and gain, while also giving the user visual cues to help them achieve the width and depth they desire in their mix.

Pettable Instrument. Location: MaxLab (201), Time: all day

Web Audio Module (WAM) Distortion

Jatin Chowdhury

Web Audio Modules (WAMs) are similar to VST plugins for your web browser, and have been growing in popularity along with advancements in the Web Audio and Web MIDI APIs. WAM Distortion is a proof of concept for porting VST plugins to WAMs. Using the Emscripten compiler it is possible to compile C/C++ code into WebAssembly (WASM), and then from WASM to JavaScript. To test this process, I took a VST distortion plugin made in C++, compiled it into WASM, and wrapped it into a Web Audio AudioProcessorNode in JavaScript.

Pettable Instrument. Location: MaxLab (201), Time: all day

Foot Piano

Marina Cottrell, Part of the CCRMA VR Lab demos

Foot Piano is a giant piano to be played by your feet. Inspired by the piano in the movie "Big" (starring Tom Hanks), it uses Vive trackers strapped to your feet to see where your feet are at all times. Play songs by jumping or running around the keyboard and even get some exercise while you're at it! Three different gameplay modes allow you to play pre-loaded songs in a Guitar Hero-like game, play your own songs at your own pace in "freeplay" and even shoot spaceships in the space invader mode. Created as part of the new CCRMA VR/AR lab.

Demo - VR. Location: VR Wonderland (211), Time: all day

Demonstration of EEG Capping Procedure

Tysen Dauer, Emily Graber, Irán R. Román

In the Neuromusic Lab, neural activity is recorded by electroencephalography (EEG). This short video shows the preparation that must be done before EEG data is gathered. This includes fitting a cap, impedance matching each electrode, and running the data acquisition system.

Video Demonstration (~4 minute loop). Location: Neuromusic Lab (103), Time: all day

Corivade

Hassan Estakhrian

Corivade is a structured-improvised piece for voice, electric bass, and electronics with secret sensors manipulating the audio in real-time. It is dispersed to 8 channels and then diffused to ambisonics (when applicable). antennafuzz.com

Live Musical Performance. Location: Stage (317), Time: Concert (2:45-4:15) piece 8/8

VASHE: Physically Based Sound (and Haptics) for VR

Charles Foster, Part of the CCRMA VR Lab demos

Interactive sound and haptics present unique needs, not natively supported by the game engines on which virtual worlds are typically built. The Virtual Acoustic Sound and Haptics Engine (VASHE) project from the CCRMA VR Lab aims to design a free, open-source pipeline for real-time, physically-based sound synthesis in V/AR. Among the core values of VASHE is to enable true virtual audio-haptic play (tapping, striking, scraping, throwing, etc). Previous research and video demos are presented, as well as a brief interactive demo. See https://ccrma.stanford.edu/~cfoster0/VASHE/ for more info and to stay in the loop of future development.

Demo - VR. Location: VR Wonderland (211), Time: all day

Why You Should Practice in a Dead Room

Toby Frager, Hannah Cussen, Leon Bi

Perhaps the worst part of practicing is hearing our mistakes. But the process of self-examination is important. The acoustics of our practice spaces contribute to the ability to hear ourselves play, and hence to our ability to improve. This investigation reveals the advantages of practicing in dead rooms as opposed to live ones, which forces us to be responsible for what we play. This investigation highlights starkly some of the phenomena that occur when we change the acoustics of the room and tells us why we ought to practice in spaces which make us sound bad.

Poster/Demo. Location: Grad Workspace (305), Time: all day

Surprisal, Liking, and Musical Affect

Noah Fram

Formulation and processing of expectation has long been viewed as an essential component of the emotional, psychological, and neurological response to musical events. There are multiple theories of musical expectation, ranging from a broad association between expectation violation and musical affect to precise descriptions of neurocognitive networks that contribute to the perception of surprising stimuli. In this paper, a probabilistic model of musical expectation that relies on the recursive updating of listeners' conditional predictions of future events in the musical stream is proposed. This model is defined in terms of cross-entropy, or information content given a prior model. A probabilistic program implementing some aspects of this model with melodies from Bach chorales is shown to support the hypothesized connection between the evolution of surprisal through a piece and affective arousal, indexed by the spread of possible deviations from the expected play count.
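As a rough illustration of the quantities named above, the information content (surprisal) of an event is -log2 of its conditional probability, and cross-entropy is the mean surprisal over a sequence. The sketch below uses a static bigram model with add-one smoothing, which is a deliberate simplification and not the recursive-updating model proposed in the paper; the function names and the toy note sequences are hypothetical.

```python
import math
from collections import Counter, defaultdict

def train_bigram(melodies):
    """Count note-to-note transitions over a list of melodies."""
    counts = defaultdict(Counter)
    alphabet = set()
    for melody in melodies:
        alphabet.update(melody)
        for prev, nxt in zip(melody, melody[1:]):
            counts[prev][nxt] += 1
    return counts, sorted(alphabet)

def probability(counts, alphabet, prev, nxt):
    """P(nxt | prev) with add-one smoothing over the known alphabet."""
    total = sum(counts[prev].values())
    return (counts[prev][nxt] + 1) / (total + len(alphabet))

def surprisal_curve(counts, alphabet, melody):
    """Information content, in bits, of each note given the previous note."""
    return [-math.log2(probability(counts, alphabet, p, n))
            for p, n in zip(melody, melody[1:])]

def cross_entropy(curve):
    """Mean surprisal of the sequence under the model."""
    return sum(curve) / len(curve)

# Toy training data (hypothetical, not Bach chorales).
counts, alphabet = train_bigram([["C", "D", "C", "D"]])
expected = surprisal_curve(counts, alphabet, ["C", "D"])    # frequent transition
unexpected = surprisal_curve(counts, alphabet, ["C", "C"])  # unseen transition
```

Under this toy model the unseen transition C to C carries more bits than the frequent transition C to D, which is the sense in which a surprising event has high information content.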

Poster/Demo. Location: Ballroom (216), Time: all day

Stimuli Jukebox

Takako Fujioka, Keith Cross, Tysen Dauer, Elena Georgieva, Emily Graber, Madeline Huberth, Kunwoo Kim, Michael Mariscal, Barbara Nerness, Trang Nguyen, Irán Román, Ben Strauber, Auriel Washburn

Many experiments happen in the Neuromusic Lab each year, each involving some kind of musical stimuli (sounds that the subjects listen to) and/or musical task (music that the experiment asks the subjects to perform). Throughout the day we will play an assortment of such sounds, to give some of the sonic flavor of the experiments that take place here.

Sounds to Hear. Location: Neuromusic Lab (103), Time: all day

HFM-1MB - HyperAugmented FlameGuitar (Flamenco Guitar) for 1MB (One Man Band)

Carlos A. Sánchez García-Saavedra

Towards a HyperAugmented Flamenco Guitar for a One Man Band. A meta-project and concept that naturally encompasses a plethora of musical, engineering, tech, development, and building projects developed over a lifetime. The goal is to achieve a series of tools (software/hardware/electronics), methodologies, processes and helpers that allow a person with a guitar to be a One Man Band, while minimizing technical thinking in favor of musical flow, performance and creative thinking. "More Music (playing and creating), Less Tech (clicking, typing, touching)". Portability and Lightness, Ease and Simplicity, Freedom and Openness, Privacy and Security, Cheap and portable, DIY.

Poster/Demo. Location: Carlos Office (207), Time: all day

HitPredict: Predicting Billboard Hits Using Spotify Data

Elena Georgieva, Marcella Suta, Nicholas Burton

The Billboard Hot 100 Chart remains one of the definitive ways to measure the success of a popular song. In this project, we used audio features from the Spotify Web API and machine learning techniques to predict which songs will become Billboard hits. We are able to predict the Billboard success of a song with ~75% accuracy using machine-learning algorithms, most successfully Logistic Regression and a Neural Network. Learn more: https://ccrma.stanford.edu/~egeorgie/HitPredict/hitpredict.html

Poster/Demo. Location: Grad Workspace (305), Time: all day

SeekFreek

Benjamin Josie

SeekFreek is a MIDI Sequencer built on the Raspberry Pi. The circular design is a way to rethink the way we sequence MIDI notes.

See the full project documentation at: http://benjaminjosie.com/seekfreek

Pettable Instrument. Location: MaxLab (201), Time: all day

ToeCandy

Benjamin Josie

ToeCandy is a MIDI expression pedal built using silicone pads, force sensors and a teensy microcontroller. It allows the user to utilize MIDI expression with their feet in three dimensions. Each silicone pad has two sensors, allowing the user to apply weight laterally to each pad, or vertically across the two.

Full project documentation can be found at http://benjaminjosie.com/ToeCandy

Pettable Instrument. Location: MaxLab (201), Time: all day

Side Effects (2017)

Jaroslaw Kapuscinski, Kacper Kowalski

Side Effects started as a photographic documentary by Kacper Kowalski that was shown in exhibitions and was published as a book. It features complex relationships between people and nature as seen from 150 meters above ground. The estranged perspective revealed unexpected metaphoric and structural dimensions that inspired Jaroslaw Kapuscinski to propose an audiovisual collaboration. Some of the aerial shots existed as video footage offering natural space-time for musical interpretation. The two artists collaborated closely on video editing and musical composition to offer a personal guided experience of 10 locations in the north of Poland. Each movement is titled by the geographic coordinates of the shown place. The work was commissioned by Spoleto USA Festival in 2017. The piano part in this video was performed by Jenny Q Chai. Side Effects will be on display at CCRMA through March.

Audiovisual Exhibition. Location: Lobby (116), Time: 4:30 and beyond

November 20th

Kimberly Kawczinski

This four-part interactive installation describes the soundscape of San Francisco on November 20th, 2018. Each scene slows the busy city into captures of sonic moments unique to San Francisco. Explore the spaces by contributing your own sounds, and experience how the soundscape responds to reveal faces of the city on this day that may have otherwise passed you by.

Interactive Installation. Location: Studio D (221), Time: all day

The Fisherman

Kunwoo Kim, Part of the CCRMA VR Lab demos

The Fisherman is an interactive audiovisual last-will in the medium of virtual reality, in which you fish perspectives of human qualities such as money, time, and empathy. Fishing each of them allows you to enter a scene that abstractly represents it, from conveyor-belt restaurants, a tower of metamorphic shadow puppet shows, to rainy internal bodies. The scenes progress as you interact with various objects and characters with corresponding real-time audiovisual feedback. After you explore all the perspectives, you fish yourself for the last time, and realize an important truth. The Fisherman is a project from CCRMA VR/AR lab.

Demo - VR. Location: VR Wonderland (211), Time: all day

Sonifying Incarceration Rates of France, United Kingdom, Iceland, and United States (from 2000-2016)

Gaby Li

The goal of this project was to sonify the incarceration rates of the four countries France, United Kingdom, Iceland, and the United States (from 2000-2016) in a way that humanizes prisoner statistics and leaves a more resounding impression on the listener. I inversely related the volumes of a song written by a prisoner and the sound of chains rattling in order to emphasize the correlation between higher incarceration rates and a country’s mentality to keep prisoners in and away from their family and society.

Poster/Demo. Location: Data Aesthetics Wonderland (303), Time: all day

SpHEAR project update

Fernando Lopez-Lezcano

The SpHEAR (Spherical Harmonics Ear) project's aim is to create completely open designs for DIY Ambisonics microphones, including 3D printable CAD models, electronic circuits and PCB designs, and the software needed for measuring and calibrating the microphones. First and second order microphones with up to eight electret capsules are currently part of the project.

15-minute Presentation/Lecture. Location: Stage (317), Time: 11:40-12:00

Pia[NOT] Etude

Fernando Lopez-Lezcano

When is a piano not a piano any longer? Does it need physical keys? Does it want a respectable tuning? How about a nicely finished veneer? Working dampers? We postulate that none of those are really needed. This short Etude explores some of the sounds and resonances of one of the piano skeletons recorded as part of the Weathered Pianos project and "tuned" over a year of exposure to California weather patterns. This big database of sounds is explored by SuperCollider instruments and algorithms. These recordings of all kinds of percussion sounds were captured in our Recording Studio using first and second order Ambisonics microphones created by the composer as part of the SpHEAR project.

Musical Performance. Location: Stage (317), Time: Concert (2:45-4:15) piece 6/8

{IMP}ulse

Douglas McCausland

“{IMP}ulse” is a piece for guitar and live electronics originally written as one of a number of proof-of-concept works while I was composing and creating the tech for my composition “Black Amnesia”. In this short piece I am testing a real-time analysis system which is being implemented in order to derive digital synthesis behaviors directly from the spectral / temporal content of an input signal. Musically, the compositional goal here was to explore gesture, timbre, and dynamics as a method of control for the system. The result of this interaction is a short but chaotic piece which harshly juxtaposes different timbral materials in fragmentary bursts from both the acoustic and electronic voices.

Live Musical Performance. Location: Stage (317), Time: Concert (2:45-4:15) piece 7/8

What's New With Faust?

Romain Michon

Faust (https://faust.grame.fr) is a functional programming language for real-time audio signal processing. Its development is very active and new features keep being added! During this short presentation, we'll give an overview of what's new in the world of Faust. We'll also talk about ongoing developments and future directions.

15-minute Presentation/Lecture. Location: Stage (317), Time: 2:00-2:20

Bare-Metal DSP on the Teensy and the Raspberry Pi With Faust

Romain Michon

We present a series of tools to use the Teensy and the Raspberry Pi to carry out bare-metal, low-latency, real-time audio signal processing using the Faust programming language.

Pettable Instrument. Location: MaxLab (201), Time: all day

Digital Ghosts

Trijeet Mukhopadhyay

Digital Ghosts is an interactive installation piece which illuminates the interconnected digital world we live in through an audiovisual rendition of network data flow around it. By making the invisible visible, the installation invites you to discover the very actively buzzing digital ecosystem that is flying around us.

Is this a miracle of modern technology, or have we gone too far and we're stuck in too deep?

Installation. Location: Lounge (313), Time: all day

417

Alkimiya Xfer (Barbara Nerness and Stephanie Sherriff)

Alkimiya Xfer is an ambient noise duo consisting of Barbara Nerness and Stephanie Sherriff. Through the incorporation of live streamed police scanner communications, their composition, 417, attempts to create a sonic experience structured around the observation of real-time events in which they explore themes of surveillance and darkness with electronic and analog sounds. Using 5th order ambisonics and custom interfaces, the duo creates immersive soundscapes illuminating the myriad technologies that are listening to and watching us. 417 is the Alameda County police code for “Disturbance”.

Live Musical Performance. Location: Stage (317), Time: Concert (2:45-4:15) piece 3/8

Doublevision

Barbara Nerness

Doublevision is a video installation that turns audio and movement into visuals. The visuals are created using only audio signals by exploiting old CRT television technology. The work aims to remove the boundary between audio and video as physical media, and highlight the perceptual distinction. Ambient sounds and movements affect the visuals in real time, hence the name Doublevision.

The horizontal plane in front of the screen has been mapped to 16 different color patterns, which you can activate with your movement. If you make sounds, they will increase the brightness of the visuals. Have fun!

Installation. Location: Lobby (116), Time: 10:00-4:00

VocaLoop: A Real-Time Voice2midi Looper

Camille Noufi

VocaLoop is a real-time, voice-based interface to capture and retain musical thoughts. The program provides users a platform to easily sing, layer and play back melodic phrases and drum beats that are quantized in both pitch and time. The interface provides flexibility in the length and tempo of the loop, as well as the timbre/instrument of each recorded layer during real-time playback. It is a fun solution to the lack of mechanisms that can digitally transcribe a polyphonic musical idea simply using the voice.

Pettable Instrument. Location: MaxLab (201), Time: all day

Networked Music Performance and Cross-Cultural Exchange

Scott Oshiro

This talk will discuss the technical challenges, psycho-perceptual elements and the music interactions that emerge in Networked Music Performances (NMP). The final performance of MUSIC 153 (Online Jamming) will be analyzed identifying these elements through the use of Music Information Retrieval (MIR) techniques. We will also discuss how recent advancements in network and communication technologies may integrate with NMP, and why analyzing musical interactions over the network on the basis of genre, culture, and personal experience is critical.

15-minute Presentation/Lecture. Location: Stage (317), Time: 10:20-10:40

CYPHER

Scott Oshiro

CCRMA’s MUSIC 153 (Online Jamming) course participated in a Networked Music Performance (NMP) connecting musicians from CCRMA, University of California Riverside, and Tromso, Norway. The musicians were Scott Oshiro – Flute & Alto Flute, Chris Chafe – Cello, Shuxin Meng – Pipa, Mike Phia Vang – Violin, Glen Langdon – Piano, Geir Davidsen – Euphonium, Ethan Castro – Synth & Voice. The performance was a proof of concept, experimenting with different performance methods and approaches for NMP. We performed 3 different compositions, each with a different musical focus. These included a Nordic Chant 'Predicasti Dei Care', Steve Reich’s 'Clapping Music', and an open improvisation that experimented with altering/changing the network connection between the musicians during the performance. To create CYPHER, two samples from this performance were layered and played back at various speeds and directions using the ChucK programming language.

Remix of Pre-recorded Network Improvisation. Location: Stage (317), Time: Concert (2:45-4:15) piece 2/8

Adding AUv3 plugin support to a standalone iOS app

Nick Porcaro, Julius Smith, Pat Scandalis

Apple Audio Unit Version 3 (AUv3) App Extensions are a robust audio plugin format that can shield users from rogue or unstable plugins. But the cost of this robustness can be large to plugin developers. This talk will cover the challenges encountered when adding AUv3 support to GeoShred.

15-minute Presentation/Lecture. Location: Stage (317), Time: 1:40-2:00

Measurements of the Voicing Process of Acoustic Guitar Tops

Mark Rau

Measurements of two acoustic guitar top plates were taken during the brace carving process, often referred to as "voicing the top". The change in modal structure of the guitar tops was analyzed to gain insight into the carving choices made by the luthiers, and to confirm their goal of making the guitar tops more resonant.

Poster/Demo. Location: Ballroom (216), Time: 10:00-2:00

Brain Data Analysis with the Open Source MNE Python Toolbox

Irán R. Román

MNE is a free and powerful toolbox to analyze time-series brain data. In this demo we will cover the basic operations to carry out an Event-Related Potential (ERP) analysis on EEG data recorded while people listen to musical chords.
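The core arithmetic behind an ERP can be sketched in a few lines: cut a window around each stimulus event, subtract the pre-stimulus baseline, and average across events. The plain-Python sketch below illustrates that idea for a single channel only; it is not MNE code and not the demo's actual pipeline, and the function names and parameters are hypothetical.

```python
def extract_epochs(signal, event_samples, pre, post):
    """Cut baseline-corrected windows of `pre + post` samples around each event."""
    epochs = []
    for ev in event_samples:
        start, stop = ev - pre, ev + post
        if start < 0 or stop > len(signal):
            continue  # skip events too close to the edges of the recording
        segment = signal[start:stop]
        # Baseline: mean of the pre-stimulus samples in this window.
        baseline = sum(segment[:pre]) / pre if pre > 0 else 0.0
        epochs.append([v - baseline for v in segment])
    return epochs

def average_erp(epochs):
    """Average the epochs sample by sample to obtain the ERP waveform."""
    if not epochs:
        raise ValueError("no epochs to average")
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]
```

Averaging works because activity that is time-locked to the stimulus adds up across trials, while unrelated background activity tends to cancel.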

Demo. Location: Stage (317), Time: 10:00-10:20

Exploring Deep Sampling with Analog Synthesis

Jordan Rudess

Jordan Rudess (Dream Theater) explores deep sampling and analog synthesis on the Prophet X synthesizer keyboard, along with an iPad performance in which the iOS instrument GeoShred controls the amazing granular-based Spacecraft application.

Live Musical Performance. Location: Stage (317), Time: Concert (2:45-4:15) piece 1/8

Running Faust Algorithms on a SHARC Audio Module

Gregory Pat Scandalis, Julius Smith

Faust is a functional programming language for audio signal processing with support for sound synthesis. Faust code can be compiled and deployed for many different targets via a wrapping system called an "Architecture". An Architecture has been developed to migrate compiled Faust code to the SHARC Audio Module developer board. As an example, a classic analog synthesizer and effects chain written in Faust can be run efficiently on the SHARC Audio Module. This presentation will give a brief overview of Faust and how Faust can be targeted to run on a SHARC Audio Module.

15-minute Presentation/Lecture. Location: Stage (317), Time: 2:20-2:40

Modal Synthesis of a Drumset

Travis Skare

A demo station with a selection of physically-modeled cymbals, toms, snare drums, bass drums, and cowbells. Instruments are modeled with modal synthesis, using a filterbank of high-Q phasor filters. Performance controls allow for effects such as time stretching, pitch shifting, exciters, and envelope control.

Because a high number of modes per instrument, or a high number of instruments in an ensemble, stresses CPU resources, we investigate running the filterbank on a GPU.
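A modal filterbank of this kind can be sketched as a set of complex one-pole resonators, one per mode, whose real parts are summed. The sketch below assumes that "phasor filter" refers to the recursion y[n] = p * y[n-1] + x[n] with a complex pole p; the parameter names, the T60-based decay, and the example values are illustrative assumptions, not the demo's actual implementation.

```python
import cmath
import math

def make_mode(freq_hz, t60_s, gain, sample_rate):
    """Return (pole, gain) for one mode that decays by 60 dB in `t60_s` seconds."""
    radius = 10 ** (-3.0 / (t60_s * sample_rate))
    pole = radius * cmath.exp(2j * math.pi * freq_hz / sample_rate)
    return pole, gain

def render(modes, excitation, num_samples):
    """Run the excitation through every mode and sum the real parts."""
    states = [0j] * len(modes)
    out = []
    for n in range(num_samples):
        x = excitation[n] if n < len(excitation) else 0.0
        sample = 0.0
        for k, (pole, gain) in enumerate(modes):
            # One complex multiply-add per mode per sample.
            states[k] = pole * states[k] + x
            sample += gain * states[k].real
        out.append(sample)
    return out

# Hypothetical two-mode "drum" struck with a unit impulse.
modes = [make_mode(180.0, 0.4, 1.0, 48000), make_mode(331.0, 0.25, 0.5, 48000)]
strike = render(modes, [1.0], 480)
```

Each mode is updated independently of the others, which is why the per-sample inner loop lends itself to parallel execution when the mode count grows large.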

Demo. Location: Ballroom (216), Time: all day

JOS Lab: Isomorphic Jam, Far Field Synthesis, DSP Research

Julius Smith

Time to make some noise in JOS Lab (Knoll 306). Two isomorphic keyboards will be on display: a LinnStrument donated to CCRMA by Roger Linn, and GeoShred running on an iPad. Additionally, the eight-channel Meyer array will be set up for listening to sampled plane-wave synthesis for a movable noise source, which is equivalent to wave field synthesis when the source is far away. Finally, various research project descriptions will be hanging on the walls as conversation starters. JOS plans to be there when there is no scheduled conflict, including after all scheduled events. Come jam!

Demo / Discussion / Jam. Location: JOS Lab (306), Time: TBD and later

Audio2Images - Generating Images from Audio

Ting-Wei Su, Chih Wen Lin

We combined a state-of-the-art audio signal encoder with a deep generative model to generate images from sounds. Our model, Audio2Img, is trained and evaluated on audio and images from a subset of AudioSet. This work shows preliminary results of generating images based on audio data and points out a need for higher-quality audio-visual datasets to bridge the gap between audio and visual learning. (For more information: https://nips2018creativity.github.io/doc/Generating_Images_from_Audio.pdf )

Poster/Demo. Location: Ballroom (216), Time: 1:30-3:00

Global Conflict Observatory

Dawson Verley

When the first high-resolution satellite images of earth at night were published, development and democracy became visible. While South Korea, for example, is blanketed by lights, North Korea is starkly dim. As it turns out, nighttime lights tell a similar story about conflict. Over time, we can watch cities like Aleppo in Syria and Hodeida in Yemen as their footprints expand and contract under the burdens of war. The Global Conflict Observatory is an interactive audiovisual map that allows users to explore satellite data that reflects the human cost of wars around the world.

Demo. Location: Data Aesthetics Wonderland (303), Time:

Artful Design: Technology in Search of the Sublime!

Ge Wang

What is the nature of design, and the meaning it holds in human life? What does it mean to design well? To design ethically? How can the shaping of technology reflect our values as human beings? Drawing from Ge’s new book, Artful Design: Technology in Search of the Sublime, this talk will examine everyday examples of design (computer music instruments, tools, toys, and social experiences) to consider how we shape technology and the ways in which technology, in turn, shapes our society and ourselves.

15-minute Presentation/Lecture. Location: Stage (317), Time: 11:00-11:20

Artful Design: Technology in Search of the Sublime!

Ge Wang

What is the nature of design, and the meaning it holds in human life? What does it mean to design well? To design ethically? How can the shaping of technology reflect our values as human beings? Drawing from Ge’s new book, Artful Design: Technology in Search of the Sublime, this poster introduces artful design through everyday examples of design (computer music instruments, tools, toys, and social experiences) to consider how we shape technology and the ways in which technology, in turn, shapes our society and ourselves.

Poster. Location: Ballroom (216), Time: all day

CCRMA Virtual Reality Lab Demo

CCRMA VR / AR Lab: Ge Wang, Charles Foster, Jack Atherton, Kunwoo Kim, Marina Cottrell

The VR Lab will show VR and related works.

Demo. Location: VR Wonderland (211), Time: all day

SeqBattle - Defend Your Music

Jingjie Zhang

SeqBattle is a hexagonal step sequencer and a chess-like board game. Two players take turns to place their pieces on the hex board. The pieces will not only trigger the sequencer but also cause damage to adjacent enemy pieces. To play this game, one should combine musical motifs with strategies. Protect your pieces, defend your music.

For more information, please visit: https://ccrma.stanford.edu/~jingjiez/256a/hw2/index.html

Demo. Location: Classroom (217), Time: all day