Research Projects

I do research in human-centered music information retrieval, combining music, machine learning, and cognition. For my PhD, I am advised by Dr. Brian McFee and Dr. Pablo Ripollés. I have collaborated with researchers at NYU, Stanford, and UCLA, and with audio technology companies including Universal Audio, Spotify, Smule, and Shazam. I have a GitHub page, several research publications, and a Google Scholar profile.

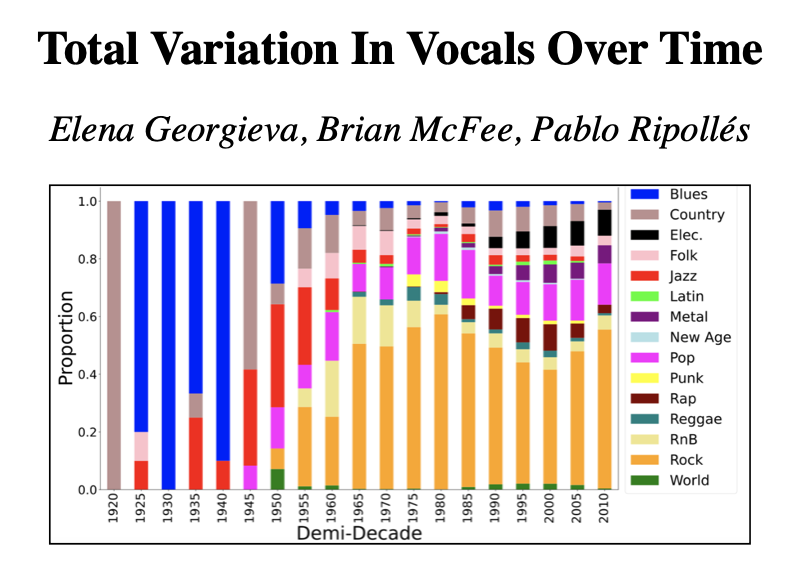

Total Variation in Vocals Over Time

We conduct an exploratory study of 43,153 vocal tracks of popular songs spanning nearly a century, and report trends in total variation over time and between genres.

HitPredict: Using Spotify Data to Predict Billboard Hits

We are able to predict the Billboard success of a song with ~75% accuracy using several machine learning algorithms.

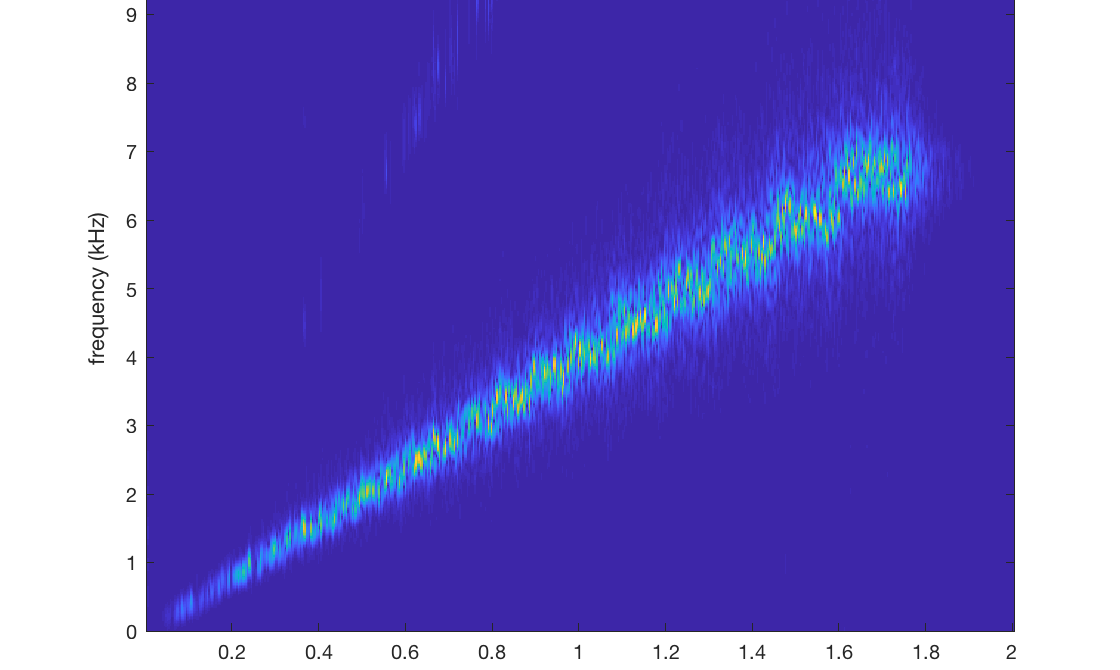

Music Visualizer

A music visualizer I built for my Music, Computing, and Design course with Ge Wang at Stanford.

Instrument Design for a Laptop Opera

I worked with composer Anne Hege and a subset of the Stanford Laptop Orchestra on The Furies: A new LaptOpera.

Vocal Expression

My ISMIR 2020 late-breaking demo paper: An Evaluation Tool for Subjective Evaluation of Amateur Vocal Performances of “Amazing Grace.”

Cochlear Implant Listening

Familiarity, quality, and preference in cochlear implant listening.

Research Publications

E. Georgieva, P. Ripollés, and B. McFee (2023). Total Variation in Vocals Over Time. Poster presentation at the Demonstration Session of IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA). New Paltz, NY, USA.

A. Hege, C. Noufi, E. Georgieva, & G. Wang (2021). Instrument Design for The Furies: A LaptOpera. In New Interfaces for Musical Expression (NIME). Virtual.

E. Georgieva, C. Noufi, V. Rangasayee, B. Kaneshiro, J. Berger (2020). An Evaluation Tool for Subjective Evaluation of Amateur Vocal Performances of “Amazing Grace”. In Extended Abstracts for the Late-Breaking Demo Session of the 21st International Society for Music Information Retrieval Conference (ISMIR). Virtual.

E. Georgieva, M. Suta, N. Burton (2020). HitPredict: Using Spotify Data to Predict Billboard Hits. Machine Learning for Media Discovery Workshop at the International Conference on Machine Learning (ICML). Virtual.

B. Kaneshiro, B. Frisbie, E. Georgieva, and D. P. W. Ellis (2019). Characterizing Musical Correlates of Large-Scale Discovery Behavior. Machine Learning for Music Discovery Workshop at the International Conference on Machine Learning (ICML). Long Beach, USA.

E. Georgieva, M. Suta, and N. Burton (2018). HitPredict: Predicting Hit Songs Using Spotify Data. Poster presentation and formal writeup at the Stanford CS 229: Machine Learning Poster Session. Stanford, USA.

E. Georgieva and B. Kaneshiro (2018). Impact of Familiarity on Music Preference During Simulated Cochlear-Implant Listening. In Extended Abstracts for the Late-Breaking Demo Session of the 19th International Society for Music Information Retrieval Conference (ISMIR). Paris, France.

Acknowledgements

C. Noufi, V. Rangasayee, S. Ciresi, J. Berger, and B. Kaneshiro (2019). A Model-Driven Exploration of Accent Within the Amateur Singing Voice. Machine Learning for Music Discovery Workshop at the International Conference on Machine Learning (ICML). Long Beach, USA.

E. Canfield-Dafilou et al. (2019). A Method for Studying Interactions between Music Performance and Rooms with Real-Time Virtual Acoustics. Audio Engineering Society Convention 146. Audio Engineering Society.

Invited Talks and Posters

Total Variation in Vocals Over Time (2023). Invited Talk, Chicago Audio Engineering Society (AES) Section. Virtual.

The Changing Sound of Music: An Exploratory Corpus Study of Vocal Trends Over Time (2023). Poster presentation at the Speech and Audio in the Northeast (SANE) Workshop. Brooklyn, NY, U.S.A.

Total Variation in Vocals Over Time (2023). Invited Talk, Stanford CCRMA Workshop for Deep Learning for Music Information Retrieval. Virtual.

Music Information Retrieval and Web APIs (2023). Guest Lecture, University of Alaska Anchorage Dept. of Computer Science & Engineering. Virtual.

Music Technology and Hit Songs (2022). Guest Lecture, New York University Music Technology Undergraduate Collegium. New York, N.Y., U.S.A.

Music Information Retrieval and Hit Songs (2022). Guest lecture, Barnard College Computational Sound Course / Columbia University Computer Music Center (CMC) Computer Science Seminar. New York, N.Y., U.S.A.

Vocal Recording, Production and Research (2021). Guest lecture, Stanford University Music 192: Sound Recording. Virtual.

Vocal Recording, Production and Research (2020). Guest lecture, Stanford University Music 192: Sound Recording. Virtual.

HitPredict: Using Spotify Data to Predict Billboard Hits (2019). Berkeley Synthesis Conference: Interdisciplinary Collaboration in Computational Music Research. UC Berkeley, Berkeley, USA.

Women in Music Information Retrieval (2019). Invited informational talk, Bay Innovative Signal Hackers Bash (BISH Bash) Meetup. Dolby Labs, San Francisco, USA.

Music Recommendation Systems for Listeners with Hearing Loss (2019). Invited talk, Bay Innovative Signal Hackers Bash (BISH Bash) Meetup. Pandora Radio, Oakland, USA.

HitPredict: Predicting Hit Songs Using Spotify Data (2018). Poster presentation at the Stanford CS 229: Machine Learning Poster Session. Stanford University, USA.

Using Spotify Audio Features to Study the Evolution of Pop Music (2018). Poster presentation at the Workshop for Women in Music Information Retrieval at the 19th International Society for Music Information Retrieval Conference. Paris, France.

Music, Familiarity, and Preference (2018). Poster presentation at Stanford Music and the Brain Symposium: Performance. Stanford University, U.S.A.

Performances

Hege, A., Wang, G., Noufi, C., & Georgieva, E. (2022). The Furies: A LaptOpera - “Don't Sh Stop Please.” In New Interfaces for Musical Expression (NIME) 2022. Virtual.

Hege, A., Wang, G., Noufi, C., & Georgieva, E. (2021). The Furies: A LaptOpera - “Glorious Guilt.” In New Interfaces for Musical Expression (NIME) 2021. Virtual.

Audiovisual performance (2019). Invited solo performance, BrainMind Summit. Stanford University, U.S.A.

Glorious Guilt (2019). Invited performance with the Stanford Sidelobe Laptop Orchestra. Digital Civil Society Conference. Stanford University, U.S.A.