The gradient method is based on the first-order term in the Taylor

expansion for

![]() . By taking a second-order term as well and

solving the quadratic minimization problem iteratively, Newton's

method for functional minimization is obtained. Essentially,

Newton's method requires the error surface to be close to

quadratic, and its effectiveness is directly tied to the

accuracy of this assumption. For most problems, the error surface can

be well approximated by a quadratic form near the solution. For this

reason, Newton's method tends to give very rapid (``quadratic'')

convergence in the last stages of iteration.

. By taking a second-order term as well and

solving the quadratic minimization problem iteratively, Newton's

method for functional minimization is obtained. Essentially,

Newton's method requires the error surface to be close to

quadratic, and its effectiveness is directly tied to the

accuracy of this assumption. For most problems, the error surface can

be well approximated by a quadratic form near the solution. For this

reason, Newton's method tends to give very rapid (``quadratic'')

convergence in the last stages of iteration.

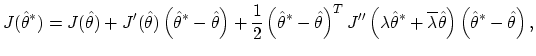

Newton's method is derived as follows: The Taylor expansion of

![]() about

about

![]() gives

gives

Definition. Newton's method is defined by

When

![]() , the

answer is obtained after the first iteration. In particular, when the

error surface

, the

answer is obtained after the first iteration. In particular, when the

error surface

![]() is a quadratic form in

is a quadratic form in

![]() , Newton's

method produces

, Newton's

method produces

![]() in one iteration, i.e.,

in one iteration, i.e.,

![]() for

every

for

every

![]() .

.

For Newton's method, there is the following general result on the existence of a critical point (i.e., a point at which the gradient vanishes) within a sphere of a Banach space.

Theorem. (Kantorovich)

Let

![]() be a point in

be a point in

![]() for which

for which

![]() exists, and set

exists, and set

Proof. See Goldstein [2], p. 143.