Musical Interfaces for Novices: Exploring Structured Interactions

Making music with others is great fun for those with musical skills, but how can we make it accessible to people without musical training? A simple interface for making musical sound is a good start. But once you know how to play, how do you decide what to play?

In this HCI experiment, conducted by myself and Sebastien Robaszkiewicz, we created a simple touch-based instrument on which two users play duets together. To this we added a game-like interaction that directs players to different parts of the "musical space" at different times. We compared the users' experience, and the music they produced, under these "structured interactions" and under unstructured free play.

The results are discussed in the abstract for our 2012 UIST poster.

Here's a demo of the system in action:

Structuring Musical Interactions for Novices - UIST 2012 Demo

LaunchCode: Integrating the Launchpad and Code in Ableton Live

LaunchCode is a set of Python scripts that allow the Novation Launchpad and Livid Code controllers to work together as an integrated controller for Ableton Live.

With LaunchCode the Launchpad and Code use the same session box (the yellow box in Live's session view), and both address the same eight tracks encompassed by the session box.

The idea is to use the Launchpad to launch clips and scenes and the Code to control mixer and device parameters. Details and code are available on the GitHub page.
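To make the shared session box idea concrete, here is a minimal sketch of the bookkeeping involved: a single offset pair that both controllers read, so a Launchpad grid button and a Code encoder column resolve to the same absolute track. This is not LaunchCode's actual code and does not use Live's remote-script API; `SessionBox`, its methods, and the 8×8 scene layout are hypothetical names and assumptions for illustration only.

```python
from dataclasses import dataclass

NUM_TRACKS = 8  # the session box spans eight tracks
NUM_SCENES = 8  # assumption: one Launchpad grid row per scene


@dataclass
class SessionBox:
    """Hypothetical shared session box: one offset pair read by both controllers."""
    track_offset: int = 0
    scene_offset: int = 0

    def move(self, d_tracks: int, d_scenes: int, total_tracks: int, total_scenes: int) -> None:
        # Clamp so the box never extends past the Live set's tracks or scenes.
        max_track = max(0, total_tracks - NUM_TRACKS)
        max_scene = max(0, total_scenes - NUM_SCENES)
        self.track_offset = max(0, min(self.track_offset + d_tracks, max_track))
        self.scene_offset = max(0, min(self.scene_offset + d_scenes, max_scene))

    def launchpad_clip(self, column: int, row: int) -> tuple[int, int]:
        """Map a Launchpad grid button to an absolute (track, scene) clip slot."""
        return self.track_offset + column, self.scene_offset + row

    def code_track(self, column: int) -> int:
        """Map a Code encoder column to the absolute track whose mixer it controls."""
        return self.track_offset + column
```

Because both mappings read the same offsets, moving the box once (for example with `box.move(8, 0, total_tracks=20, total_scenes=16)`) shifts the Launchpad's clip grid and the Code's mixer columns together, so column 2 on either controller then refers to track 10.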

TweetDreams: Music for live Twitter data

TweetDreams is a multimedia music performance piece that uses real-time Twitter data.

The software retrieves, in real time, all tweets containing certain search terms.

The tweets are sonified as short melodies and displayed graphically. Similar tweets are grouped into trees of similar melodies, and new tweets cause neighboring tweets to also play their melodies, creating percolating textures of musical tweets.
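The grouping and percolation behaviour can be sketched roughly as follows. The similarity measure and spreading rule used in TweetDreams are described in our NIME paper, not here, so this sketch swaps in simple assumptions: word-overlap (Jaccard) similarity, attaching each new tweet to its most similar predecessor, and a breadth-first walk where each hop could map to a later onset time. `TweetForest`, `SIMILARITY_THRESHOLD`, and `MAX_HOPS` are hypothetical.

```python
from collections import deque

SIMILARITY_THRESHOLD = 0.2  # assumed: minimum word overlap to join an existing tree
MAX_HOPS = 2                # assumed: how far a new tweet's activity spreads


def words(text: str) -> set[str]:
    """Lower-cased word set with simple punctuation and #/@ stripped."""
    return {w.strip(".,!?#@").lower() for w in text.split()} - {""}


def jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


class TweetForest:
    def __init__(self) -> None:
        self.texts: list[str] = []
        self.neighbors: list[list[int]] = []  # undirected parent/child links

    def add(self, text: str) -> list[tuple[int, int]]:
        """Insert a tweet; return (tweet_index, hop) pairs to play, hop 0 first."""
        new_words = words(text)
        best, best_score = None, SIMILARITY_THRESHOLD
        for i, other in enumerate(self.texts):
            score = jaccard(new_words, words(other))
            if score >= best_score:
                best, best_score = i, score
        idx = len(self.texts)
        self.texts.append(text)
        self.neighbors.append([])
        if best is not None:  # join the most similar tweet's tree as its child
            self.neighbors[idx].append(best)
            self.neighbors[best].append(idx)
        return self._percolate(idx)

    def _percolate(self, start: int) -> list[tuple[int, int]]:
        # Breadth-first walk outward from the new tweet, up to MAX_HOPS links away.
        order, seen, queue = [], {start}, deque([(start, 0)])
        while queue:
            node, hop = queue.popleft()
            order.append((node, hop))
            if hop == MAX_HOPS:
                continue
            for nb in self.neighbors[node]:
                if nb not in seen:
                    seen.add(nb)
                    queue.append((nb, hop + 1))
        return order
```

In this sketch an unrelated tweet starts its own tree and plays alone, while a tweet that resembles earlier ones triggers its tree outward, which is one way to get the "percolating" effect.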

TweetDreams is designed to allow the audience to participate in the music-making process.

During a performance, audience members are invited to tweet, and their tweets are given special musical and visual prominence.

Additionally, during a performance anyone in the world who tweets with one of the search terms becomes an unwitting participant in the piece. In this way TweetDreams creates musical interactions that are both local and global.

TweetDreams was developed by myself, Jorge Herrera, and Carr Wilkerson. It premiered at the MiTo Settembre Musica festival in Milan in 2010 and has been performed at a number of events, including TEDxSV and NIME 2011 in Oslo.

For performance videos, check out the TweetDreams project page, and for more technical details, read our NIME paper.

Here's me discussing the piece at NIME 2011:

SoundBounce: Throwing sounds around with iPhones

SoundBounce is an instrument I developed for the Stanford Mobile Phone Orchestra.

It is implemented as an iPhone app in which players must "bounce" sounds with an upward paddling motion to keep them alive. They can also throw sounds to other players by pointing at them and making a throwing gesture.
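As a rough illustration of the "keep it alive" mechanic, here is a minimal sketch of threshold-based bounce detection. The actual gesture recognition in SoundBounce is covered in my NIME paper; here I simply assume a gravity-compensated upward acceleration value per sample, a fixed threshold with a short refractory period, and a sound whose energy decays until it is bounced again. `BouncingSound` and all constants are hypothetical.

```python
from dataclasses import dataclass

BOUNCE_THRESHOLD = 1.5  # assumed: upward acceleration (in g) that counts as a paddle
REFRACTORY = 0.25       # assumed: seconds to ignore further spikes after a bounce
DECAY_PER_SECOND = 0.5  # assumed: energy lost per second while not being bounced


@dataclass
class BouncingSound:
    energy: float = 1.0
    last_bounce: float = float("-inf")

    def update(self, t: float, dt: float, upward_accel: float) -> bool:
        """Process one accelerometer sample at time t; return True on a bounce."""
        bounced = upward_accel > BOUNCE_THRESHOLD and t - self.last_bounce >= REFRACTORY
        if bounced:
            self.energy = 1.0  # a paddle restores the sound to full energy
            self.last_bounce = t
        else:
            self.energy = max(0.0, self.energy - DECAY_PER_SECOND * dt)
        return bounced

    @property
    def alive(self) -> bool:
        return self.energy > 0.0
```

The refractory period keeps one paddling motion, which may produce several samples above the threshold, from counting as multiple bounces.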

A performance of SoundBounce involves the performers bouncing sounds and throwing them amongst the ensemble in various patterns. The piece ends with a game in which performers can knock out other performers' sounds; the goal is to be the last person standing.

SoundBounce was premiered at the i, MoPho concert at CCRMA in December 2009 and has been played elsewhere, including at NIME 2010 in Sydney.

Here's a video of the premiere performance (thanks to Mashable):

Technical details are available in my NIME paper.

Movement Sonification

I've created a number of sonifications of human movement. Here's a demonstration of sonifying muscle activation in running and walking:

The WaveSaw: A flexible instrument for direct timbral manipulation

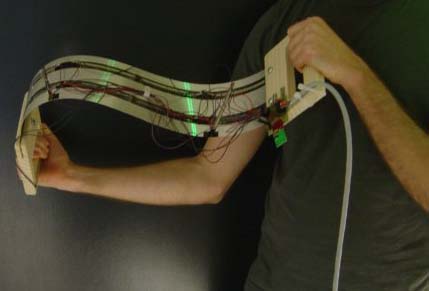

The WaveSaw is a new instrument designed as a CCRMA class project by myself, Nate Whetsell, and John Van Stoecker.

Bend sensors along the "blade" are used to reconstruct its approximate shape in the computer.

This shape is used as one period of an audio waveform (a.k.a. "scanned synthesis"), and it can also be used to shape the spectrum of that sound.
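Here is a minimal sketch of the core scanned-synthesis step: turning a handful of shape samples into one waveform period and reading it out at a chosen pitch. It assumes the bend-sensor readings have already been converted into heights at evenly spaced points along the blade, which is not how the WaveSaw's reconstruction is described here; `shape_to_wavetable` and `render` are hypothetical names, and the linear interpolation and phase-accumulator oscillator are generic choices rather than the instrument's exact method.

```python
def shape_to_wavetable(heights: list[float], table_size: int = 512) -> list[float]:
    """Linearly interpolate blade heights into one normalised waveform period."""
    if len(heights) < 2:
        raise ValueError("need at least two points along the blade")
    n = len(heights)
    table = []
    for i in range(table_size):
        pos = i * (n - 1) / (table_size - 1)  # position along the blade
        j = min(int(pos), n - 2)
        frac = pos - j
        table.append(heights[j] * (1 - frac) + heights[j + 1] * frac)
    # Remove the DC offset and normalise so the waveform peaks at +/-1.
    mean = sum(table) / table_size
    table = [v - mean for v in table]
    peak = max(abs(v) for v in table) or 1.0
    return [v / peak for v in table]


def render(table: list[float], freq: float, seconds: float, sample_rate: int = 44100) -> list[float]:
    """Read the table once per period at `freq` Hz using a phase accumulator."""
    size = len(table)
    phase, step = 0.0, freq * size / sample_rate
    out = []
    for _ in range(int(seconds * sample_rate)):
        i = int(phase)
        frac = phase - i
        # Interpolate between adjacent table entries, wrapping at the period end.
        out.append(table[i] * (1 - frac) + table[(i + 1) % size] * frac)
        phase = (phase + step) % size
    return out
```

In a live instrument the table would be rebuilt whenever the sensor readings change, so bending the blade reshapes the waveform, and therefore the timbre, while the pitch stays under separate control.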

Add in multiple "reflections" of the shape, and timbral insanity ensues.

Here's a demo of the WaveSaw and its features:

The WaveSaw: A Flexible Instrument for Direct Timbral Manipulation

For more details, see our 2007 NIME paper.