Tracking Dynamics of Musical Engagement Using EEG

Self-reports of engagement or preference can suffer from response bias, and providing continuous behavioral responses can distract listeners from the stimulus at hand. We explore objective measures of musical engagement using inter-subject and stimulus-response correlation of EEG and other physiological responses. We study the extent to which temporal organization of acoustical events into music is critical to achieving listener engagement, and investigate relationships between musical engagement and expectation, novelty, and repetition.
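As a rough illustration of the inter-subject correlation idea, the sketch below computes the mean pairwise Pearson correlation across listeners in sliding time windows. It assumes each listener's response has already been reduced to a single time series; the function names `pearson` and `windowed_isc` and the `window` and `hop` parameters are hypothetical, and the published analyses may use a different, multichannel formulation.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length, non-constant sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def windowed_isc(responses, window, hop):
    """Mean pairwise correlation across subjects in sliding time windows.

    responses: one time series per subject (assumed single-component data).
    window, hop: window length and step size, in samples (illustrative names).
    Returns a list of (window_start_index, isc) tuples.
    """
    n_samples = len(responses[0])
    pairs = list(combinations(range(len(responses)), 2))
    result = []
    for start in range(0, n_samples - window + 1, hop):
        stop = start + window
        r_values = [
            pearson(responses[i][start:stop], responses[j][start:stop])
            for i, j in pairs
        ]
        result.append((start, sum(r_values) / len(r_values)))
    return result


# Toy data: three listeners with made-up response values.
toy = [
    [0.0, 1.0, 0.0, 2.0, 1.0, 3.0, 0.0, 1.0],
    [0.1, 0.9, 0.2, 1.8, 1.1, 2.7, 0.3, 0.8],
    [0.0, 1.2, 0.1, 2.1, 0.9, 3.2, 0.1, 1.1],
]
for start, isc in windowed_isc(toy, window=4, hop=2):
    print(start, round(isc, 3))
```

Windows with higher values are those in which listeners' responses move together more closely, which is the kind of time-varying quantity an engagement measure would track.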

Selected publications

Dauer et al. (in revision). Inter-Subject Correlation during New Music Listening: A Study of Electrophysiological and Behavioral Responses to Steve Reich's Piano Phase. bioRxiv 2021.04.27.441708. doi:10.1101/2021.04.27.441708 [preprint] [data]

Kaneshiro et al. (in review). Inter-Subject EEG Correlation Reflects Time-Varying Engagement with Natural Music. bioRxiv 2021.04.14.439913. doi:10.1101/2021.04.14.439913 [preprint] [data]

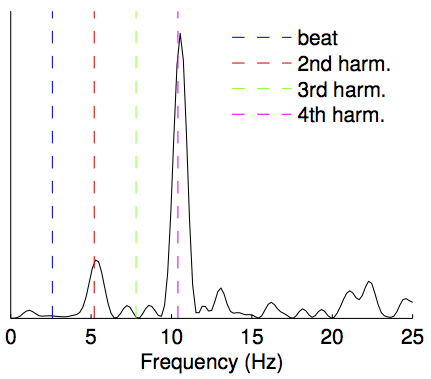

Kaneshiro et al. (2020). Natural Music Evokes Correlated EEG Responses Reflecting Temporal Structure and Beat. NeuroImage 214, 116559. doi:10.1016/j.neuroimage.2020.116559 [web] [data] [code]

Gang et al. (2017). Decoding Neurally Relevant Musical Features Using Canonical Correlation Analysis. In Proceedings of the 18th International Society for Music Information Retrieval Conference, Suzhou, China. doi:10.5281/zenodo.1417137 [pdf] [data] [code]

Kaneshiro (2016). Toward an Objective Neurophysiological Measure of Musical Engagement. Doctoral Dissertation, Stanford University. [pdf] [web]

Musical Engagement Using Commercial Services

Complementing our neuroscience work, we study how users interact with real-world music services. To date we have uncovered relationships between Shazam queries and music structure segmentation using an open dataset of over 100 million queries; used quantitative and qualitative approaches to understand user practices surrounding social playlists; and investigated how music fandoms use social media to connect and organize.

Selected publications

Park & Kaneshiro (2021). Social Music Curation That Works: Insights from Successful Collaborative Playlists. In Proceedings of the 2021 ACM Conference on Computer-Supported Cooperative Work & Social Computing (CSCW) 5, Article 117, 27 pages. doi:10.1145/3449191 [web]

Park et al. (2021). Armed in ARMY: A Case Study of How BTS Fans Successfully Collaborated to #MatchAMillion for Black Lives Matter. In Proceedings of the 39th Annual ACM Conference on Human Factors in Computing Systems (CHI), Article 336, 14 pages. doi:10.1145/3411764.3445353 [web]

Kaneshiro et al. (2019). Characterizing Musical Correlates of Large-Scale Discovery Behavior. Machine Learning for Music Discovery Workshop at the International Conference on Machine Learning (ICML), Long Beach, USA. [pdf]

Park et al. (2019). Tunes Together: Perception and Experience of Collaborative Playlists. In Proceedings of the 20th International Society for Music Information Retrieval Conference, Delft, The Netherlands. doi:10.5281/zenodo.3527912 [pdf]

Kaneshiro et al. (2017). Characterizing Listener Engagement with Popular Songs Using Large-Scale Music Discovery Data. Frontiers in Psychology 8:416. doi:10.3389/fpsyg.2017.00416 [web] [data]

Park & Kaneshiro (2017). An Analysis of User Behavior in Co-Curation of Music Through Collaborative Playlists. In Extended Abstracts for the Late-Breaking Demo Session of the 18th International Society for Music Information Retrieval Conference, Suzhou, China. [pdf]

Representational Similarity Analysis

Representational Similarity Analysis (RSA) involves comparing pairwise distances across a set of stimuli to study the underlying structure of the set, and to compare representations across modalities. We use EEG classification to derive distance measures. This technique serves to reveal dynamics of the representational space over the time course of the brain response, to identify spatial and temporal components of interest in the response, and to compute a neural similarity space that can be compared to perceptual and computational representations.
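To make the classification-to-distance step concrete, here is a minimal sketch that turns a classifier confusion matrix into a symmetric distance matrix and flattens it for comparison with another representation. The conversion shown (row-normalize, average the two confusion directions, subtract from one) is only one simple choice and is an assumption here, not necessarily what MatClassRSA or the listed papers use; the function names are hypothetical.

```python
def confusion_to_distance(confusion):
    """Turn a square confusion matrix of counts into a symmetric distance matrix.

    Assumed convention: rows are true classes, columns are predicted classes,
    and every row contains at least one trial.
    """
    n = len(confusion)
    # Row-normalize so each row holds the estimated P(predicted | true).
    probs = [[count / sum(row) for count in row] for row in confusion]
    dist = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            # Average both confusion directions to get a symmetric similarity.
            similarity = (probs[i][j] + probs[j][i]) / 2
            dist[i][j] = dist[j][i] = 1.0 - similarity
    return dist


def upper_triangle(matrix):
    """Flatten the entries above the diagonal, row by row."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i + 1, n)]


# Made-up confusion counts for three stimulus classes.
confusion = [
    [8, 2, 0],
    [1, 7, 2],
    [0, 3, 7],
]
distances = confusion_to_distance(confusion)
print(upper_triangle(distances))
```

Classes that the classifier confuses more often end up closer together. The flattened upper triangle can then be correlated with the corresponding entries of a perceptual or computational distance matrix, and repeating the procedure on classifiers trained on successive time windows gives a time-resolved view of the representational space.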

Selected publications

Wang et al. (in preparation). MatClassRSA: A Matlab Toolbox for M/EEG Classification and Visualization of Proximity Matrices. bioRxiv 194563. doi:10.1101/194563 [preprint] [code]

Kong et al. (2020). Time-Resolved Correspondences Between Deep Neural Network Layers and EEG Measurements in Object Processing. Vision Research 172, 27-45. doi:10.1016/j.visres.2020.04.005 [web]

Losorelli et al. (2020). Factors Influencing Classification of Frequency Following Responses to Speech and Music Stimuli. Hearing Research 398, 108101. doi:10.1016/j.heares.2020.108101 [web] [data] [code]

Kaneshiro et al. (2015). A Representational Similarity Analysis of the Dynamics of Object Processing Using Single-Trial EEG Classification. PLoS ONE 10:8, e0135697. doi:10.1371/journal.pone.0135697 [web]

Kaneshiro et al. (2012). An Exploration of Tonal Expectation Using Single-Trial EEG Classification. In Proceedings of the 12th International Conference on Music Perception and Cognition and the 8th Triennial Conference of the European Society for the Cognitive Sciences of Music, Thessaloniki, Greece. [pdf]

Research Tools and Datasets

To promote reproducible and cross-disciplinary research, our group makes its experimental data and analysis software publicly available to the research community. This includes collections of behavioral and EEG responses; mobile applications used to conduct research; and experimental, analysis, and visualization tools used to arrive at published results.

Selected software

Wang et al. (in preparation). MatClassRSA: A Matlab Toolbox for M/EEG Classification and Visualization of Proximity Matrices. bioRxiv 194563. doi:10.1101/194563 [preprint] [code]

Nguyen & Kaneshiro (2018). AudExpCreator: A GUI-Based Matlab Tool for Designing and Creating Auditory Experiments with the Psychophysics Toolbox. SoftwareX 7, 328-334. doi:10.1016/j.softx.2018.09.002 [web] [code]

Kim et al. (2012). Tap-It: An iOS App for Sensori-Motor Synchronization (SMS) Experiments. In Proceedings of the 12th International Conference on Music Perception and Cognition and the 8th Triennial Conference of the European Society for the Cognitive Sciences of Music, Thessaloniki, Greece. [pdf] [github]

Selected datasets

Losorelli et al. (2017). NMED-T: A Tempo-Focused Dataset of Cortical and Behavioral Responses to Naturalistic Music. In Proceedings of the 18th International Society for Music Information Retrieval Conference, Suzhou, China. doi:10.5281/zenodo.1417917 [pdf] [data] [code]

Kaneshiro et al. (2015). Object Category EEG Dataset. Stanford Digital Repository. [web]

Kaneshiro et al. (2015). EEG-Recorded Responses to Short Chord Progressions. Stanford Digital Repository. [web]

Kaneshiro et al. (2013). QBT-Extended: An Annotated Dataset of Melodically Contoured Tapped Queries. In Proceedings of the 14th International Society for Music Information Retrieval Conference, Curitiba, Brazil. doi:10.5281/zenodo.1415756 [pdf] [web] [github]