A Musical Controller for Performance, 2003~04

CCRMA, Stanford University

Zune Lee, Moto Abe

Prof. Max Mathews and Bill Verplank

GOAL

Sound Cocktail is an effort to link human gestures and enjoyment to making music while connecting the tastes of a cocktail to those of sound. The goal is to link the acts of making music and making a cocktail, and to produce pleasing music at the same time as we produce a pleasing drink.

DEMO VIDEO: Shaker Demo (mpeg/13.3M), Table Demo (mpeg/4.6M)

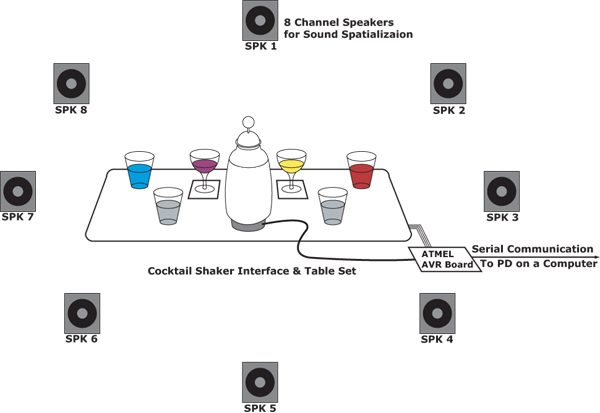

OVERALL STRUCTURE:

Fig00: The overall structure of Sound Cocktail

INTERFACES:

Shaker interface and Table Interface

We instrumented a cocktail shaker and a special board on which we could place the cocktail shaker, glasses, and bottles containing the various ingredients. The cocktail shaker can control musical sound through 6 gestures: rotate, up-down, right-left, front-back, cap-rotate, and cap-press.

The devices and sensors we used include an Atmel ATmega163 AVRmini board, an accelerometer, force-sensing resistors (FSRs), a rotary encoder, etc. For the software, we used C and Pure Data (PD).

The implementation used several sensors, whose signals went into the AVRmini board, were processed, and were sent out to PD using Open Sound Control (OSC). As shown in Fig01, the primary sensor was an accelerometer that we used to detect motion of the cocktail shaker in the X, Y, Z, and rotation dimensions. It was mounted on the side of the shaker, primarily because rotation was easier to detect there than with the accelerometer mounted on the bottom (as we had originally intended). We also incorporated a rotary encoder on the top of the shaker.

Fig01: Cocktail shaker interface (80 * 300 mm)
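To illustrate what sending a sensor value to PD over OSC involves, here is a minimal sketch that encodes a single-float OSC message. The address `/shaker/x` and the function names are hypothetical, and the sketch assumes a 32-bit IEEE-754 `float`; the page does not say how the project framed or transported its OSC packets.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Write s into buf as an OSC string: NUL-terminated and zero-padded
 * so that its length is a multiple of 4. Returns the padded length. */
static size_t osc_put_string(uint8_t *buf, const char *s)
{
    size_t len = strlen(s) + 1;               /* include the NUL */
    size_t padded = (len + 3) & ~(size_t)3;   /* round up to 4 */
    memset(buf, 0, padded);
    memcpy(buf, s, len - 1);
    return padded;
}

/* Encode "<address> ,f <value>" into buf (hypothetical helper).
 * Returns the number of bytes written, or 0 if buf is too small. */
size_t osc_encode_float(uint8_t *buf, size_t cap,
                        const char *address, float value)
{
    size_t need = ((strlen(address) + 4) & ~(size_t)3) + 4 + 4;
    if (need > cap)
        return 0;

    size_t n = osc_put_string(buf, address);
    n += osc_put_string(buf + n, ",f");       /* type tag: one float */

    uint32_t bits;
    memcpy(&bits, &value, sizeof bits);       /* assumes 32-bit float */
    buf[n++] = (uint8_t)(bits >> 24);         /* OSC is big-endian */
    buf[n++] = (uint8_t)(bits >> 16);
    buf[n++] = (uint8_t)(bits >> 8);
    buf[n++] = (uint8_t)bits;
    return n;
}
```

On the receiving side, a PD patch would route on the address and read the float argument.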

The shaker discriminates 6 types of motion, shown in Fig02. The accelerometer on the side of the shaker detects the first 4 motions, whereas the rotary encoder and the button detect the last 2. The position of the accelerometer and its geometry are shown in Fig03.

Fig02: Basic motions of the shaker

Fig03: Sensor position and geometry

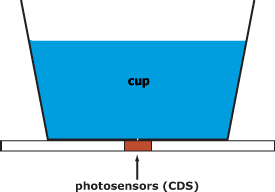

The cocktail board had several photosensors underneath, and the bottles we used for ingredients had black bottoms; thus we could detect when the bartender lifted a bottle off the board and use that to trigger sounds. In addition, there were two spots for glasses with FSRs embedded, so that when a cocktail was poured into the glass, the weight pushed down on the FSR and we could use that to control part of our composition (see Fig04 and Fig05).

Fig04: Photosensor of the cocktail board

Fig05: FSRs on the cocktail board

Fig06: Cocktail Shaker and Table Set
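The bottle-lift trigger described above can be sketched as a threshold detector with hysteresis. This is only an illustration: the threshold values, the assumption that the photosensor reading rises when the bottle is lifted, and the names `bottle_t` and `bottle_update` are not taken from the project.

```c
#include <stdbool.h>

/* Assumed ADC levels; real values would depend on the sensor and lighting. */
#define LIFT_ON_THRESH  600  /* above this, the sensor sees light: bottle lifted */
#define LIFT_OFF_THRESH 400  /* below this, the black bottom covers the sensor */

typedef struct { bool lifted; } bottle_t;

/* Feed one photosensor reading. Returns true only on the reading where
 * the bottle is first seen lifted, so a sample is triggered once per lift. */
bool bottle_update(bottle_t *b, int reading)
{
    if (!b->lifted && reading > LIFT_ON_THRESH) {
        b->lifted = true;
        return true;
    }
    if (b->lifted && reading < LIFT_OFF_THRESH)
        b->lifted = false;
    return false;
}
```

Using two thresholds instead of one keeps a reading that hovers near the boundary from re-triggering the same sample.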

PERFORMANCE

Performance movies: Performance01 (mpeg/27M), Performance02 (mpeg/29.3M)

The performance idea was to have a "bartender" make a cocktail and, in the process, control or trigger various musical sounds to simultaneously create a musical composition.

Performance idea

1) Putting each ingredient in the cocktail shaker in a given order. (Each ingredient is pre-mapped to a particular recorded wave clip.)

2) Mixing all the ingredients together using the gestures mentioned above. These gestures are pre-mapped to particular sound properties in order to control them. For example:

up-down gesture: controlling the volume of the whole sound

front-back gesture: adding an echo effect to a particular sound

right-left gesture: controlling panning of the stereo sound (spatialization)

cap-press: inserting a sound into the playing music

cap-rotate: controlling the sampling rate of a particular sound

rotate: filtering the entire sound (dissolve filter)

3) Pouring the mixed cocktail into a glass to generate a resultant sound.

4) Drinking the cocktail and the sound.

SOFTWARE PROGRAMMING

We programmed the AVR to do as much pre-processing of the data as possible: it read the sensor values, subtracted the DC bias, and applied a low-pass filter to the readings before sending them to the computer. It also detected the onset of shaking (the downstroke) and rotation values, both of which involved comparing the X, Y, and Z values and assigning meanings to them (e.g., when the X value was large but the Y and Z values were small, that would be rotation).
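The pre-processing steps described above could look roughly like the following sketch. The bias level, the thresholds, the filter coefficient, and all names are assumptions chosen for illustration; the actual firmware is not shown on this page. The integer-only one-pole low-pass is one common choice on a small microcontroller, not necessarily the filter the project used.

```c
#include <stdint.h>
#include <stdlib.h>

/* Illustrative constants (assumptions, not values from the project). */
#define ADC_MIDPOINT 512  /* assumed resting (DC bias) level of a 10-bit ADC */
#define LARGE_THRESH 120  /* assumed magnitude that counts as "large" */
#define SMALL_THRESH  40  /* assumed magnitude that counts as "small" */

typedef struct { int32_t state; } lowpass_t;  /* filter memory, scaled by 256 */

typedef enum { GESTURE_NONE, GESTURE_ROTATE, GESTURE_OTHER } gesture_t;

/* Subtract the DC bias so that a resting axis reads about zero. */
int16_t remove_bias(int16_t raw)
{
    return (int16_t)(raw - ADC_MIDPOINT);
}

/* One-pole low-pass filter, y += (x - y) / 4, in integer arithmetic. */
int16_t lowpass_step(lowpass_t *f, int16_t x)
{
    f->state += ((int32_t)x * 256 - f->state) / 4;
    return (int16_t)(f->state / 256);
}

/* Compare the three axes: large X with small Y and Z is read as rotation,
 * following the example in the text. Any other large value is left
 * unclassified here as GESTURE_OTHER. */
gesture_t classify(int16_t x, int16_t y, int16_t z)
{
    if (abs(x) > LARGE_THRESH && abs(y) < SMALL_THRESH && abs(z) < SMALL_THRESH)
        return GESTURE_ROTATE;
    if (abs(x) > LARGE_THRESH || abs(y) > LARGE_THRESH || abs(z) > LARGE_THRESH)
        return GESTURE_OTHER;
    return GESTURE_NONE;
}
```

Each axis would keep its own `lowpass_t`, and `classify` would be called on the filtered, bias-free values.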

The PD patch took in the sensor values and did all the work of starting music samples and controlling the musical properties. We had a long debate about which mappings were appropriate and whether the cocktail shaker should act as a controller, triggering sounds, or as a musical instrument in itself, changing sound/musical properties (amplitude, tempo, etc.). We finally decided on a combination: we used various actions to trigger sound samples, and then used other sensor values to control the musical properties of those samples.

For the mixing portion of the musical composition, we implemented two ideas. The first was to have several parts of a single musical composition (drums/bass/piano) start independently of each other (each was triggered by lifting an ingredient bottle) and, as the cocktail was mixed, gradually slow down or speed up to meld together seamlessly and become one piece of music. We used variable delay lines in PD to accomplish this. Each part started off with a certain amount of added delay, and as the shaker was moved about, the delay slowly decreased until, at a specified number of shakes, it became equal to the built-in delay of the song (making the three parts synchronized).

The second idea was to have four different music segments mix together to create a new musical composition. We used a repetitional filter to accomplish this: it added reverberation and a delay to a sound, controlled to give a certain tone while still retaining the character of the original sound. This allowed us to put certain sounds together and, using the filter, "dissolve" them together such that elements of them could still be heard in the mix but the sound was no longer the same as it had been. Using a combination of band-pass and low-pass filters, we could control the tone of the resulting (reverberated and delayed) sound, and thus gradually make the four different music segments become four different notes of a chord. The filter allowed them to "morph", in a way, retaining some of their original character so that it seemed to the audience they were still part of the sound, even as they were being changed to become chord tones. Here is the PD patch.
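As a rough illustration of the first idea, the delay applied to one part can be written as a function of the shake count. The linear ramp and the names `delay_after_shakes`, `start_ms`, `target_ms`, and `shakes_to_sync` are assumptions; the text only says that the delay decreased slowly and reached the song's built-in delay at a specified number of shakes, and in the project this was done inside the PD patch rather than in C.

```c
/* Delay (in ms) for one part after `shakes` shakes (hypothetical helper).
 * Moves linearly from start_ms to target_ms and holds at target_ms
 * once shakes reaches shakes_to_sync. */
double delay_after_shakes(double start_ms, double target_ms,
                          int shakes, int shakes_to_sync)
{
    if (shakes_to_sync <= 0 || shakes >= shakes_to_sync)
        return target_ms;
    if (shakes < 0)
        shakes = 0;
    double t = (double)shakes / (double)shakes_to_sync;  /* progress, 0..1 */
    return start_ms + (target_ms - start_ms) * t;
}
```

With a different `start_ms` per part and a shared `target_ms`, the drums, bass, and piano would begin out of step and line up once `shakes_to_sync` is reached.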

SOUND SPATIALIZATION

The other major output module to implement was the spatialization model. In each case we started off with 4 sounds and fed them to 8 speakers in some combination. From the beginning we had planned to spatialize them in accordance with some movement of the shaker, to make the performance more interesting and the "mixing" more realistic. Initially, each of the four sounds is set to come from a single, different speaker. The spatialization module had inputs for spread, rotation, front-back and left-right balance, and master volume. The number of shakes increased the spread: the more "mixed" the sound cocktail became, the more the sounds spread out through the room (spread was merely a function of how many speakers a sound was in simultaneously). The rotation control set the rotation of a sound (which speakers it was in; for a spread of three speakers, which three at any given time), making sounds spin around counter-clockwise. Left-right and front-back balance (the relative levels of a sound in the front or back four speakers and the left or right four speakers) was controlled by the equivalent motion of the shaker back and forth or left and right, and volume was controlled by shaker movement up and down.
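The spread and rotation controls can be sketched as a function that fills in per-speaker gains for one sound. This is a simplified illustration under several assumptions: the 8 speakers are treated as a ring indexed 0 to 7 with increasing index taken as counter-clockwise, every active speaker gets the same gain, and the front-back and left-right balance inputs are left out. The name `speaker_gains` and its parameters are hypothetical.

```c
#define NUM_SPEAKERS 8

/* Compute gains for one sound (hypothetical helper).
 * home:     speaker the sound starts from (0..7)
 * spread:   number of adjacent speakers the sound occupies (1..8)
 * rotation: whole-speaker offset around the ring (may be negative)
 * volume:   master volume applied to every active speaker */
void speaker_gains(int home, int spread, int rotation, double volume,
                   double gains[NUM_SPEAKERS])
{
    if (spread < 1)
        spread = 1;
    if (spread > NUM_SPEAKERS)
        spread = NUM_SPEAKERS;

    /* Wrap the starting index into 0..7, also for negative offsets. */
    int start = ((home + rotation) % NUM_SPEAKERS + NUM_SPEAKERS) % NUM_SPEAKERS;

    for (int i = 0; i < NUM_SPEAKERS; i++)
        gains[i] = 0.0;
    for (int k = 0; k < spread; k++)
        gains[(start + k) % NUM_SPEAKERS] = volume;
}
```

Increasing `spread` with the shake count and stepping `rotation` over time would give the spreading and spinning behavior described above.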

In each case, we used the ingredient bottles to trigger the start of the samples. The different segments started from different speakers. The cocktail shaker motion was then used to change amplitude and spatialization: as just described, the signals were moved around the listener as the cocktail shaker was moved. This was to reinforce the idea of mixing music together and to make the melding of the different samples smoother and more gradual. Finally, the rotary encoder was used to control the final music mixing.

Taking everything into account, however, we accomplished our goal. Our performance showcased Zune Lee mixing two cocktails and producing two pieces of music as well: one with separate out-of-sync instruments slowly mixing together to become a piece of music, and the other with separate musical segments dissolving into a unified chord. This was our aim: creating a cocktail of liquid and music at the same time, thus making an enjoyable drink and producing a musical representation of the experience, a party where the bartender serves sound cocktails.

(C) 2003~2004 Zune Lee, Abe Moto. All Rights Reserved.