220C XIANG ZHANG

-

1. Acoustics Maps

The goal is to create an iPhone application for the measurement of room acoustics -- sort of a Google Earth meets WeatherBug (set up a web-accessible weather station at your elementary school) for creating an acoustic map of the world. A set of “calibrated” balloons would be packaged with a microphone having an iPhone interface, and used to record balloon pops, along with location information and images of the space being measured. The recorded balloon pops would then be converted into impulse responses of the space. The idea would be to create acoustics maps of Stanford, etc. Audio clips could also be recorded to create soundscape maps. A web interface could process uploaded sounds based on the estimated impulse responses.

-

2. Stanford Reverberant Spaces

Make impulse-response measurements and acoustics models of Memorial Church, the Cantor Museum lobby, or the Hoover Institute lobby. Both Memorial Church and the Hoover Institute lobby are domed spaces. The Cantor Center has a marble-revetted interior. The measurements and models will be used to process music recorded at CCRMA. To assess the quality of the computer acoustic models, we will repeat the recorded performance in the actual space.

-

3. Dry Recording of Music for Reverberant Spaces

Explore methods to capture dry recordings of pieces intended for performance in reverberant spaces. The idea is to make recordings with reverberant monitoring fed back via headphones and analyze differences in performance under different acoustic feedback conditions, e.g., short or long T60s.
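For reference, T60 is the time it takes the reverberant energy to decay by 60 dB. As a minimal sketch (not part of the proposal itself), one common way to estimate it from a measured impulse response is Schroeder backward integration followed by a straight-line fit on the decay curve. The function name `estimate_t60` and the -5 dB to -25 dB fit range are assumptions chosen for illustration.

```python
import math


def estimate_t60(ir, sample_rate, start_db=-5.0, end_db=-25.0):
    """Estimate T60 in seconds from an impulse response (list of samples).

    Uses Schroeder backward integration, fits a line to the energy decay
    curve between start_db and end_db, and extrapolates to -60 dB.
    The fit range is an assumption, not something the proposal specifies.
    """
    # Energy decay curve: energy remaining from each sample to the end.
    edc = [0.0] * len(ir)
    running = 0.0
    for i in range(len(ir) - 1, -1, -1):
        running += ir[i] * ir[i]
        edc[i] = running
    total = running
    if total <= 0.0:
        raise ValueError("impulse response has no energy")

    # Collect (time, level in dB) points that fall inside the fit range.
    points = []
    for i, e in enumerate(edc):
        if e <= 0.0:
            continue
        level_db = 10.0 * math.log10(e / total)
        if end_db <= level_db <= start_db:
            points.append((i / sample_rate, level_db))
    if len(points) < 2:
        raise ValueError("not enough decay range to fit a slope")

    # Least-squares slope of level (dB) against time (s).
    n = len(points)
    mean_t = sum(t for t, _ in points) / n
    mean_d = sum(d for _, d in points) / n
    num = sum((t - mean_t) * (d - mean_d) for t, d in points)
    den = sum((t - mean_t) ** 2 for t, _ in points)
    slope_db_per_s = num / den

    # Time needed to fall by 60 dB at the fitted decay rate.
    return -60.0 / slope_db_per_s
```

Comparing estimates like this across monitoring conditions would give a number to attach to "short" and "long" reverberation.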

-

4. Web-Based Acoustics Processing

Develop a web interface and signal processing to allow site visitors to upload recorded material and have it processed according to desired reverberation characteristics. In accomplishing this task, it is necessary to account for the original acoustic characteristics imprinted on the source material. The idea is to shorten the desired impulse response according to reverberation times estimated from the source. In this way, the processed output will acquire the acoustics of the targeted new space.
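One way to picture the shortening step: if the desired impulse response decays with reverberation time `t60_current` and a shorter `t60_new` is wanted, multiplying it by an exponential envelope steepens the decay by the difference in dB per second. The sketch below assumes both times are already known. How `t60_new` should be derived from the reverberation time estimated from the source is not specified here and is left to the caller. The function and parameter names are hypothetical.

```python
def shorten_impulse_response(ir, sample_rate, t60_current, t60_new):
    """Return a copy of ir whose decay is steepened from t60_current to t60_new.

    ir is a list of samples; both T60 values are in seconds.
    This is an illustrative sketch, not the project's actual processing chain.
    """
    if not 0.0 < t60_new <= t60_current:
        raise ValueError("t60_new must be positive and no longer than t60_current")

    # A response with reverberation time T decays at 60 / T dB per second,
    # so the envelope must supply the difference between the two rates.
    extra_db_per_s = 60.0 / t60_new - 60.0 / t60_current

    shortened = []
    for n, sample in enumerate(ir):
        t = n / sample_rate
        gain = 10.0 ** (-extra_db_per_s * t / 20.0)  # dB to amplitude
        shortened.append(sample * gain)
    return shortened
```

For example, shortening a response with a 2 s reverberation time to 1 s applies an additional 30 dB of attenuation per second on top of the response's own decay.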

PROJECT 1

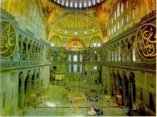

ICONS OF SOUND:

HAGIA SOPHIA AND THE AESTHETICS OF THE SEA

Unexpected transmission delay is unwelcome in any kind of music. From a perceptual perspective, however, our nervous system tends to adjust itself to compensate for a constant external delay caused by the system or environment.

Previous experiments indicate that a delay within 30 ms is perceptually acceptable for people to cooperate musically at a basic level. For speech and conversation, however, the brain can accept delays from 50 ms (the threshold for perceptual detection of delay) up to several seconds, and a delay within 200 ms is barely noticeable in very long-distance conversation.

For musical communication, especially live performance or recording involving more than one person or sound source, delay is a serious issue at every terminal that involves human beings. The purpose of this project is to use this unexpected delay as a perceptual resource, combined with people’s physical acoustic reactions (such as clapping, tapping, or vocal sounds), to generate music directly from their reactions to the delay of the system. This approach opens the way to a new musical style. The multi-person setup is also an entertaining way for ordinary participants (without trained hearing or audio knowledge) to experience the effect of delay.

This project uses up to five participants. At each point there is a set of devices: one microphone, one headphone, and one camera (optional). The main terminal is set up on the stage to monitor all the participants, including their live performance, their reactions, and the delayed sound they generate. The terminal is also responsible for sending a steady tempo signal to each person. The participants are isolated from one another in different rooms. What each one hears is the sound signal from each of the other participants. Each participant tries to follow the overall tempo or generates their own acoustic sound. Some of the sounds are processed by the terminal before being sent to the audience, and some are sent to the audience directly. Because the amount of delay varies from person to person, and also from the terminal (simulating people located at very different, very long distances from one another), it becomes really difficult for the participants to compensate psychologically as the delay grows longer. However, instead of just compensating for the delay, they are able to find something steady in it. The result is a piece of music made from the delay.
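The monitoring scheme described above can be sketched as a bank of delay lines, one per pair of participants. This is an offline illustration on lists of samples rather than real-time audio, and it assumes a hypothetical table `delays_ms[source][listener]` giving the delay on each path. The function name `monitor_mix` is likewise an assumption.

```python
def monitor_mix(signals, delays_ms, listener, sample_rate):
    """Return what one participant hears: every other participant, delayed.

    signals    -- list of sample lists, one per participant
    delays_ms  -- delays_ms[source][listener] is the path delay in milliseconds
    listener   -- index of the participant whose headphone feed is computed
    """
    length = max(len(s) for s in signals)
    mix = [0.0] * length

    for source, signal in enumerate(signals):
        if source == listener:
            continue  # participants hear the others, not themselves
        delay_samples = round(delays_ms[source][listener] * sample_rate / 1000.0)
        for n, sample in enumerate(signal):
            m = n + delay_samples
            if m >= length:
                break  # delayed samples past the end of the buffer are dropped
            mix[m] += sample
    return mix
```

With three participants who all clap at time zero and path delays of 10 ms and 20 ms into participant 0, that participant hears two separate claps rather than one, which is the kind of rhythmic material the piece is meant to build on.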

Project 2

The beauty of delay:

Delay composing implementation for multiple terminal computer music