Research Seminar in Computer Generated Music

Spring 2019

Done with quarter. Enjoy your summer.

Started putting together the website for the demo. Created a basic version with filtering and video stats.

Worked with ffmpeg to put it all together. Created one movie with a soundtrack from the trials of a single condition/stage.
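A rough sketch of how a folder of numbered frames and a wav file could be muxed into one movie with ffmpeg. The frame pattern, frame rate, codecs, and the helper name `build_ffmpeg_command` are all assumptions for illustration; the log does not record the actual command used.

```python
def build_ffmpeg_command(frame_pattern, wav_path, out_path, fps=10):
    """Build an ffmpeg argument list that joins numbered images with a soundtrack.

    All parameter values here are hypothetical; adjust them to the real files.
    """
    return [
        "ffmpeg", "-y",              # overwrite the output file if it exists
        "-framerate", str(fps),      # input frame rate for the image sequence
        "-i", frame_pattern,         # e.g. "frames/frame_%04d.png"
        "-i", wav_path,              # the sonification soundtrack
        "-c:v", "libx264",           # H.264 video (assumed codec choice)
        "-pix_fmt", "yuv420p",       # widely playable pixel format
        "-c:a", "aac",               # AAC audio (assumed codec choice)
        "-shortest",                 # stop at the end of the shorter stream
        out_path,
    ]

# Usage (requires ffmpeg on the PATH):
#   import subprocess
#   cmd = build_ffmpeg_command("frames/frame_%04d.png", "trial.wav", "trial.mp4")
#   subprocess.run(cmd, check=True)
```

Building the command as a list rather than a shell string avoids quoting problems when paths contain spaces.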

Wrote a script to systematically generate the images, save them to a folder, and generate the wav file to go with them. Played around with the idea of generating 64-channel sound, but didn't get a chance to test it and am running out of time.

Generated the wav file by inverse STFT on the spectrogram. Sounds ok. Wrote a Python script to generate a single wav file for all trials of a subject.
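The inverse STFT step can be sketched as a windowed overlap-add, shown here with NumPy and the standard `wave` module. This is a minimal illustration, not the script from this project. It assumes a complex one-sided STFT (magnitude and phase) and a Hann window and hop that match the forward transform. If only magnitudes were stored, a phase would have to be chosen or estimated first, which this sketch does not do. The function names are hypothetical.

```python
import wave
import numpy as np

def istft_overlap_add(spec, hop, window=None):
    """Invert a complex one-sided STFT of shape (n_bins, n_frames) by overlap-add."""
    n_bins, n_frames = spec.shape
    n_fft = 2 * (n_bins - 1)
    if window is None:
        window = np.hanning(n_fft)  # assumed to match the analysis window
    out = np.zeros(hop * (n_frames - 1) + n_fft)
    norm = np.zeros_like(out)
    for t in range(n_frames):
        frame = np.fft.irfft(spec[:, t], n=n_fft)
        start = t * hop
        out[start:start + n_fft] += frame * window
        norm[start:start + n_fft] += window ** 2
    # Divide by the summed squared window; guard samples where it is ~0.
    return out / np.maximum(norm, 1e-8)

def write_wav(path, signal, rate):
    """Peak-normalize a float signal and write it as 16-bit mono PCM."""
    peak = np.max(np.abs(signal))
    scaled = signal / peak if peak > 0 else signal
    pcm = (scaled * 32767).astype("<i2")  # little-endian int16
    with wave.open(path, "wb") as f:
        f.setnchannels(1)
        f.setsampwidth(2)
        f.setframerate(rate)
        f.writeframes(pcm.tobytes())
```

To produce one file for all trials of a subject, the per-trial signals could be joined with `np.concatenate` before calling `write_wav`.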

Created a preso for class presentation using single samples. Link

Wrote Python scripts for extracting and sonifying single samples of the spectrogram.

Gave a lightning presentation about ML and AI in class. Link

Looked into using a single-sample waveform as sonification. The results were not great, but the idea is still worth pursuing.

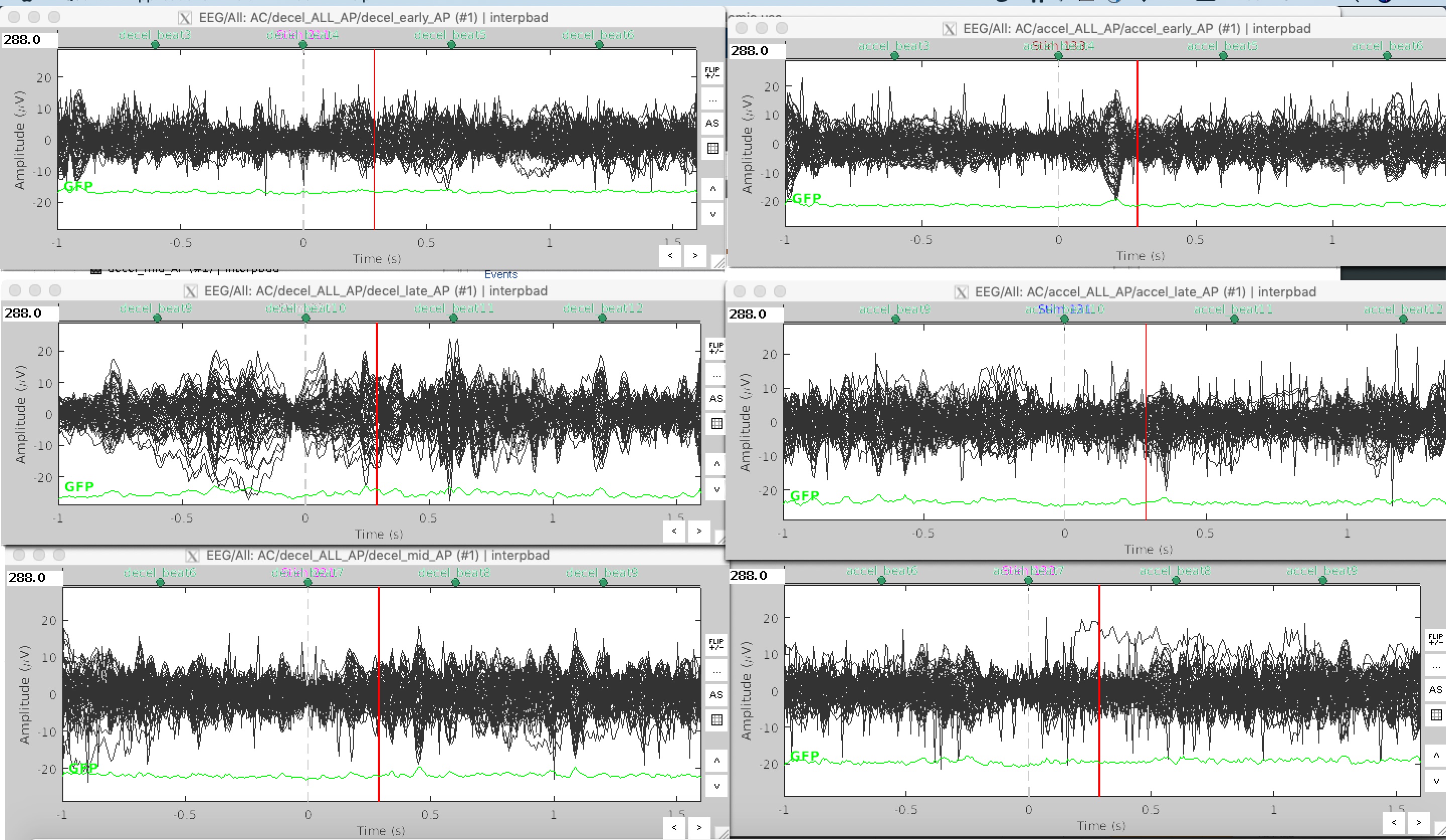

Checking out some of the timeseries graphs for potential pre-processing and sonification options.

Switching to a sonification approach for 220C. While the classifiers are churning away, there might be interesting crossovers to BCI if I can start with some sonification. On an unrelated note, the new computers for curry and BCI are here and set up in the EEG lab.

Hand-tuned some of the CNN layers and reran the same intra/inter-subject runs. Not much improvement, but again intra does better than inter.

Spent some time on intra- vs. inter-subject classification. As expected, intra-subject performs much better than inter-subject, supporting the hypothesis that EEG data is more similar across trials from a single subject than across subjects.
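The difference between the two evaluation setups comes down to how trials are split. A minimal sketch, assuming each trial carries a subject label: inter-subject holds out every trial of one subject, while intra-subject holds out part of each subject's own trials. The function names and the 25% hold-out fraction are illustrative assumptions, not the splits actually used here.

```python
from collections import defaultdict

def inter_subject_splits(subjects):
    """Leave-one-subject-out: train on all other subjects, test on the held-out one."""
    for held_out in sorted(set(subjects)):
        train = [i for i, s in enumerate(subjects) if s != held_out]
        test = [i for i, s in enumerate(subjects) if s == held_out]
        yield held_out, train, test

def intra_subject_splits(subjects, test_fraction=0.25):
    """Within each subject, hold out the last fraction of that subject's trials."""
    by_subject = defaultdict(list)
    for i, s in enumerate(subjects):
        by_subject[s].append(i)
    for s in sorted(by_subject):
        idx = by_subject[s]
        n_test = max(1, int(len(idx) * test_fraction))
        yield s, idx[:-n_test], idx[-n_test:]

# Example with two hypothetical subjects, four trials each.
subjects = ["a"] * 4 + ["b"] * 4
for name, train, test in inter_subject_splits(subjects):
    print("inter", name, train, test)
for name, train, test in intra_subject_splits(subjects):
    print("intra", name, train, test)
```

In the inter-subject case the classifier never sees the test subject during training, which is one plausible reason for its lower accuracy.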

Results so far on traditional machine learning models (SVM, RandomForest) have been very poor and comparable to a random classifier (~35% for 3-class and ~13% for 9-class; chance would be about 33% and 11% with balanced classes). An 18-hidden-layer CNN with max pooling gives ~20% accuracy on 9-class with PCA data and no pre-processing.

Next steps are to pre-process the PCA data to restrict to Beta band (13-30 Hz) and tighten the time window. Once the model has been trained with this data, we can do further analysis of the hidden layers to identify salient features.
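One simple way to try the planned pre-processing is a brick-wall band-pass via FFT masking followed by a time crop. This is only a sketch under stated assumptions: the sampling rate, the window bounds, and the function names are hypothetical, and a proper filter design (for example a Butterworth band-pass) may behave better at the band edges than zeroing FFT bins.

```python
import numpy as np

def bandpass_fft(x, fs, low=13.0, high=30.0):
    """Zero all FFT bins outside [low, high] Hz along the last (time) axis."""
    x = np.asarray(x, dtype=float)
    n = x.shape[-1]
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectrum = np.fft.rfft(x, axis=-1)
    spectrum[..., (freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spectrum, n=n, axis=-1)

def crop_window(x, fs, t_start, t_end):
    """Keep only samples between t_start and t_end (seconds) on the last axis."""
    i0, i1 = int(round(t_start * fs)), int(round(t_end * fs))
    return x[..., i0:i1]

# Example: 2 s of synthetic data at an assumed 250 Hz sampling rate.
fs = 250
t = np.arange(2 * fs) / fs
signal = np.sin(2 * np.pi * 5 * t) + np.sin(2 * np.pi * 20 * t) + np.sin(2 * np.pi * 60 * t)
beta = bandpass_fft(signal, fs)             # only the 20 Hz component remains
windowed = crop_window(beta, fs, 0.5, 1.0)  # hypothetical 0.5 s to 1.0 s window
```

Both functions operate on the last axis, so they also accept arrays shaped (trials, components, time).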

Presented the 220C Project Idea of using EEG data to generate beats. Currently it is an offline decoding/classification and synthesis project. Short preso

This work builds on the dataset from Emily Graber's PhD Dissertation.