ANTIPHON

NewArt.City Link: https://newart.city/show/antiphon

Video demo: https://vimeo.com/528500131

ANTIPHON is a networked, interactive, real-time audiovisual performance environment exploring the conceptual framing of antiphonal music. The instruments are networked and act as volumetric sound sculptures built to be performed live. They are controllable via a remote server, creating a networked, highly accurate audiovisual representation distributed across all participants' web browsers.

ANTIPHON is a virtual installation and virtual musical instrument. It is a reaction to this current moment in time. With the loss of live music venues, can we once again feel connected through live virtual performance? There has been a flourishing of online platforms in the last year in direct response to the pandemic. As an exploration of these online spaces, ANTIPHON has been built in several of these venues, including Mozilla Hubs, NewArt.City and native three.js. The installation presented here provides a contrast to Hubs, in the hope of prompting a scholarly conversation about the strengths and weaknesses of these types of platforms.

At its core, ANTIPHON is an exploration of the poetics of networked audio and the infrastructure of the internet. Seeking to understand whether it is possible to feel connected in a virtual performance environment, ANTIPHON is centered around a volumetric sound sculpture/instrument controlled via Max in real time and synchronously broadcast across every audience member's browser. The virtual chamber creates an environment where audience members can gather to watch the performance of a 3D audiovisual composition. Building on years of research before the pandemic, these technologies can be adopted for unique experiences during this period. ANTIPHON, 'the opposite voice', is ultimately a conceptual work that moves data through the internet, engaging a distributed array of web browsers as a decentralized, synchronized, geographically dispersed synthesizer.

INSTRUCTIONS

Viewers can see ANTIPHON running live at the following link: https://newart.city/show/antiphon. The work will produce a real-time sound sculpture for the event in 3D space at the center of the environment. Users can move with the standard WASD controls and press the spacebar to jump.

Extrapolations on a Vega Banjo

Hubs Link: https://hubs.mozilla.com/scenes/pY8Hdiu

Video demos: https://youtu.be/YngvGSoawAc, https://www.instagram.com/p/CPHTpOTAgKY/

I made a banjo. You can walk on the banjo. Hear the banjo. See the banjo. Experience existence in the same plane as the banjo.

PROGRAM NOTE

A musician knows every inch of their instrument. They hold and caress their piece of machinery every day, and spend untold amounts of time sitting with it, touching it, and feeling the surface of it against their skin.

Extrapolations on a Vega Banjo takes another look at this soulful, intimate connection between performer and artifact by shrinking the visitor down to interact with the instrument from a unique vantage point. The work is a re-exploration of the instrument: its physical proportions are extrapolated to unearthly sizes, and its sounds are recorded, similarly extruded, and placed around the virtual instrument at the positions from which they originated. Functionally, the piece operates as much as a sound walk as an installation, as the work empowers the performer to explore, create, and have artistic agency over their own musical experience, in addition to presenting sonic and visual materials. As with many of my previous drone-based works, the material in this piece serves a dual purpose, providing primary musical material while also serving as a mirror in which to examine the mediating interface. In this case, it encourages the visitor-performer to experiment with the movement capabilities found within the Mozilla Hubs environment.

While a physical manifestation of this work in principle might involve a gargantuan real-to-life recreation of a Vega Banjo, in preparing this virtual reality installation, I chose to lean heavily into aesthetic decisions that more closely aligned with the medium.

Overall, this virtual reality installation explores issues of connection and familiarity, prompts a reexamination of those things which you hold close, and empowers visitors to create and mediate their own sonic experience.

Immediations

Hubs Link: https://hub.link/KESsngN

Immediations is a participatory XR installation exploring the interface between artificially constructed online environments and technologically mediated real-world interactions. Designed for a single participant at a time, the Hubs virtual environment sets the stage for a mysterious Zoom video encounter with a live and autonomously sounding large gong. Audio and video input from the user combines with reembodied sound techniques to create an exploratory and ritualistic experience. Headphones are recommended. While the built-in computer or laptop microphone can be used, participants with a higher quality microphone or headset will have a richer experience. Participants are requested to adjust the audio and video settings within the Zoom application.

Immediations is an exploration into the nature of technology as a reflection of our selves and a non-human “other.” The tools we have created, whether a simple musical instrument created from a hanging piece of metal or the complex electronic infrastructure of virtual and digital environments, function simultaneously as extensions of our nature, active constructors of our identities, and seemingly autonomous independent entities. This installation navigates these myriad and contradictory forces by creating a virtual environment in which a lone person navigates to find a connection to a geographically distant musical instrument. The instrument, a large gong, responds with transformations of the participant’s sound and image, tailoring them to its own sonic and visual properties. This response returns across the web to the human source, the relationship evolving, driven both by the participant’s input and the gong’s independent trajectory.

There is no time limit to the installation; participants may engage as long as they wish.

The gong responses are primarily a form of reembodied sound, an electroacoustic technique in which surface transducers are used to transmit sound into the bodies of resonant objects. In this installation, sounds from the participant are captured, analyzed, resynthesized, and projected into the instrument. The sounds from the gong are then picked up with a microphone and mixed with spatialized granular synthesis using a rotating set of buffers capturing audio from Zoom. A set of generative algorithms determines the type of response and the varying mix of synthesis techniques.
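The "rotating set of buffers" feeding granular synthesis can be illustrated with a minimal Python sketch. The installation itself was programmed in Max, so this is not the actual patch: the class name, buffer count, buffer length, and Hann grain window are all assumptions chosen for illustration.

```python
import math
import random


class RotatingBuffers:
    """Hypothetical set of equal-length buffers; each capture overwrites the oldest."""

    def __init__(self, count, length):
        self.buffers = [[0.0] * length for _ in range(count)]
        self.write_index = 0  # buffer that receives the next capture

    def capture(self, samples):
        """Store one block of incoming audio, then rotate to the next buffer."""
        buf = self.buffers[self.write_index]
        n = min(len(samples), len(buf))
        buf[:n] = samples[:n]
        self.write_index = (self.write_index + 1) % len(self.buffers)

    def grain(self, size, rng=random):
        """Return a Hann-windowed grain (size >= 2) from a randomly chosen buffer."""
        buf = rng.choice(self.buffers)
        start = rng.randrange(0, len(buf) - size + 1)
        window = [0.5 - 0.5 * math.cos(2 * math.pi * i / (size - 1))
                  for i in range(size)]
        return [s * w for s, w in zip(buf[start:start + size], window)]
```

Because captures rotate through the set, grains are always drawn from a mix of the most recent and slightly older input, which is one way a response can blend present and past sound.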

Likewise, video from Zoom is transformed and projected onto the surface of the gong, modified by an evolving set of algorithms. Both audio and video programming were done in Max.

The gong, a 38” Paiste Sound Creation Earth Gong, is housed in the Arts Department at Rensselaer Polytechnic Institute.

INSTRUCTIONS

This installation is in two parts:

• A Hubs environment which the participant is free to explore. Upon joining the room, if the “People” tab in the upper right corner shows a count greater than 1, someone else is using the installation: please exit the room and return later. This installation is designed for one participant at a time.

• Zoom: Within the Hubs environment is a Zoom meeting link. Upon clicking the link, the user is taken out of Hubs to their own Zoom application and asked to connect to a meeting. For best results, please use the Zoom application (rather than browser interface) and adjust the settings to those listed below. Once the meeting has begun, sounds made by the participant will generate the installation response.

The participant can end the encounter simply by clicking “Leave” in Zoom. Hubs will still be available in the browser – please remember to leave the Hubs room or close your browser window when finished.

Installation requirements/suggestions:

Hubs: Firefox is recommended, as it supports stereo sound in Hubs (not all browsers do).

Zoom: Participants should download the Zoom application, rather than use the browser interface. No account is necessary. The correct Zoom settings will aid in a better experience in the interactive portion of the installation. When Zoom is launched, participants will need to adjust the following settings:

Audio settings:

Zoom audio settings are accessible through the lower left corner of the main Zoom window. The following items should be checked:

• Show in-meeting option to “Turn On Original Sound” from microphone.

• High-fidelity music mode

• Stereo audio

• (Echo cancellation should be unchecked)

Within the main window, click “Turn On Original Sound” in the upper left corner.

Video settings:

Be sure your camera is on when in the Zoom environment. It is recommended that you “hide self view” – this can be accessed by clicking the three dots in the upper right corner of your video image. “Hide self view” allows you to only see the other participants.

This information is also displayed within the Hubs environment.

More complete information on Zoom settings can be accessed here: https://tinyurl.com/fbphsnfz

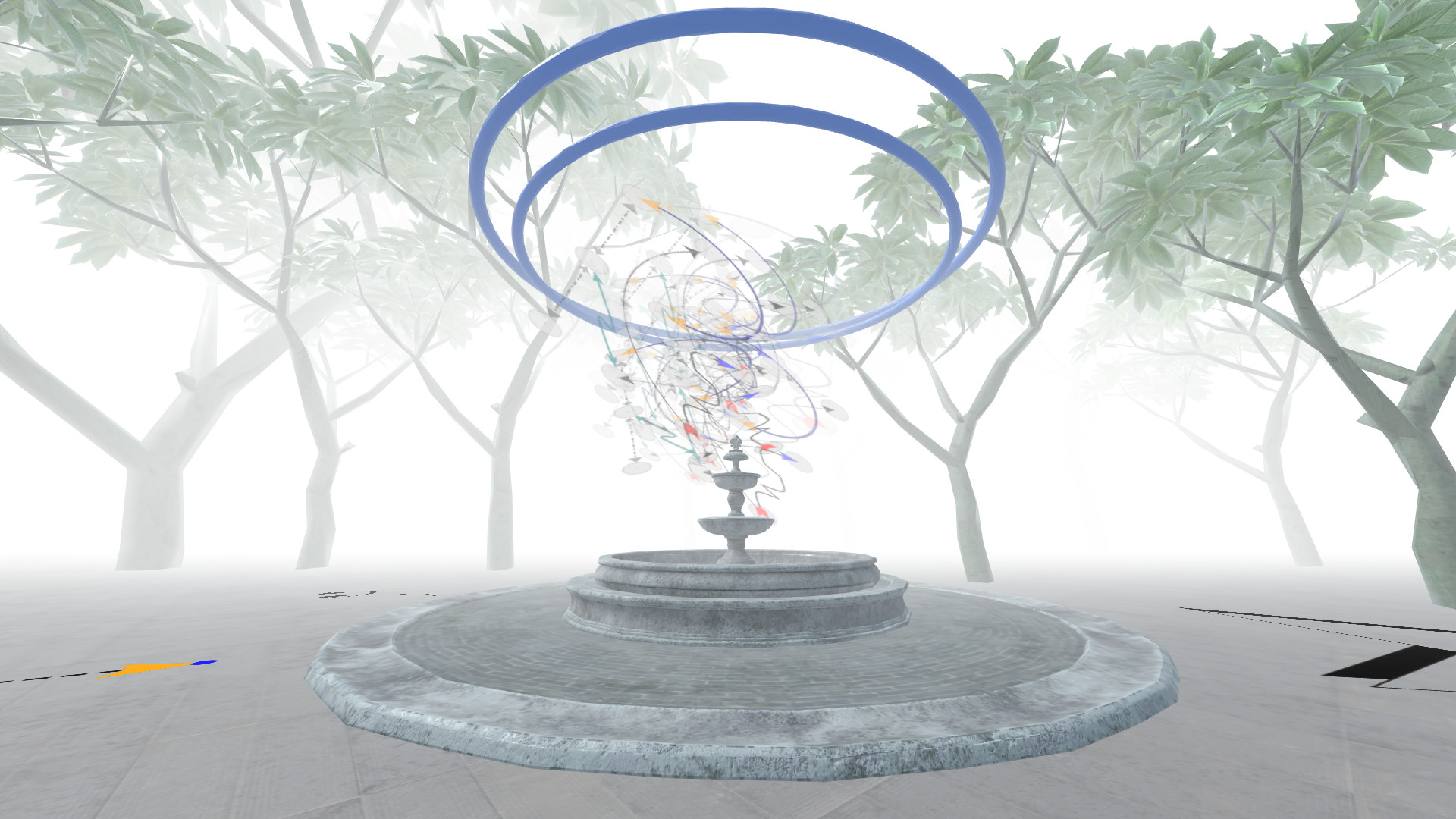

FOUNTAIN

Hubs Link: https://hubs.mozilla.com/EYbxYFn/fountain

FOUNTAIN is an immersive sound installation built for Mozilla Hubs. Generative audio and phase patterns are installed across a virtual forest environment, creating an evolving soundscape for visitors to explore. In the center of this space is a fountain. Instructions prompt visitors to contribute their own sounds to the fountain during 'gallery hours' in response to the soundscape, creating a participatory sound event akin to a Pauline Oliveros sonic meditation or Cageian 'musicircus'. This environment will be activated at select times throughout the ICMC conference with live avatar performances responding to the sonic environment.

Dystopia Trilogy

Hubs Link: https://youtu.be/HuoUVKb45Tk

Video demo: https://youtu.be/HuoUVKb45Tk

Dystopia Trilogy is a WebVR composition consisting of three movements inspired by the author's experiences during the seven months of social restriction in 2020. These memories are interpreted and developed as narrative ideas and divided into three major pandemic episodes: infectious period, lockdown, and new normal. Three virtual environments were created to simulate the actual events and manifest the narrative notions, using sound recordings and 3D scanning assets made during and after the lockdown. A specific compositional strategy that utilizes Hubs' spatial sound feature was applied within this piece by placing the pre-composed materials at particular locations with specified distance values. Each sound is therefore only audible within a given range of the user's location in the virtual environment.

PROGRAM NOTE

Dystopia Trilogy is a WebVR composition consisting of three movements inspired by the author's experiences during the seven months of social restriction in 2020. These memories are interpreted and developed as narrative ideas and divided into three major pandemic episodes: infectious period, lockdown, and new normal.

Three virtual environments were created to simulate the actual events to manifest the narrative notions, in which sound recordings and 3D scanning assets made during and after the lockdown are utilized to construct the virtual space that functions as memory lines connecting between the past and the present over the digital domain.

A symbolic approach is adopted to contextualize the visual elements in conjunction with the narrative idea. The first movement displays videos of human figures and animated COVID-19 models, whereas in the second movement, 3D models of human body parts (legs and hand) are arranged in a square to restrict the user's locomotion. At the environment's center rests a human heart model that beats at random and rotates endlessly, symbolizing a feeling of desperation. The third movement is built from distorted 3D scans of objects around Melbourne made after the lockdown. These decimated 3D objects portray the changed ways of life under the new normal and, together with video footage, offer a twofold visual presentation.

A specific compositional strategy that utilizes Hubs' spatial sound feature was applied within this piece. Instead of fixed-structured music, pre-composed materials are placed at particular locations with specified distance values, meaning each sound is only audible within a given range of the user's location in the virtual environment. The musical structure and experience therefore become subjective for each user, depending on their interaction (spatial location) when exploring the digital space.
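How a "specified distance value" limits audibility can be sketched with the distance-attenuation formulas defined for the Web Audio API panner. This assumes Hubs' spatial audio follows those models; the piece's actual settings are not given in the note, so the function name and the parameter values in the usage note below are placeholders.

```python
def distance_gain(distance, model="inverse", ref_distance=1.0,
                  max_distance=10000.0, rolloff=1.0):
    """Gain applied to a source heard from `distance` units away.

    Follows the Web Audio API distance-model formulas; all parameter
    values used with this sketch are illustrative assumptions.
    """
    if model == "linear":
        # Clamp to [ref_distance, max_distance], then fade linearly.
        d = min(max(distance, ref_distance), max_distance)
        return 1.0 - rolloff * (d - ref_distance) / (max_distance - ref_distance)
    if model == "inverse":
        d = max(distance, ref_distance)
        return ref_distance / (ref_distance + rolloff * (d - ref_distance))
    if model == "exponential":
        d = max(distance, ref_distance)
        return (d / ref_distance) ** (-rolloff)
    raise ValueError(f"unknown distance model: {model}")
```

With the linear model, a reference distance of 1, a maximum distance of 5, and a rolloff of 1, the gain is 0.5 at a distance of 3 and 0.0 at a distance of 5 or more, so the sound is heard only inside that radius. The inverse and exponential models fade toward silence without ever reaching it.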

INSTRUCTIONS

- You can visit each environment through the links displayed on the landing page

- To return to the landing page, you will find a direct link at the corner of each environment

- You can navigate by pressing WASD on the computer keyboard and control the camera view with mouse movements

- Please use headphones as audio is binaurally rendered

Spatialization IPEM, Being Hungry

Hubs Link: https://hub.link/KNpAnqj

Video: https://youtu.be/ZGhWqs9JQho

In 2020, Tineke De Meyer and Duncan Speakman released their album ‘The House was Alright’. All musical works on the album were originally mixed in stereo. For ICMC 2021, they established a collaboration with the IPEM research team of Ghent University to recreate one particular work from the album, ‘Being Hungry’, as a 3D spatialized audio version to be presented in a multimodal Mozilla Hubs environment.

Aural Weather Étude

Hubs Link: https://hubs.mozilla.com/oW8dKFj/aural-weather-etude

The Aural Weather Étude is a sound installation that explores the spatial dimension as the primary means of organizing music and the devolution of narrative agency to the audience, inspired by the wall drawings of Sol Lewitt and the work of Bernhard Leitner. The work is based on a set of pitches and timbres that move rapidly back and forth between points in space. We chose eight spatial points that, in the installation setting, coincide with irregularly positioned loudspeakers. Each point is numbered in clockwise order. A pitch, a panning trajectory, and a slowly modulating additive timbre, with occasional intervention by processed human speech, are associated with each pairing of an even and an odd point. The sound sources move at dynamically changing rates ranging from the upper subsonic to the audible, resulting in a spatialization matrix of 32 sources into 8 loudspeakers, panning at the audio rate.
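The idea of a source moving back and forth between two loudspeaker points at a given rate can be sketched as follows. The work itself is implemented in Kronos; this Python sketch is only an illustration, and the sinusoidal trajectory, the equal-power panning law, and all function names are assumptions rather than the authors' method.

```python
import math


def pan_position(t, rate_hz):
    """Position in [0, 1] of a source oscillating between two points (0 and 1)."""
    return 0.5 - 0.5 * math.cos(2 * math.pi * rate_hz * t)


def pair_gains(position):
    """Equal-power gains for the two loudspeakers of a pair."""
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)


def render_pair(pitch_hz, rate_hz, sample_rate, num_samples):
    """Render a sine tone panned between two loudspeakers; returns two channels."""
    first, second = [], []
    for n in range(num_samples):
        t = n / sample_rate
        tone = math.sin(2 * math.pi * pitch_hz * t)
        gain_a, gain_b = pair_gains(pan_position(t, rate_hz))
        first.append(tone * gain_a)
        second.append(tone * gain_b)
    return first, second
```

When `rate_hz` rises from a few hertz into the audible range, the panning stops being heard as movement and instead acts as amplitude modulation on each loudspeaker, which is one way to understand "panning at the audio rate".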

PROGRAM NOTE

The Aural Weather Étude is a sound installation, the result of our collaboration. The work deals with the matter of installing atmospheres in public space in a generative manner, implemented in the Kronos signal processing language. We started from the instructions given by Sol Lewitt for his Wall Drawing #118, and interpreted them in the context of multi-channel audio and rapid spatial modulation. In our previous projects involving Urban Sonic Acupuncture strategies, panning trajectories were intentionally avoided, as they seem to imply the existence of a sweet spot or an advantaged point. There, we chose to give up panning altogether, handing agency in spatialization to the visitor/listener, expressed by walking or choosing a listening spot. The present project represents a case in which panning trajectories can be interesting in generating sonic fields, while still not laying exclusive claim to the realm of spatialization. Rapid panning trajectories can be valuable in modulating the space and its perceived atmosphere while delimiting sonic areas and distinctly pinpointing the various intersections of panning trajectories.

We set out to develop a system that can be adapted to a wide range of spatial contexts spanning from audio installations in gallery spaces or concert halls to urban scale sonic interventions. We were interested in a scalable process that can work regardless of the sonic material used, indoors or outdoors, a process that is modular enough to be calibrated in-situ. We looked for inspiration in previous examples of such systems in the visual arts and in the work of sound installation artists. The Wall drawings by Sol Lewitt and the work of Bernhard Leitner, who wanted to draw in space with sound, became the main sources of inspiration to the present work.

INSTRUCTIONS

When you enter the virtual space, the journey starts from outside the installation. Head towards the stairs and enter the main room. Move around freely and slowly (there is a speed modifier in the preferences) and listen while moving from as many spots as possible. You can also go upstairs and listen to the installation from a distance.

Σum rooms

Hubs Link: https://hub.link/oUL9HqS

This collaborative electroacoustic work takes three sonic works and places them in a singular, audible space. Each of these works is not only a complete composition but a sonic entity that engages with the other sonic bodies in this audible plane. These works move through a three-dimensional sound space and interact with one another, make space for one another, and overshadow one another.

Each of these works was composed independently of the others to be a standalone piece, not simply a component of one larger scale work, therefore creating a poly-piece. Each composer was assigned to explore different frequency ranges at different points in the work -- exploring bandwidth constructively and destructively, creating a sonic virtual space. This work, therefore, explores the sonic domain as an extended reality in which pieces can be composed and placed in space, as well as co-exist with one another, just in the same way three people can occupy a room. Therefore we are creating multiple rooms, with multiple realisations of the same piece that can be experienced and explored.

INSTRUCTIONS

Move (w, s, a, d / left, right, up, down) // listen // stop // listen // reposition (mouse click and drag) // listen //

∞

find nodes of intersection // find nodes of silence // always listen //

∞

The Big Crash VR

Hubs Link: https://hubs.mozilla.com/ufU2mgo/the-big-crash

The Big Crash VR is a nonlinear immersive virtual reality art piece based on data mined from real estate sites. It is part of Malte Steiner's art project The Big Crash and takes as its thematic point of departure the instability of the real estate market as a consequence of extreme gentrification, something that is actively taking place in all bigger cities around the globe. A ‘big bubble crash’ is pending as a result, and the piece deals with the increasing uncertainty this development creates and its effects on society.

The foundation is software written by Malte Steiner that harvests data from several Berlin real estate websites, e.g. prices and square meters, as well as the visuals of the advertisements. These images are analyzed with a machine learning algorithm that performs image segmentation, separating the objects found in them into picture fragments. The Big Crash VR takes those elements to create a surrealistic virtual reality in which the user can move around freely. They are arranged into bizarre architectural artifacts reminiscent of early automatically generated content for digital maps. Some are even recreated as 3D models.

Numerical data such as prices and square meters over a certain period of time are used to control a custom software synthesizer implemented in Csound and Max/MSP, plus an analogue modular synthesizer, to create the Piece For Modular Synthesizer And Real Estate Data and The Chants Of Real Estate Data. Fragments of the pieces are separated and arranged in the Hubs VR world in a multichannel setup for the data sonification. The user has time to walk or fly through the VR environment and experience different aspects of the spatial sound, which changes over time and location.
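As a rough illustration of data sonification, a series of numerical values can be mapped onto a synthesizer parameter such as oscillator frequency. The actual mapping used in the Csound and Max/MSP synthesizer is not described here, so the exponential scaling, the frequency range, and the function name below are assumptions, and any input values are hypothetical rather than harvested data.

```python
def map_to_frequency(values, low_hz=110.0, high_hz=880.0):
    """Map a data series onto [low_hz, high_hz] with exponential (pitch-like) scaling.

    The smallest value maps to low_hz and the largest to high_hz.
    """
    lowest, highest = min(values), max(values)
    if highest == lowest:
        # A flat series carries no contour; hold the lowest frequency.
        return [low_hz] * len(values)
    ratio = high_hz / low_hz
    return [low_hz * ratio ** ((v - lowest) / (highest - lowest))
            for v in values]
```

Exponential rather than linear scaling is chosen in this sketch because equal steps in the data then correspond to equal musical intervals; a linear mapping would be an equally valid design choice.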

INSTRUCTIONS

Wander around with the help of the cursor keys or alternatively the keys W (forward), A (left), S (backwards), and D (right), or, if you are using a VR headset, the appropriate input devices. Observe the large area but also the spatial, virtual multichannel audio. Holding down the shift key speeds up the motion and makes it easier to reach all areas. By moving the mouse you can look around and up.

If you feel adventurous, you can toggle fly mode by pressing G. You are then no longer bound to the ground and can even hover over the buildings. Pressing G again drops you back to the ground.

Isolation Therapy

Hubs Link (single-user): https://redirection-page.glitch.me

Hubs Link (multi-user): https://hubs.mozilla.com/rsTAXsg/6-hours

* PLEASE NOTE: Use the "single-user" link to experience the work alone. This will create a specific room for you when you click on the link.

Isolation Therapy adapts Mozilla Hubs, a social platform for web-based virtual reality, as an environment for continuous site-specific sound installation to simulate an experience of forced isolation. Exploring interactive forms of musical composition, the work appropriates audio spatialization algorithms to create multiple hyper-localized sound fields throughout the space. These sound fields contain audio loops of varying lengths, which can only be heard in close proximity and appear to interweave in a nonlinear timeline. Thus, each audience member will compose their own listening experience as they navigate the confined space.

Upon entering the work, the audience will find themselves in the unlit corner of a small dilapidated room, surrounded by an ocean-like soundscape. They are free to explore the room, but unable to exit through the closed door. Visually, the room appears to have an uncanny mixture of tactile details that simulate the real world (e.g., the crumbling paint and chalk drawings on the walls) and bizarre features that signal the virtual space (e.g., the fluorescent light and pieces of paper floating in mid-air). Aurally, as one moves around the room, one experiences a rapidly shifting soundscape with extreme localization that defies the laws of the physical world. There are sounds that evoke strong physical sensations, such as beating sine tones and wall-scratching sounds, as well as disembodied voices carrying reverbs of enormous spaces, which contradict the appearance of this small room.

The multitude of contradictions in this virtual confinement creates a sense of tension, serving as a metaphor for the paradox of autonomy on the internet. Morita Therapy, a type of psychotherapy after which this installation is modeled, “treats” patients by locking them up in a small room with little human contact for up to months at a time. It has been practiced at one of the largest internet addiction treatment centers in China since 2008, which targets not only people’s online gaming habits, but also depression, teenage dating, homosexuality, amongst other qualities that could be deemed undesirable to desperate parents. Using the contested diagnosis and treatment of internet addiction as a mirror, “Isolation Therapy” reflects growing forms of social control such as censorship in the increasingly institutionalized cyberspace.