Download my 256A Project Code from here

This is my Music 256A final project, which I call the SoundScape. The explanation of the project is divided into the following five parts:

1. Introduction and Motivation

2. Application Components

3. Software Components

4. Setting up the project on your system

5. Resources

When I first heard the Virtual Barbershop six years ago (it uses HRTFs and spatialized audio rendering), it triggered my curiosity and imagination. I was impressed that our ears could be tricked in this way into perceiving 3D positions for audio sources. Rendering spatialized audio for headphones using HRTFs has always excited me, and I felt this course gave me a perfect platform to build something along the lines of HRTF-convolved, spatialized audio rendering.

My application aims to create different soundscapes within a single screen, so that the listener can experience different acoustic spaces (both synthetic and ambient) within the same restricted area. The components and the different soundscapes are explained in the following part.

The abstractness of the user interface reflects my thoughts, my love for what I do, my past experiences and everything I appreciate in this world.

Before I dive into the components, note that most of the project was developed using openFrameworks and its addons, OpenAL Soft, and the OpenAL EFX extension.

1. The Spatialized MIDI playing area: This soundscape lets the user play MIDI notes and instruments in the 3D audio scene that I have created in my application. Each note is depicted by a graphic (a bokeh in this case) and can be moved around in the GUI (this works best with a touch screen). The device screen lets the user move the notes along the Z and X axes. The Y-axis displacement is selected randomly within a small range (from negative to positive values on the Y axis).

2. Ambient World Soundscape: This soundscape lets the user move everything the mic hears from the outside world around in the 3D audio scene that I have created in my application. If the headphones provide decent noise cancellation, moving the ambient environment around in the 3D sound field surrounding your head sounds interesting.

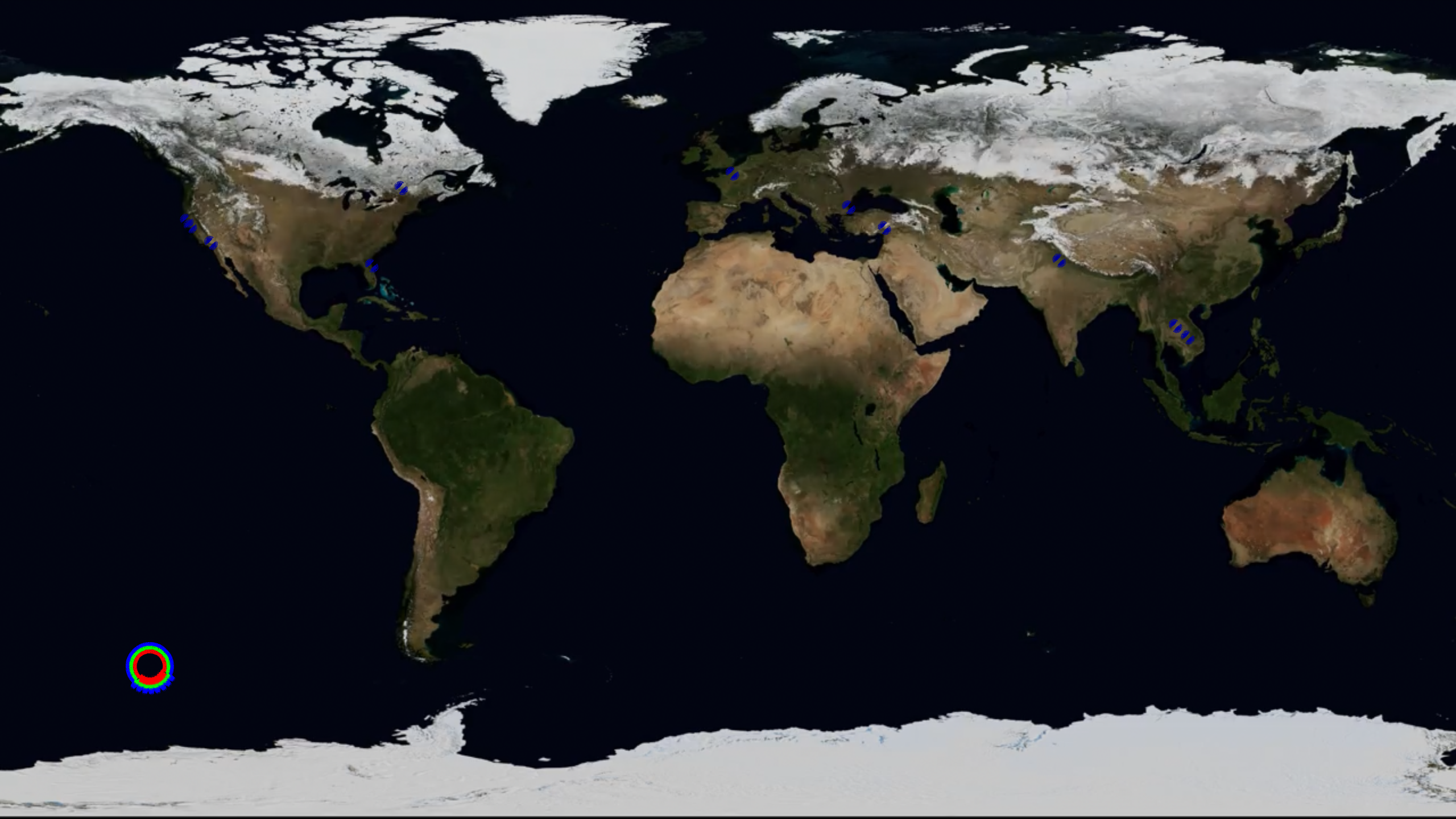

3. Earth View SoundScape: This view provides a bigger soundscape by presenting an earth simulation, with geo-tagged audio recorded by a user around the globe mapped onto the earth map. These audio recordings are spatialized using the same spatialization method. An audio file parser reads the latitude and longitude information from each audio file and then maps those coordinates onto the earth simulation.

4. Listener SoundScape: Remember that the listener has its own soundscape, i.e. the listener has its own sound field, which must mingle with the sound field of the sources for the audio to be experienced in 3D. Thus the listener in the application (depicted as an abstract alien) has to physically move to the different soundscapes to experience them.

5. Distance Models: I have employed an exponential model for the decay of each sound source's level with increasing distance from the listener. The sound level remains constant within a specified radius of the source and dies out exponentially beyond that radius.

6. Real Time Reverb: The application gives you the option to add reverb from four different locations (St. Peter's Basilica, an auditorium, underwater and a synthetic psychotic location). This lets you experience the soundscapes within different acoustic spaces from real and synthetic environments.

7. Movement of soundscape: Each sound source, the listener and the ambient environment can be moved in the 3D audio space created by my application. The position of the listener matters most, since it determines how everything out in the environment is heard.

8. MIDI: As the application lets you play on a MIDI keyboard, MIDI support forms another important part of the application.
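The distance behaviour described in item 5 (constant level inside a radius, decay beyond it) can be sketched as a small gain function. This is only an illustration, not the project's code: `exponentialGain` and its parameter names are hypothetical, and the formula is the clamped "exponent distance" model documented for OpenAL, which is strictly a power law in distance. The write-up does not say whether this model or a true exponential was used.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper (not from the project source).
// Returns the linear gain for a source at `distance` from the listener,
// using the clamped exponent distance formula documented for OpenAL:
//   gain = (d / referenceDistance) ^ (-rolloffFactor),
// where d is `distance` clamped to [referenceDistance, maxDistance].
float exponentialGain(float distance, float referenceDistance,
                      float maxDistance, float rolloffFactor) {
    assert(referenceDistance > 0.0f && maxDistance >= referenceDistance);
    // Inside the reference radius the level stays constant (gain 1.0);
    // beyond maxDistance the gain stops decreasing.
    const float d = std::min(std::max(distance, referenceDistance), maxDistance);
    return std::pow(d / referenceDistance, -rolloffFactor);
}
```

With a reference distance of 1 and a rolloff factor of 2, a source at distance 0.5 keeps full gain, while a source at distance 2 is attenuated to a quarter. In OpenAL itself the equivalent behaviour would typically be selected with `alDistanceModel(AL_EXPONENT_DISTANCE_CLAMPED)` and tuned per source through `AL_REFERENCE_DISTANCE`, `AL_ROLLOFF_FACTOR` and `AL_MAX_DISTANCE`; whether the project does exactly this is an assumption.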

My application has the following software components:

1. Audio Scene Creator class: This class handles the 3D rendering and the HRTF convolution of the audio. It provides the following functions:

2. Convolution Thread class: This class performs the fast convolution that renders the real-time reverb for the ambient environment (the real-time mic input). It is a separate thread (an openFrameworks ofThread) that runs in parallel with the main thread and performs low-latency convolution on the mic input. A separate thread was used because the convolution, even with a fast low-latency implementation, can be slow enough to block the main thread, which has to keep up with the faster graphics rate.

3. OfApp class: This class handles the main chunk of the application's features. I see it as the central class to which everything else is connected. It provides the following functions:
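To illustrate the kind of block-wise fast convolution that the Convolution Thread class (item 2 above) performs, here is a minimal sketch of FFT-based overlap-add convolution. It is not the project's code: `fft`, `OverlapAddConvolver` and the block size are hypothetical names chosen for illustration, and the actual implementation may partition the impulse response differently to reduce latency.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <utility>
#include <vector>

using cvec = std::vector<std::complex<double>>;

// In-place iterative radix-2 FFT. a.size() must be a power of two.
// With inverse == true the result is scaled by 1/n.
void fft(cvec& a, bool inverse) {
    const std::size_t n = a.size();
    // Bit-reversal permutation.
    for (std::size_t i = 1, j = 0; i < n; ++i) {
        std::size_t bit = n >> 1;
        for (; j & bit; bit >>= 1) j ^= bit;
        j ^= bit;
        if (i < j) std::swap(a[i], a[j]);
    }
    const double pi = std::acos(-1.0);
    for (std::size_t len = 2; len <= n; len <<= 1) {
        const double ang = 2.0 * pi / static_cast<double>(len) * (inverse ? 1.0 : -1.0);
        const std::complex<double> wlen(std::cos(ang), std::sin(ang));
        for (std::size_t i = 0; i < n; i += len) {
            std::complex<double> w(1.0, 0.0);
            for (std::size_t k = 0; k < len / 2; ++k) {
                const std::complex<double> u = a[i + k];
                const std::complex<double> v = a[i + k + len / 2] * w;
                a[i + k] = u + v;
                a[i + k + len / 2] = u - v;
                w *= wlen;
            }
        }
    }
    if (inverse) {
        for (auto& x : a) x /= static_cast<double>(n);
    }
}

// Hypothetical streaming convolver: feeds fixed-size input blocks through an
// impulse response (e.g. a reverb) using overlap-add.
class OverlapAddConvolver {
public:
    OverlapAddConvolver(const std::vector<float>& ir, std::size_t blockSize)
        : block_(blockSize), fftSize_(1) {
        assert(!ir.empty() && blockSize > 0);
        // The FFT must hold a full linear convolution of one block with the IR.
        while (fftSize_ < blockSize + ir.size() - 1) fftSize_ <<= 1;
        irSpectrum_.assign(fftSize_, std::complex<double>(0.0, 0.0));
        for (std::size_t i = 0; i < ir.size(); ++i) irSpectrum_[i] = ir[i];
        fft(irSpectrum_, false);  // The IR spectrum is computed once, up front.
        overlap_.assign(fftSize_, 0.0);
    }

    // Processes exactly one block and returns one block of output samples.
    std::vector<float> process(const std::vector<float>& in) {
        assert(in.size() == block_);
        cvec buf(fftSize_, std::complex<double>(0.0, 0.0));
        for (std::size_t i = 0; i < block_; ++i) buf[i] = in[i];
        fft(buf, false);
        for (std::size_t i = 0; i < fftSize_; ++i) buf[i] *= irSpectrum_[i];
        fft(buf, true);
        // Add this block's result onto the tails left over from earlier blocks.
        for (std::size_t i = 0; i < fftSize_; ++i) overlap_[i] += buf[i].real();
        std::vector<float> out(block_);
        for (std::size_t i = 0; i < block_; ++i) out[i] = static_cast<float>(overlap_[i]);
        // Shift the accumulator left by one block and clear the freed space.
        std::copy(overlap_.begin() + block_, overlap_.end(), overlap_.begin());
        std::fill(overlap_.end() - block_, overlap_.end(), 0.0);
        return out;
    }

private:
    std::size_t block_;
    std::size_t fftSize_;
    cvec irSpectrum_;
    std::vector<double> overlap_;
};
```

In an openFrameworks app, `process` would presumably be called from the worker thread's `threadedFunction()` for each incoming mic buffer, so that the draw loop on the main thread is never blocked. That wiring is an assumption about how the pieces fit together, not something taken from the project source.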

Install OpenAL Soft, or build it from source: OpenAL_GitHub.

To configure OpenAL to use HRTF, follow these steps very carefully.

Once OpenAL has been set up correctly, link it with your project in Visual Studio:

a. OpenAL Soft: A great API that I have employed for the HRTF rendering and for getting some reverb effects to work.

b. OpenAL: The original OpenAL API, which doesn't support HRTFs but gives you a detailed description of the API's functionality and its functions.

c. ofxMIDI: The openFrameworks MIDI addon that I employed to

d. HRTFs from IRCAM: The pool of HRTFs from which I picked the ones that suited my head (by listening to all of them) and used for the 3D rendering.

e. Fast Convolution:

1. Good Read

2. GitHub repos: FFT Convolution, SSE-Convolution

f. EFX effects Extension for OpenAL guide

I will be developing a less abstract version of this application for iOS over the next month, so look out for that. For the earth view, I will also try to collect as many geo-tagged audio recordings as I can, and then create a web version of the earth view and put it up on my website.