Strange Instruments (2018)

At a certain point, I felt stuck working on VRAPL. I didn't know what to implement next because I wasn't sure why someone would use such a programming language, so I knew I needed to return to basics and find that out.

I created a series of "strange instruments" in VR, focusing on musical interactions that would be impossible in physical reality.

(I also worked on creating "strange environments" at the same time.)


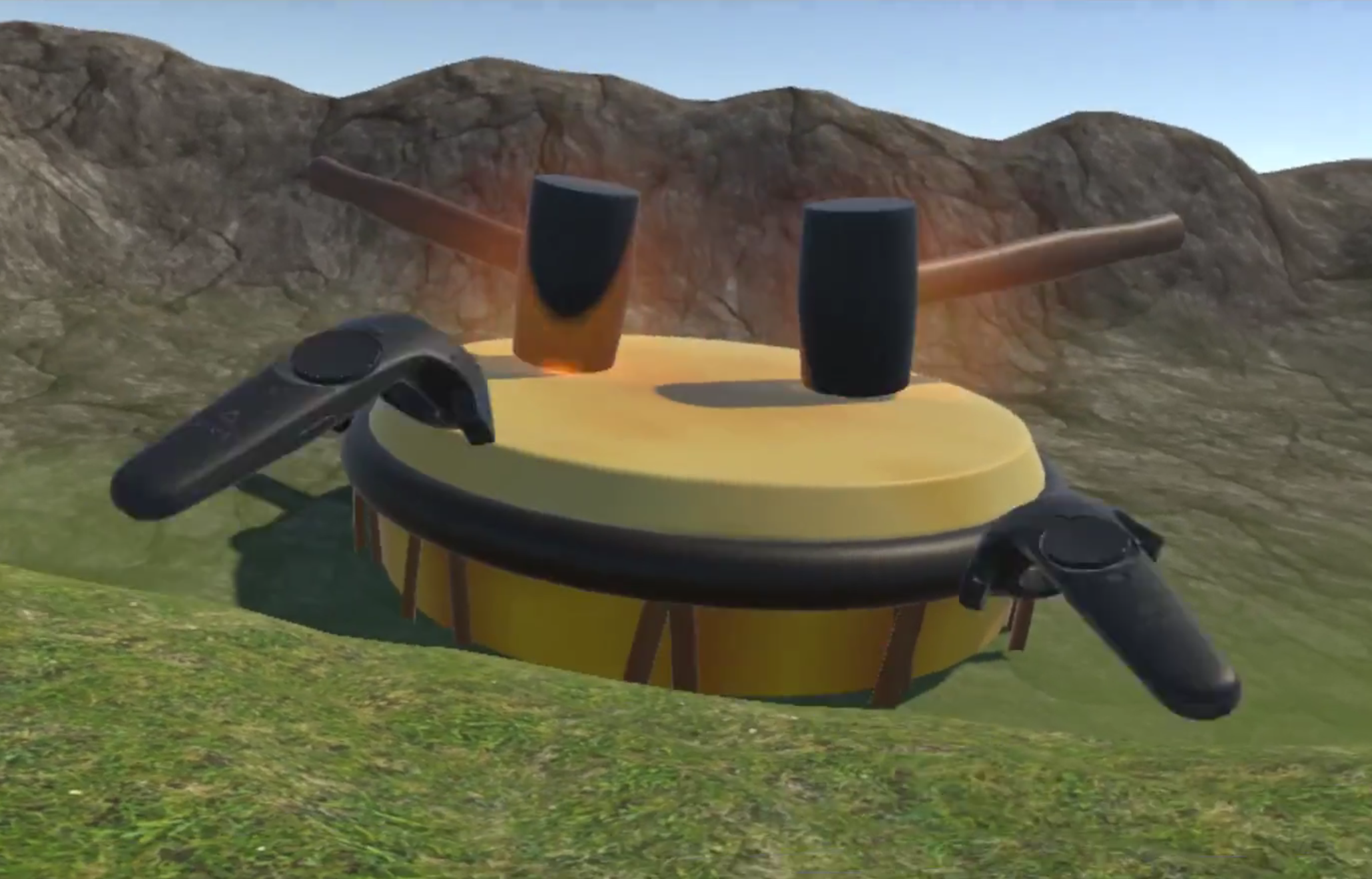

Canyon Drum

The focus of Canyon Drum was to methodically think through all of the different ways one could possibly play a drum that was very large and very far away in VR. Ultimately, I didn't feel any of them were totally successful, but some had their merits.

Extendy Hands

This was the first method I tried for playing a large, distant drum. It followed this paper's method.

The basic idea is that within a limited range, your virtual hands mimic your real hands, but as you reach out, they travel exponentially away from you.

It was pretty awful no matter how I toyed with the sensitivity. I couldn't ever get the hands to intersect with the drum.
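For reference, here is a minimal sketch of the kind of mapping described above, not the paper's exact formula. It assumes distance is measured from a hypothetical shoulder point: the virtual hand tracks the real hand 1:1 up to a threshold, then the extra reach grows exponentially. `threshold` and `gain` are hypothetical stand-ins for the sensitivity I was tuning, in Unity units.

```python
import math


def virtual_hand_distance(real_dist, threshold=0.4, gain=5.0):
    """Map the real hand's distance from the shoulder to the virtual hand's distance.

    Hypothetical parameters: 1:1 inside `threshold`; beyond it, the extra reach
    grows exponentially, and `gain` sets how fast.
    """
    if real_dist <= threshold:
        return real_dist
    excess = real_dist - threshold
    # (exp(g*e) - 1) / g is 0 with slope 1 at e = 0, so the mapping has no jump.
    return threshold + (math.exp(gain * excess) - 1.0) / gain


def virtual_hand_position(shoulder, hand, threshold=0.4, gain=5.0):
    """Place the virtual hand along the shoulder-to-hand direction at the mapped distance."""
    offset = [h - s for h, s in zip(hand, shoulder)]
    dist = math.sqrt(sum(c * c for c in offset))
    if dist == 0.0:
        return tuple(shoulder)
    scale = virtual_hand_distance(dist, threshold, gain) / dist
    return tuple(s + c * scale for s, c in zip(shoulder, offset))
```

Starting the exponential term at zero with slope 1 keeps the virtual hand from jumping as the real hand crosses the threshold.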

Laser Pointer

This is the most basic possible interaction: point and click. Here I press the touchpad to generate a laser pointer and click the touchpad to fire it.

This allows playing different areas of the drum, but offers no control over intensity.

I also tried it with the trigger, but that felt more awkward than pressing the touchpad.
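Firing the laser comes down to a ray-disc intersection. A minimal sketch, assuming the drum head is a flat disc described by hypothetical `center`, `normal`, and `radius` values (in a Unity project this would more likely be a `Physics.Raycast` against the drum's collider):

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def ray_hits_drum(origin, direction, center, normal, radius, eps=1e-9):
    """Return where the laser hits the drum head, or None if it misses.

    The drum head is modelled as a flat disc (centre, unit normal, radius).
    `direction` does not need to be normalized.
    """
    denom = dot(direction, normal)
    if abs(denom) < eps:
        return None  # laser runs parallel to the drum head
    to_center = [c - o for c, o in zip(center, origin)]
    t = dot(to_center, normal) / denom
    if t < 0:
        return None  # drum head is behind the controller
    point = tuple(o + t * d for o, d in zip(origin, direction))
    offset = [p - c for p, c in zip(point, center)]
    if math.sqrt(dot(offset, offset)) > radius:
        return None  # hit the drum's plane but outside the head
    return point
```

The returned point can choose where on the drum the note sounds; nothing in a click carries intensity, which is the limitation noted above.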

Matching Hand Velocity

This method propagates the velocity of the controller to the velocity of the mallet head. It feels pretty natural because there's a (nearly) 1:1 visual mapping between what is happening with your hands and what you are seeing with the mallets. (It's not exactly 1:1 because the mallet can't keep moving through the drum as your hand keeps moving down.) Matching velocity worked much better than using velocity to add force or matching angular velocity.
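A rough sketch of velocity matching in plain Python rather than Unity's physics engine: each step, the mallet head simply takes on the controller's velocity, estimated from two tracked positions, except that it stops at the drum head. The flat drum plane at `drum_top_y` and both function names are hypothetical simplifications.

```python
def estimate_velocity(prev_pos, curr_pos, dt):
    """Finite-difference velocity of the tracked controller between two frames."""
    return tuple((c - p) / dt for p, c in zip(prev_pos, curr_pos))


def step_mallet(mallet_pos, controller_velocity, dt, drum_top_y):
    """Advance the mallet head one step by copying the controller's velocity.

    The drum head is simplified to the plane y = drum_top_y (hypothetical).
    Returns (new_position, new_velocity, hit).
    """
    vx, vy, vz = controller_velocity
    x, y, z = mallet_pos
    nx, ny, nz = x + vx * dt, y + vy * dt, z + vz * dt
    if ny <= drum_top_y and vy < 0:
        # The mallet cannot pass through the head even if the hand keeps moving
        # down; this is why the mapping is only nearly 1:1.
        hit = y > drum_top_y  # only the step that crosses the surface is a hit
        return (nx, drum_top_y, nz), (vx, 0.0, vz), hit
    return (nx, ny, nz), (vx, vy, vz), False
```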

There is haptic feedback on the controller with each collision. This lets you be more precise, because you can feel in your hand exactly when the stroke ends instead of relying on inter-modal communication from your eyes and ears to tell your arm to stop moving downward.

The drum's mesh springs outward more if the drum is hit with more force. Here I increased the spring constant so that the drum springs back into position more quickly. I think that this version looks more natural, so I ended up leaving it on these settings for now.
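A minimal sketch of that spring behaviour for a single mesh vertex: the hit gives the vertex an outward velocity proportional to its force, and a damped spring pulls it back. The unit mass, the `damping` value, and the 90 Hz step are assumptions, not the project's settings.

```python
def simulate_vertex(impulse, k, damping=4.0, dt=1.0 / 90.0, steps=200):
    """Outward displacement of one drum-mesh vertex after a hit.

    Unit mass; the hit sets the vertex's initial outward velocity to `impulse`,
    so a harder hit pushes the mesh further out. Semi-implicit Euler integration.
    """
    x, v = 0.0, impulse
    trace = []
    for _ in range(steps):
        a = -k * x - damping * v  # spring pulls back toward rest; damping bleeds energy
        v += a * dt
        x += v * dt
        trace.append(x)
    return trace


def time_to_return(trace, dt=1.0 / 90.0):
    """Seconds until the vertex first swings back through its rest position, or None."""
    for i, x in enumerate(trace):
        if i > 0 and x <= 0.0:
            return (i + 1) * dt
    return None
```

Raising `k` brings the vertex back through its rest position sooner. With `damping` held fixed, though, the decay rate of the oscillation in the continuous model stays the same, so a stiffer spring mostly rings at a higher frequency rather than settling sooner.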

Local Surrogate Surface

Here's a surrogate drum surface: there's a small transparent disc in front of you, and wherever you hit the disc with your controller, there is an analogous hit on the drum, far away. This allows for both choosing the position and controlling the intensity of the hit.
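The surrogate mapping is a change of scale between two discs. A minimal sketch, assuming both the local disc and the drum head use their own 2D coordinates centred on the disc, and that intensity comes from how fast the controller moves into the local disc; `local_radius`, `drum_radius`, and `max_speed` are hypothetical.

```python
def map_to_drum(local_xy, local_radius, drum_radius):
    """Scale a hit on the small local disc to the same relative spot on the far drum.

    Both points are 2D offsets from their disc's centre, in the disc's own plane.
    """
    scale = drum_radius / local_radius
    return (local_xy[0] * scale, local_xy[1] * scale)


def hit_intensity(controller_velocity, disc_normal, max_speed=3.0):
    """0..1 loudness from how fast the controller moves into the disc.

    `disc_normal` is a unit vector pointing out of the face you strike;
    `max_speed` (Unity units per second) is a hypothetical full-volume speed.
    """
    speed_into_disc = -sum(v * n for v, n in zip(controller_velocity, disc_normal))
    return max(0.0, min(1.0, speed_into_disc / max_speed))
```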

I've programmed the drum to use different sound files depending on which region was hit. Hits within 5% of the edge use an edge-like sound, hits in a band near the edge use a less intense hit, and hits in the main center of the drum use the main sound file.
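The region choice can be sketched as a lookup on the normalized distance from the drum's centre. This assumes "5% from the edge" means the outermost 5% of the radius; the width of the near-edge band and the returned labels are hypothetical.

```python
import math


def choose_sample(hit_xy, drum_radius, edge_band=0.05, near_edge_band=0.2):
    """Pick which sound file to play from where the drum head was hit.

    `hit_xy` is the hit's 2D offset from the drum's centre. The outermost
    `edge_band` of the radius plays the edge sound; `near_edge_band`
    (hypothetical width) plays the softer near-edge sound.
    """
    r = math.hypot(hit_xy[0], hit_xy[1]) / drum_radius  # 0 at centre, 1 at rim
    if r >= 1.0 - edge_band:
        return "edge"
    if r >= 1.0 - near_edge_band:
        return "near_edge"
    return "center"
```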

Air Drumming

This implementation is inspired by Luke Dahl's 2014 paper on air drumming. He found that a peak in acceleration magnitude was a close indicator of when an air-drummed sound should be played.

I don't have access to an accelerometer, so I estimated acceleration and jerk as frame-wise differences of velocity and acceleration. Then, I play a note when the acceleration magnitude is above a threshold, when the current jerk is negative (acceleration decreasing) and the previous jerk was positive (acceleration increasing), and when the velocity is vaguely down (within a 45 degree downward cone).
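A minimal sketch of that rule, assuming a stream of per-frame controller velocities in Unity units per second with y up, and a hypothetical `accel_threshold`. It treats "jerk" as the frame-wise change in acceleration magnitude, which is one reading of the description, and it applies no debounce.

```python
import math


class AirDrumDetector:
    """Detects air-drum strokes from a stream of per-frame controller velocities.

    Acceleration is the frame-wise difference of velocity; "jerk" here is the
    frame-wise change in acceleration *magnitude*. accel_threshold is
    hypothetical; y is up. No debounce is applied.
    """

    def __init__(self, accel_threshold=30.0, cone_deg=45.0):
        self.accel_threshold = accel_threshold
        self.cos_cone = math.cos(math.radians(cone_deg))
        self.prev_velocity = None
        self.prev_accel_mag = None
        self.prev_jerk = None

    def update(self, velocity, dt):
        """Feed one frame; return True if this frame completes an acceleration peak."""
        hit = False
        if self.prev_velocity is not None:
            accel = [(v - p) / dt for v, p in zip(velocity, self.prev_velocity)]
            accel_mag = math.sqrt(sum(a * a for a in accel))
            if self.prev_accel_mag is not None:
                jerk = (accel_mag - self.prev_accel_mag) / dt
                if self.prev_jerk is not None:
                    speed = math.sqrt(sum(v * v for v in velocity))
                    # Within the downward cone: angle to (0, -1, 0) is at most cone_deg.
                    downward = speed > 0 and -velocity[1] / speed >= self.cos_cone
                    hit = (accel_mag > self.accel_threshold
                           and self.prev_jerk > 0 > jerk
                           and downward)
                self.prev_jerk = jerk
            self.prev_accel_mag = accel_mag
        self.prev_velocity = tuple(velocity)
        return hit
```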

It needs to be debounced because a single stroke produces several peaks. The first peak is not always the correct one, so sometimes a weak hiccup partway through a big stroke triggers the note instead of the stroke's real peak. But it's possible to quickly learn a technique that usually avoids those hiccups.

I tried adding haptic feedback, but it tripped me up and I kept playing double notes. However, when I delayed the haptic feedback by 50 ms (chosen after also trying 40 ms and 60 ms), it actually felt really natural.

Unfortunately, because of the debounce, you can't play really fast with this method. I found that the "happy" medium between quick notes and no accidental notes was a debounce of 0.25 s per hand. So the fastest you could play is 4 notes per second per hand (one every 0.25 s), or an overall rate of 8 notes per second.
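The debounce is a per-hand time gate. A minimal sketch, assuming each detection arrives with a timestamp in seconds and a hand label (both hypothetical); with a 0.25 s interval, each hand can trigger at most one note every 0.25 s.

```python
class Debouncer:
    """Per-hand gate: drop detections within `interval` seconds of that hand's last note."""

    def __init__(self, interval=0.25):
        self.interval = interval
        self.last_note_time = {}  # hand label -> time of last accepted note

    def accept(self, hand, t):
        """Return True (and remember t) if this detection should play a note."""
        last = self.last_note_time.get(hand)
        if last is not None and t - last < self.interval:
            return False
        self.last_note_time[hand] = t
        return True
```

Keeping a separate timestamp per hand means one hand's note never blocks the other's, which is why the two hands' rates add together.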

Air Swinging

An unexpected extra affordance of the air drumming interaction mechanism: if you swing the controller around, the hits correspond to the moments when you inject more energy into the swing to keep it going. That feels really satisfying. It doesn't mimic the gesture at all, but it does mimic the fact that when you do something forceful with your hand, a sound happens.

If you let the motion slow down so it has a gravity fall before you swing it back up, there are 2 hits: one for the gravity fall and one for injecting energy back into the system. That also feels super good.

Strange Flute

I created a "strange flute" that mimics some of the interactions of playing a flute while adding some that would only be possible in VR. The distance between your hands controls the pitch by lengthening the flute; the pitch is clamped to whole MIDI note values but slews between them. This feels hard to control, but is otherwise fine. I might try connecting the two controllers with a rubber band to see if that makes the pitch easier to control. The maximum range also needs tweaking, since it is difficult to hold my arms out so wide. (In software, I set the maximum distance to 2 Unity units, beyond which the note doesn't change. In practice, however, my hands can only get 1.5 Unity units apart, so it might feel more satisfying to stop changing the pitch beyond, say, 1 Unity unit.)
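A minimal sketch of the pitch mapping, assuming that wider hands mean a longer flute and therefore a lower note. The 2-unit cap comes from the text; `min_dist`, the note range, and the per-frame slew step are hypothetical. The target is rounded to a whole MIDI note, and the sounding pitch slews toward it by a limited amount each frame.

```python
import math


def target_note(hand_distance, min_dist=0.2, max_dist=2.0, high_note=84, low_note=60):
    """Whole MIDI note for a given distance between the hands (wider = longer flute = lower)."""
    d = min(max(hand_distance, min_dist), max_dist)  # no change beyond max_dist
    frac = (d - min_dist) / (max_dist - min_dist)    # 0 = hands close, 1 = fully apart
    return round(high_note + frac * (low_note - high_note))


def slew(current, target, max_step):
    """Move the sounding pitch toward the target by at most max_step semitones per frame."""
    if abs(target - current) <= max_step:
        return float(target)
    return current + math.copysign(max_step, target - current)


def midi_to_hz(note):
    """Equal-tempered frequency, with MIDI note 69 = A4 = 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)
```

Each frame, something like `pitch = slew(pitch, target_note(distance), 0.5)` followed by `midi_to_hz(pitch)` would give a frequency that glides between whole notes.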

The amount of twist between your hands strains the flute, making it look redder and sound distorted. This works pretty well, but I wish the distortion sounded better. (I guess I wish the flute sounded better too!)
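One way to turn twist into distortion, as a minimal sketch and not necessarily what the project does: measure how far apart the two hands are rotated about the flute's axis (the per-hand roll angles are hypothetical inputs), then use that 0-to-1 amount to set the drive of a `tanh` soft clipper. The same value could set the red tint.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to the range [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def twist_amount(left_roll, right_roll):
    """0 when both hands share the same roll about the flute's axis, 1 at half a turn apart."""
    return abs(wrap_angle(right_roll - left_roll)) / math.pi


def distort(sample, twist, max_drive=10.0):
    """Soft-clip one audio sample; more twist means more drive (max_drive is hypothetical)."""
    drive = 1.0 + twist * (max_drive - 1.0)
    # Dividing by tanh(drive) keeps a full-scale input at full scale.
    return math.tanh(drive * sample) / math.tanh(drive)
```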

You activate the flute by blowing into the headset microphone; an envelope follower measures how loud you are blowing and sets the flute's volume accordingly. This is super unsatisfying: there's an unavoidable lag between microphone input and output due to the way Unity handles microphone input, which makes it frustrating to use.
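An envelope follower like the one described can be sketched as a rectifier followed by a one-pole smoother with separate attack and release times. The 48 kHz sample rate and both time constants are assumptions.

```python
import math


class EnvelopeFollower:
    """Tracks the loudness of a microphone signal with a rectifier and one-pole smoother."""

    def __init__(self, sample_rate=48000, attack_ms=5.0, release_ms=100.0):
        # Per-sample smoothing coefficients for the given time constants.
        self.attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
        self.release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
        self.level = 0.0

    def process(self, samples):
        """Consume a block of samples in [-1, 1]; return the current envelope level."""
        for x in samples:
            rectified = abs(x)
            # Rise with the attack time, fall with the (slower) release time.
            coeff = self.attack if rectified > self.level else self.release
            self.level = coeff * self.level + (1.0 - coeff) * rectified
        return self.level
```

The flute's volume could then be something like `min(1.0, gain * level)` for a hypothetical `gain`. The attack time only bounds how fast the envelope can rise; it is separate from the microphone input lag described above.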

Hair Instruments

Here I have two working "hair"-based instruments! You use them by holding them or placing them on your head in VR.

The first works sort of like flexible wind chimes. If you hit a segment with a hammer, it starts ringing out at its pitch and keeps ringing until it stops moving. (The volume is mapped to the movement.) Each segment has a different pitch from a pentatonic scale, and each has its own audio source, so the sound is spatialized to each individual segment. This first instrument doesn't work well when you put it on your head or move it: it tends to spasm a lot. Sometimes it can also be difficult to target the exact segment you want to hit. That's something to consider fixing in a future version.

The second instrument is more of a work in progress. Here, the volume of each segment is controlled by its movement all the time. This second instrument is gentler and better suited to being put on your head: you can play with its strands by pulling at them or by headbanging to swing the hair around you. It needs more specificity in sound, though, because hearing all the notes at once doesn't stay musically interesting for very long. This setup does let you turn the instrument off by laying it on the ground, though.
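Both hair instruments come down to a pitch per segment plus a volume driven by that segment's speed. A minimal sketch, assuming a major pentatonic scale (the text only says "pentatonic") and hypothetical speed constants. `needs_strike=True` models the first, chime-like instrument, which only sounds after a hammer hit and goes quiet once the segment stops moving; `needs_strike=False` models the second, which responds to movement all the time.

```python
PENTATONIC_STEPS = [0, 2, 4, 7, 9]  # major pentatonic, in semitones above the root (assumed)


def segment_notes(num_segments, root=60):
    """Give each segment the next pentatonic degree, moving up an octave as needed."""
    notes = []
    for i in range(num_segments):
        octave, degree = divmod(i, len(PENTATONIC_STEPS))
        notes.append(root + 12 * octave + PENTATONIC_STEPS[degree])
    return notes


def segment_volume(speed, full_volume_speed=2.0):
    """Louder the faster the segment moves, capped at 1."""
    return min(speed / full_volume_speed, 1.0)


class HairSegment:
    def __init__(self, note, needs_strike=True):
        self.note = note
        self.needs_strike = needs_strike  # True: chime-style first instrument
        self.ringing = not needs_strike   # second instrument always responds to movement

    def strike(self):
        """Called when the hammer hits this segment."""
        self.ringing = True

    def volume(self, speed, rest_speed=0.01):
        """Volume for this frame, given the segment's current speed."""
        if self.needs_strike and speed < rest_speed:
            self.ringing = False  # the chime goes quiet once the segment stops moving
        return segment_volume(speed) if self.ringing else 0.0
```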

Shake Marimba

This is a strange marimba that remembers the last note you hit. When you move the controller that hit it, it plays an ethereal sound. When you move that controller really fast, you start to hear the impact, as if your controller were the marimba and something were bouncing around inside it.

This is implemented by using a sound file for the marimba tones, and doing granular synthesis on that sound file for the controller sounds. The gentler sounds are from the midpoint of the file, and the harder sounds are from the beginning of the file.
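A minimal sketch of how controller speed could pick the grain's read position, assuming the marimba file is a list of float samples and a hypothetical `hard_speed` at which grains come entirely from the start of the file. A full granular engine would overlap many such grains; this only shows choosing and windowing one.

```python
import math


def grain_start(speed, num_samples, hard_speed=4.0):
    """Sample index to read a grain from: gentle motion reads near the middle of
    the marimba file, hard motion near the start (the strike transient)."""
    intensity = min(max(speed / hard_speed, 0.0), 1.0)
    return int(0.5 * (1.0 - intensity) * num_samples)


def make_grain(samples, start, length):
    """Copy `length` samples from `start` under a Hann window so the grain fades in and out."""
    if length < 2:
        raise ValueError("grain length must be at least 2 samples")
    grain = []
    for n in range(length):
        idx = start + n
        x = samples[idx] if idx < len(samples) else 0.0  # pad past the end of the file
        w = 0.5 - 0.5 * math.cos(2.0 * math.pi * n / (length - 1))
        grain.append(x * w)
    return grain
```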

The goal was to make an instrument with an affordance not present in its real-world counterpart, and one that lent itself to some kind of actual (odd) performance. I had a lot of fun shaking my controllers around!

I actually didn't feel like I needed to wear the VR headset for this, because I could find the marimba bars by feel and by sound. If I were concerned with hitting the right notes, though, I would use the headset.