Human Computer Interaction


RECOGNIZING SHAPES AND GESTURES USING SOUND AS FEEDBACK

...interaction between people and computers requires knowledge of both the machine and the human side. Users must be at the center of the design of any computer system.

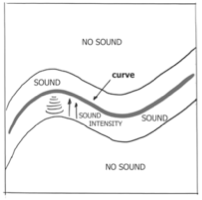

A system has been developed that allows users to recognize 2D shapes and gestures using only sound as feedback. Several curves are loaded into the system, but the user has no access to any visual information. The goal is to identify these curves using different input devices, such as a mouse, a pen tablet or a mobile device, to interact with the system. Sound is generated as the user approaches any of the curves. This sound allows the user to identify the curve shapes and the gestures made while following it. Because the sound is related to the 2D spatial representation, curve shapes can easily be followed using sound as the only reference.

The system uses sonification techniques and the sense of proprioception, which relates the gesture made by the user to the spatial representation of the curve shape. This requires perfect synchronization of perceived audio events with expected tactile sensations.

The sound intensity increases as the distance to the curve decreases. As the user approaches a curve, a sound is generated whose pitch, timbre, duration and intensity can vary according to a specific spatial-to-sound mapping strategy. The system is intended to be used to recognize 2D curve shapes and to capture the gesture made by the user while following the sound.
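A minimal sketch of one possible spatial-to-sound mapping, in Python. Only the rule that intensity grows as distance shrinks comes from the text; the linear ramp, the `max_distance` cut-off and the rise in pitch near the curve are hypothetical choices for illustration, and `map_distance_to_sound` and `SoundParams` are invented names rather than parts of the actual system.

```python
from dataclasses import dataclass


@dataclass
class SoundParams:
    gain: float      # 0.0 (silent) .. 1.0 (full intensity)
    pitch_hz: float  # output frequency in Hz


def map_distance_to_sound(distance: float,
                          max_distance: float = 50.0,
                          base_pitch_hz: float = 440.0,
                          pitch_range_hz: float = 440.0) -> SoundParams:
    """Hypothetical linear mapping: silent at or beyond max_distance,
    loudest and highest-pitched when the pointer lies on the curve."""
    if distance < 0:
        raise ValueError("distance must be non-negative")
    if distance >= max_distance:
        # Outside the audible zone: no sound.
        return SoundParams(gain=0.0, pitch_hz=base_pitch_hz)
    # 1.0 on the curve, falling linearly towards 0.0 at max_distance.
    closeness = 1.0 - distance / max_distance
    return SoundParams(gain=closeness,
                       pitch_hz=base_pitch_hz + closeness * pitch_range_hz)
```

With these defaults, a pointer on the curve gets full gain at 880 Hz, and a pointer 25 units away gets half gain at 660 Hz. A nonlinear ramp (for example, exponential in distance) might be easier to perceive, but that would be a separate design decision.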

Curve shapes are represented by means of parametric curves, a standard in 2D drawing representation. Since the Drawing Exchange Format (DXF) is used to store the graphic information, curve shapes can easily be created in any commercial CAD application and imported into the system.
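The text does not say which kind of parametric curve the system uses. As an illustration only, the sketch below assumes a cubic Bézier segment (one common parametric form; DXF itself stores curves as entities such as splines, arcs and polylines) and samples it into a polyline so that distances can be computed cheaply. `bezier_point` and `sample_bezier` are hypothetical helpers, and no DXF parsing is shown.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def bezier_point(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Evaluate a cubic Bezier curve at parameter t in [0, 1] (Bernstein form)."""
    u = 1.0 - t
    b0, b1, b2, b3 = u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3
    x = b0 * p0[0] + b1 * p1[0] + b2 * p2[0] + b3 * p3[0]
    y = b0 * p0[1] + b1 * p1[1] + b2 * p2[1] + b3 * p3[1]
    return (x, y)


def sample_bezier(p0: Point, p1: Point, p2: Point, p3: Point,
                  n: int = 32) -> List[Point]:
    """Approximate the curve by n + 1 points at evenly spaced values of t."""
    if n < 1:
        raise ValueError("n must be >= 1")
    return [bezier_point(p0, p1, p2, p3, i / n) for i in range(n + 1)]
```

For control points (0, 0), (1, 2), (3, 2), (4, 0), the midpoint at t = 0.5 is (2.0, 1.5). Larger `n` gives a closer approximation at the cost of more segments to test.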

The analysis of the user's motion, the curve representation and the output sound are computed using MAX/MSP, a visual programming environment specifically designed to simplify the creation of audio and visual applications. The graphic output is also rendered in real time using the Jitter module.

Multiple curve shapes can be defined in the same scenario, with a different sound pitch, timbre and panning effect associated with each curve. The distance to each curve is evaluated as the user interacts with the model.
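The per-curve distance evaluation might look like the following Python sketch. It assumes each curve has already been sampled into a polyline and carries its own pitch and stereo pan value; the `Curve` record, its fields and `evaluate_curves` are hypothetical and do not describe the system's actual MAX/MSP patch. Distance is taken as the minimum Euclidean distance from the pointer to any segment of the polyline.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Distance from p to segment ab: project p onto the line, clamp to the segment."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:  # degenerate segment: a == b
        return math.hypot(px - ax, py - ay)
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def polyline_distance(p: Point, pts: List[Point]) -> float:
    """Minimum distance from p to any segment of the polyline."""
    if not pts:
        raise ValueError("polyline needs at least one point")
    if len(pts) == 1:
        return math.hypot(p[0] - pts[0][0], p[1] - pts[0][1])
    return min(point_segment_distance(p, pts[i], pts[i + 1])
               for i in range(len(pts) - 1))


@dataclass
class Curve:
    name: str
    points: List[Point]  # curve sampled into a polyline
    pitch_hz: float      # pitch assigned to this curve (hypothetical)
    pan: float           # -1.0 = left, 0.0 = centre, 1.0 = right (hypothetical)


def evaluate_curves(pointer: Point,
                    curves: List[Curve]) -> List[Tuple[str, float, float, float]]:
    """Return (name, distance, pitch_hz, pan) for every curve, nearest first."""
    results = [(c.name, polyline_distance(pointer, c.points), c.pitch_hz, c.pan)
               for c in curves]
    return sorted(results, key=lambda r: r[1])
```

Sorting by distance makes it easy to give the nearest curve priority, although the text only states that distances to all curves are evaluated; each distance could then drive that curve's own intensity.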

Any generic pointing device, such as a mouse, a pen tablet or a mobile device, can be used to control the system, which facilitates human-computer interaction and reduces the overall cost of the system.

In the following videos, you can see some demos of the developed system:

Version 1.0, March 2009