Jason Riggs (jnriggs) - Ambisonics

Envelop is a non-profit organization dedicated to creating the future of ambisonic software, hardware, and live events. Their initial toolkit allows any artist who uses Ableton Live to create and perform ambisonic music using any sound system architected to support ambisonics.

My project arose out of the realization that I wanted to make ambisonic music, but I didn't want to change my artist workflow. Moreover, I strongly suspect artists are an especially picky group when it comes to their workflows. Expecting every artist who wants to create ambisonic music to switch over to Ableton seems unrealistic at best and counter-productive at worst.

So, I've created a piece of cross-platform software that will work with any DAW whatsoever. I have only tested it thoroughly in Logic Pro X, but I'm confident that further testing will involve minimal issues across all platforms. It probably works out of the box right now with most of them.

That's it for the technical side of the project. I expect it will be officially incorporated into Envelop's open-source toolkit sometime this summer (I still need to design its UI/UX to match the rest of their tools, as well as add a few unessential but nice features). This won't be trivial, but it's not far off, either.

The artistic side of this project involved me thinking about ambisonic music in the context of a club or other live event environment as opposed to an active, quiet listening scenario like you might find at home or CCRMA's listening room/stage with a small group.

Week 1

Technical:

Had never made an Audio Unit, VST, or any other plug-in before. Did research to find the best free, open-source option available. The priorities were to find something that would cause no licensing issues for Envelop, provide a completely cross-platform framework, and be open source itself. After a lot of research, it became clear that the obvious choice was JUCE.

Artistic:

Before taking the course, I had created 2 hours of original stereo music which was designed with ambisonics in mind. I intended to convert those stereo tracks into ambisonic tracks. Due to a sad, sad story involving a car theft and broken back-up hard drive, I lost all of the project files :(. However, I learned a lot and developed theories about what musical ideas would be "extra-special" in ambisonics, and which would not. My artistic focus for this course was to decide how I felt about these theories after making some actual ambisonic music that tested them.

Week 2

Technical:

Got my development environment set up, refreshed my rusty C++ knowledge, and built the "Hello World" plug-in using JUCE.

Artistic:

I sifted through my old stereo tracks (I fortunately had .mp3's of them scattered around my e-mails and phone, so I didn't lose everything!). I found that each track tested out different ambisonic ideas. I started work on a single, new house track which combined many of the ideas I was curious about. Here's a stereo render of that house track idea: Cyberkinetcs (Twilight Mix). It's just a rough draft of some ideas I wanted to explore with ambisonics. I apologize for sharing an unmixed, unfinished version of anything, but you get the idea :).

Week 3

Technical:

Got the plug-in working in its dumbest possible form, but the system works from end to end. It has extreme limitations: you can't use the DAW to automate parameters, it doesn't store its state across saves of the DAW's project file, it doesn't allow use of polar coordinates (Cartesian only), and so on. But the software system now has a full heartbeat. :)
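For reference, supporting polar input on top of a Cartesian-only core mostly comes down to a coordinate conversion. Here's a minimal sketch of that conversion, not the plug-in's actual code. The struct names and the axis convention (x forward, y left, z up, angles in radians) are my assumptions for illustration; a real tool would need to match whatever convention the decoder expects.

```cpp
#include <cmath>

// Hypothetical types for illustration; angles are in radians.
struct Cartesian { float x, y, z; };
struct Polar { float azimuth, elevation, distance; };

// Assumed convention: x points forward, y left, z up.
// Azimuth is measured counter-clockwise from the front, elevation upward
// from the horizontal plane.
inline Cartesian polarToCartesian(const Polar& p) {
    const float horizontal = p.distance * std::cos(p.elevation);
    return { horizontal * std::cos(p.azimuth),
             horizontal * std::sin(p.azimuth),
             p.distance * std::sin(p.elevation) };
}

// Inverse mapping. The origin has no defined direction, so it maps to zeros.
inline Polar cartesianToPolar(const Cartesian& c) {
    const float distance = std::sqrt(c.x * c.x + c.y * c.y + c.z * c.z);
    if (distance == 0.0f)
        return { 0.0f, 0.0f, 0.0f };
    return { std::atan2(c.y, c.x), std::asin(c.z / distance), distance };
}
```

With this in place, the UI can expose either set of knobs while the rest of the system keeps working in Cartesian coordinates.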

Artistic:

More work on the track referenced from the previous week.

Week 4

Technical:

Wrestled with getting my system to work in the CCRMA listening room. This was a long and annoying process which Nando and team were eventually able to solve. It turns out that the mathematics inside Envelop's internal ambisonic library is buggy and needs patching by the original authors. The problem is in the encoding phase of HOA lib, the open-source library used internally within Envelop's Max patches.

I also had to make a special version of my plug-in which would help Nando and team debug the issues most easily.

Artistic:

Did not create anything, but was able to attend the grand opening of Envelop's system at The Midway, a club in San Francisco. Two 45-minute performances showcased their system. I used this time not just to have fun, but also to think hard about my music in that type of context. My final thoughts at the bottom of this page largely originated that night.

Weeks 5-9 (May 2-June 1 or so)

Dropped out of school+work+life (medical emergency!). Withdrew from one class (cs143) and did nothing except try to relax and salvage some work in my computer security class (cs155) where possible. Put my ambisonic work on hold entirely. Not recommended! :(

Week 11

Okay, I'm (sort of) healthy again. Happy to be back at it!

Technical:

Got my software from its barely working state to a much more complete state. The system is set up exactly as before, but it now supports all the features I mentioned were lacking during week 3 above. It still needs work before it can be distributed, but it does everything I set out to do.

Artistic:

Made a small binaural demo of ambient music to help show the system during final presentations. I'll upload a binaural example here once I solve the weird issue I'm having recording it. (shake fist)

Final Presentation Thoughts:

I thought hard about what I'd say to a crowd at CCRMA if given the floor for 5-15 minutes. There's way more I could say than fit into this time period, so I'm choosing to focus on what I think is the most interesting theory I've developed all quarter. I've summarized it here:

(A) Suppose you have an ambisonic recording of some heavily synth-based music - no lyrics, just random synths and a kick drum/drum beat. But synths are swirling around all over in space, random little details are everywhere. It’s beautifully spatialized.

(B) Suppose you also have a stereo version of that track (a “dumbed down” version).

Imagine you’re in the listening room at CCRMA alone listening to (A). There are synths and random noises going on all around you. It can be quite an emotional experience.

Now, imagine you’re in a club of 200 people. It has a nice, loud, powerful, clear sound system. People are facing mostly forward on the dance floor, but they’re constantly moving around. Some people are drunk, some are dancing and moving a lot, some are just bobbing their heads, some are hanging against the wall. It’s deliberate chaos. Imagine you are all listening to (A). I contend that the ambisonic aspect of it ends up making the clubgoers’ actual musical experience not much better, if at all, than if they were all just listening to (B).

Now, suppose you replace (A) with a recording full of lots of “highly semantic” sounds. Voices, lions roaring, cars zooming down a highway, you name it. These sounds are ones that anyone at the club is going to hear and be triggered in a very targeted and powerful psychoacoustic way - if the music gets really quiet in the club and then all of a sudden a huge lion’s roar comes from the east wing, everyone feels the same sense of surprise and fear in that direction - it’s a scary thing to hear, like you’re in a horror movie. This experience makes the ambisonic aspect of the club that much more powerful.

I need to look into research on the psychoacoustic effects of spatialization to come up with a non-hand-wavy version of the scenario above. Even without this research, though, I have a strong hypothesis. My ambisonic work so far has shown me that the “more semantic” an ambisonic experience is, the more it makes the chaotic group situation actually different/special compared to the stereo recording at a club. The gulf is not nearly as large when sitting quietly in the listening room with just a few people.

Onwards

I'm planning to continue this project on all fronts this summer. Fingers crossed, I'll be back to fully-productive me by the end of June or so. I intend to take 220d with the goal of making this a released, real piece of software that other artists can use, as well as exploring the artistic, psychoacoustic issue I've described above. A research paper or multimedia, possibly interactive blog post might be a good format for exploration. I'm still considering all options.

Thanks to the 220c teaching staff. CCRMA has always been and always will be a sanctuary. Also a special thank you to Nando for his love of ambisonics, and for his dedication in getting involved.

Summer 2017 - mus220d

Weeks 1-2

The goal for my summer work was on the technical side - making this into a releasable piece of software by September. That meant focusing on a few priorities for the first part of this:

- Determining a final feature spec for the first public version.

- Architecting things so quick changes can be made as problems arise and feedback comes in.

- Architecting things so it would work on as many machines (old and new) and in as many DAWs as possible.

- Not over-engineering by wasting time on things we'd be likely to throw away.

I met with the Envelop founders inside the Envelop hardware system in SF. We looked at the existing software and took a first stab at what we should and should not spec for the first version.

The short story of that meeting was that I'd mimic most of the features from the Max4Live version of the plug-in, which works strictly in Ableton Live. However, I'd omit some of the less necessary and space-consuming things like the EQ.

Weeks 3-4

One of the biggest complexities in the software was the introduction of a 3d visualizer to go along with each instance of the plug-in. This process had a few more gotchas than it should have.

To make the audio-plugin totally cross-platform, the correct choice of framework is the JUCE library for C++. However, most of JUCE is configured for standard GUI elements like buttons, sliders, and knobs, and not for intense 3d graphics work like a game engine.

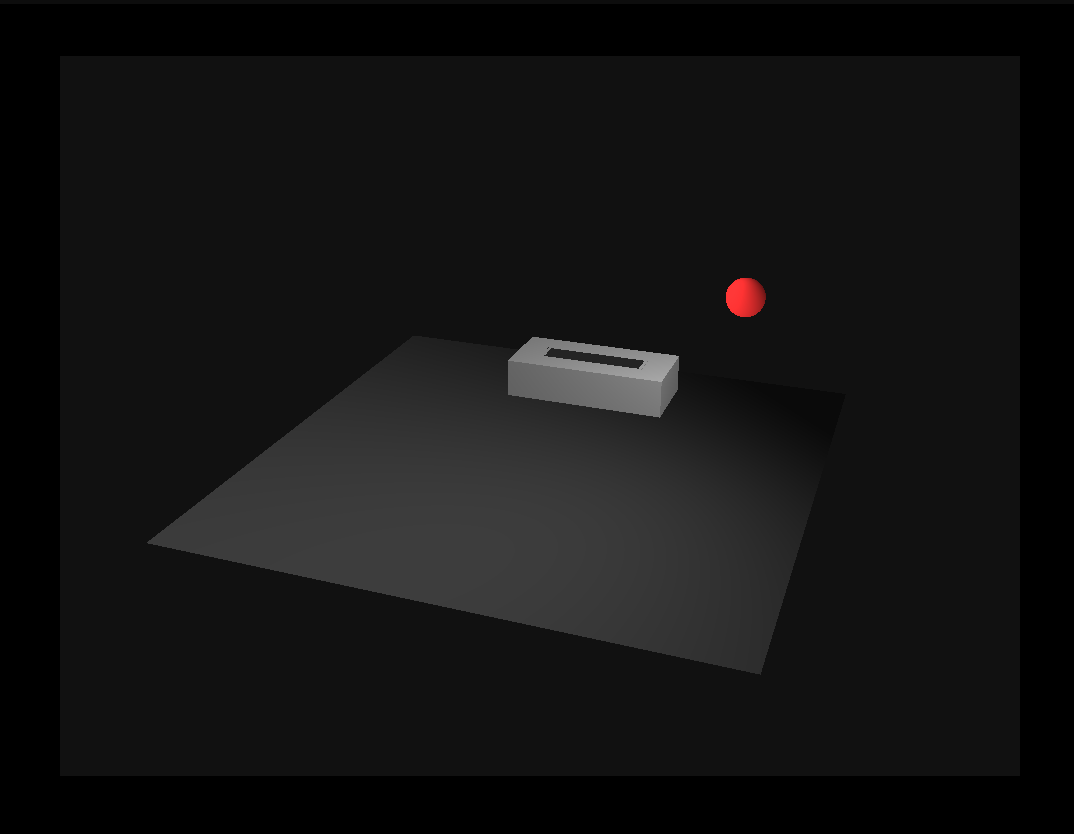

Using the minimal set of OpenGL features that JUCE provides out of the box, I was able to set up a simple scene like this:

Yes, that is actually a 3d world viewed from overhead (not just a 2d canvas). But to make it as useful as possible, I'd need to write a nice-feeling 3d engine mostly from scratch using some of the mostly outdated graphics stuff that JUCE provides. The odd and time-consuming part was JUCE's current OpenGL integration: while it leverages the latest versions of OpenGL itself (4.0+), it allows only an extremely old version of the GPU shading language, GLSL. In particular, JUCE will not allow GLSL versions above 1.2, which is over a decade old. This meant finding ways to mix old GPU code with new CPU-side code, which is not always a great procedure.

Another issue has to do with 3D scientific calculations. One of JUCE's core design philosophies is to provide "everything you'd possibly need" within a single ecosystem so that you don't have to use any outside libraries. However, while JUCE's 3D math libraries include many of the common operations I'd need for fancy graphics calculations, they don't have everything, and what is there is often not as hardware-optimized as it could be. I considered a few approaches like linking GLM into the project, but in an attempt to keep things simple and not prematurely optimize, I opted for modifying JUCE's internal scientific calculations library itself. This involved adding the following things:

- Loading of the popular Wavefront 3d model formats (.obj and .mtl files).

- Operations on 4x4 homogeneous-coordinate matrices: scaling and inverses.

- A 3d 6-degrees-of-freedom camera abstraction for OpenGL's graphics pipeline.

I had some fun reviewing some old linear algebra, where this guide proved extremely useful.

The saddest limitation is that while modern GLSL allows for GPU-side calculations of 4x4 matrix inverses, GLSL 1.2 and below do not, which means that all matrix inverse calculations have to be done CPU-side. Each inverse of a 4x4 matrix involves around 300 multiplications, so having a scene with lots of objects which require per-fragment, realistic lighting takes a higher hit on the CPU than I'd want.
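To give a feel for what "CPU-side inverse" means in practice, here's a minimal sketch of a general 4x4 inverse using Gauss-Jordan elimination with partial pivoting. This is a textbook method that I'm using for illustration, not necessarily what ended up in my modified JUCE code, and the `Mat4` alias and `invertMat4` name are made up for this example.

```cpp
#include <array>
#include <cmath>
#include <utility>

// Hypothetical row-major 4x4 matrix type for illustration.
using Mat4 = std::array<std::array<double, 4>, 4>;

// Gauss-Jordan elimination with partial pivoting.
// Returns false (and leaves `out` untouched) if the matrix is singular.
inline bool invertMat4(const Mat4& m, Mat4& out) {
    Mat4 a = m;
    Mat4 inv{};  // value-initialized to zeros, then set to identity
    for (int i = 0; i < 4; ++i)
        inv[i][i] = 1.0;

    for (int col = 0; col < 4; ++col) {
        // Choose the row with the largest magnitude in this column as pivot.
        int pivot = col;
        for (int row = col + 1; row < 4; ++row)
            if (std::fabs(a[row][col]) > std::fabs(a[pivot][col]))
                pivot = row;
        if (std::fabs(a[pivot][col]) < 1e-12)
            return false;
        std::swap(a[pivot], a[col]);
        std::swap(inv[pivot], inv[col]);

        // Normalize the pivot row so the pivot element becomes 1.
        const double scale = 1.0 / a[col][col];
        for (int k = 0; k < 4; ++k) {
            a[col][k] *= scale;
            inv[col][k] *= scale;
        }

        // Clear this column in every other row.
        for (int row = 0; row < 4; ++row) {
            if (row == col)
                continue;
            const double factor = a[row][col];
            for (int k = 0; k < 4; ++k) {
                a[row][k] -= factor * a[col][k];
                inv[row][k] -= factor * inv[col][k];
            }
        }
    }
    out = inv;
    return true;
}
```

One way to soften the CPU hit is to compute the inverse once per object per frame and upload it as a uniform, rather than recomputing it anywhere it's needed.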

Fortunately, I was able to hack it to work well enough for now:

If we want to get fancier with the 3d graphics side of things, however, I'll probably need to modify JUCE's internals to accept later versions of GLSL. That would be a non-trivial task (it might involve folks on JUCE's team), so I'd like to avoid it in the interest of speed for this project.

Week 5-6

Ironically, making the actual GUI (just the knobs, labels, and layout) involved a lot more JUCE messery than I expected. JUCE provides simple ways to get a default look-and-feel, but we wanted something a little unique, so I had to re-invent a few graphics wheels to do seemingly simple things like drawing labels at certain positions relative to knobs, having rounded corners on the visualizer window, etc.

I drastically underestimated how much time this would take given idiosyncrasies within the library: big things like customizing the look and feel of sliders, medium things like positioning labels (which is not the simple thing it might seem to be!), and small things like adhering to the correct UTF-8 standards so this code will compile on all compilers. However, at least this kind of thing is now architected correctly and easy to modify:

Week 7

First, I upgraded to JUCE 5 from JUCE 4, which took longer than I would have liked. They've changed many of the internals of their libraries, and those changes broke my code in a few places.

I also added proper support for mono/stereo channel output for routing to JACK. This involved using a rather non-customizable JUCE UI element called a ComboBox, but it's the best solution for now:

Unfortunately, this channel selection process must be done manually for each new instance of the plug-in (i.e. the plug-in cannot read the output channels from the DAW itself because that information is not actually possible to know for sure - it's too DAW-dependent). The good news, though, is that these channel settings persist across DAW launches, so it'd be easy for us to set up template projects within each DAW that include enough instances of this plug-in to cover all channels. So, getting started with an example should be easy for any artist, and for anyone who wants to do something more custom.

Lastly, I connected the whole system so that the ambisonic/OSC data and the visualizer are properly linked through a simple API:
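The plug-in's real API isn't reproduced on this page, but as a rough illustration of the OSC side, here's a sketch of how a source position could be packed into an OSC 1.0 message by hand. The function names and the example address `/source/1/xyz` are hypothetical and are not Envelop's actual address scheme; only the byte layout (4-byte-aligned strings, big-endian 32-bit floats) comes from the OSC format itself.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// OSC strings are NUL-terminated and padded so their total length is a
// multiple of 4 bytes (a string whose length is already a multiple of 4
// gets four NUL bytes).
inline void appendPaddedString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    const std::size_t padding = 4 - (s.size() % 4);
    out.insert(out.end(), padding, std::uint8_t{0});
}

// OSC float arguments are 32-bit IEEE 754 values in big-endian byte order.
inline void appendFloat32(std::vector<std::uint8_t>& out, float value) {
    std::uint32_t bits = 0;
    std::memcpy(&bits, &value, sizeof bits);
    out.push_back(static_cast<std::uint8_t>((bits >> 24) & 0xFF));
    out.push_back(static_cast<std::uint8_t>((bits >> 16) & 0xFF));
    out.push_back(static_cast<std::uint8_t>((bits >> 8) & 0xFF));
    out.push_back(static_cast<std::uint8_t>(bits & 0xFF));
}

// Hypothetical helper: builds one message carrying an (x, y, z) position.
inline std::vector<std::uint8_t> encodePositionMessage(const std::string& address,
                                                       float x, float y, float z) {
    std::vector<std::uint8_t> packet;
    appendPaddedString(packet, address);  // address pattern
    appendPaddedString(packet, ",fff");   // type tags: three floats
    appendFloat32(packet, x);
    appendFloat32(packet, y);
    appendFloat32(packet, z);
    return packet;
}
```

The resulting bytes would go out as a single UDP datagram; the same position values can be handed straight to the visualizer so both stay in sync.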

Week 8

Here's a video of it all working within Logic Pro X. Sorry that it's laggy. The actual plug-in is smooth; the video's just lagging because of recording full-screen video in Quicktime with the audio piped through Soundflower.

I added a quick, helpful feature where the sound-source moves around the 3d-space with a "tail" behind it so that it's clear at any moment how fast it's moving and which path it's been following. This was a simple feature to add that made a big difference. If I were to implement this a better way, I'd do it using a custom particle emitter from the sound source's current position, leaving a trail of particles in its wake. It'd look and feel better than it does now.
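A tail like this boils down to remembering the last N positions and drawing them from newest to oldest. Here's a minimal sketch of that bookkeeping as a fixed-size ring buffer. The `PositionTrail` and `Point3` names are made up for illustration and this isn't the plug-in's actual code.

```cpp
#include <array>
#include <cstddef>

// Hypothetical position type for illustration.
struct Point3 { float x, y, z; };

// Fixed-capacity history of recent positions. Once full, each new point
// overwrites the oldest one, so memory use never grows.
template <std::size_t Capacity>
class PositionTrail {
public:
    void push(const Point3& p) {
        points_[head_] = p;
        head_ = (head_ + 1) % Capacity;
        if (count_ < Capacity)
            ++count_;
    }

    std::size_t size() const { return count_; }

    // age == 0 is the newest point; age must be less than size().
    const Point3& fromNewest(std::size_t age) const {
        return points_[(head_ + Capacity - 1 - age) % Capacity];
    }

private:
    std::array<Point3, Capacity> points_{};
    std::size_t head_ = 0;   // next slot to write
    std::size_t count_ = 0;  // number of valid points stored
};
```

When drawing, walking `fromNewest(0)` through `fromNewest(size() - 1)` and fading the opacity with age gives the sense of speed and direction: fast motion spreads the points out, slow motion bunches them up.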

I added another feature where the size of the sound-source inside the visualizer is mapped to the RMS volume smoothed over the last 100 ms or so of audio from the plug-in. This feature is also really helpful because it makes it clear where the object is in space when certain sounds occur. There are a lot more graphical techniques that I could use to emphasize feature-detection on the sounds, but this is the lowest-hanging-fruit of them, so I opted for it first.
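For the curious, here's a sketch of one way to get a "smoothed RMS over the last 100 ms" value: keep a circular buffer of squared samples plus a running sum, so each new sample costs constant time. The class name, the linear radius mapping, and its parameters are my assumptions for illustration; the plug-in's real smoothing may differ.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Sliding-window RMS over roughly `windowSeconds` of audio.
class WindowedRms {
public:
    WindowedRms(double sampleRate, double windowSeconds)
        : squares_(std::max<std::size_t>(
              1, static_cast<std::size_t>(sampleRate * windowSeconds)), 0.0) {}

    // Feed one sample, get back the RMS of the most recent window.
    float process(float sample) {
        const double squared = static_cast<double>(sample) * sample;
        sum_ += squared - squares_[index_];  // swap oldest square for newest
        squares_[index_] = squared;
        index_ = (index_ + 1) % squares_.size();
        // Guard against tiny negative sums caused by rounding.
        const double mean = std::max(0.0, sum_ / static_cast<double>(squares_.size()));
        return static_cast<float>(std::sqrt(mean));
    }

private:
    std::vector<double> squares_;
    std::size_t index_ = 0;
    double sum_ = 0.0;
};

// Hypothetical mapping from level to on-screen size: linear between
// minRadius (silence) and maxRadius (RMS of 1.0 or above).
inline float radiusForLevel(float rms, float minRadius, float maxRadius) {
    const float level = std::min(1.0f, std::max(0.0f, rms));
    return minRadius + (maxRadius - minRadius) * level;
}
```

At a 44.1 kHz sample rate, `WindowedRms(44100.0, 0.1)` would cover about 4410 samples, and the visualizer would read the latest value once per frame.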

Then, I made this website to document all this stuff.

Here's a .zip of the code, if you're curious: repository

I continue to work alongside the Envelop team, and we'll soon enter a testing phase before aiming for a September release of this plug-in alongside their other software. There are a few more features to add, but given how it's all architected, adding most things is extremely quick now. We're almost there!