The Plan: an electroacoustic piece for solo cello and electronics. It will incorporate adc input combined with effects processing (reverb, echo, added chords, and changes in sound intensity). The piece might be titled “The Crane”; it will be a distant variation on the Korean folk song “Arirang.”

I intend to use Wekinator and to create crane-like gestures that go along with the piece. Whenever I execute a gesture, a programmed sound plays back (the sound of birds in flight, specific cello motives, etc.). Since timing is important, the gestures will most likely be composed into the piece. The gestures may emulate the traditional Korean crane dance.

Here is my starting code for the audio portion of the project. It takes adc input and applies reverb and echo effects.

The WAV file contains snippets that show where I plan to take the piece composition-wise. I did a quick take to hear how the cello might sound through the code. First, I play the scale I plan to use in the piece: the Yo scale on D (pitches D-E-G-A-B), a pentatonic scale found in much Japanese and other Asian music. Next, I play the folk song I plan to write variations on, “Arirang,” in its original form. Then I demonstrate a few cello techniques I plan to incorporate into the piece: two-finger tremolo harmonics on one string, harmonics crossing all four strings, bow-crossing tremolo on two strings, pizzicato strums in an upward then downward motion, ricochet bowing, and lastly sul ponticello.

For Milestone 2, I have nearly finished the composition. A few minor adjustments remain: possibly adding a pizzicato section and, of course, fixing the complicated cello notation.

I have stuck to the idea of writing a variation on the celebrated Korean folk song “Arirang,” while also incorporating the theme of a crane into the piece and performance.

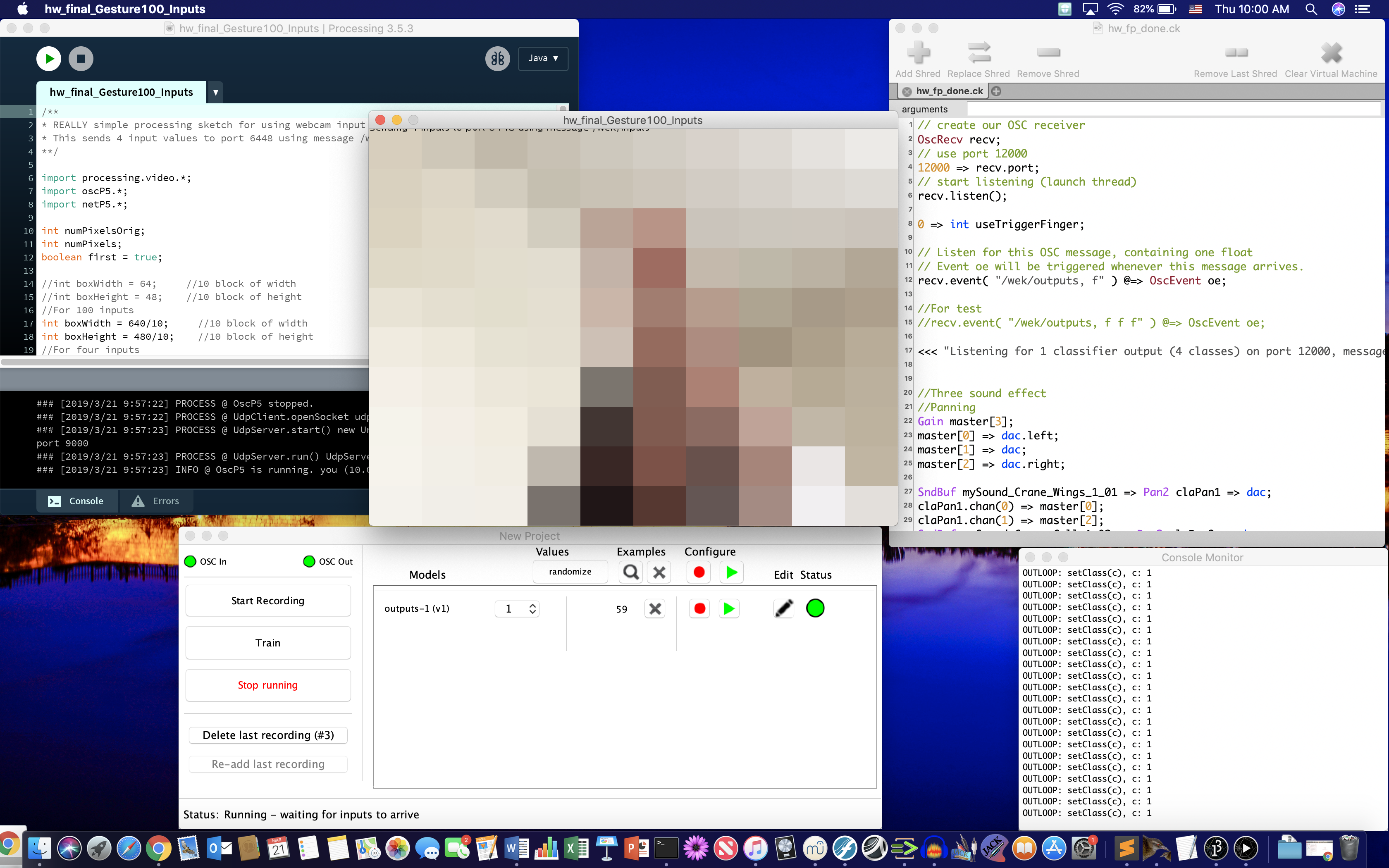

Code-wise, I got the Processing-Wekinator-ChucK pipeline to work! I am using webcam tracking and a 4-input grid that maps gestures and their locations to floats. Depending on the gesture and its location, the output is above 0.6, between 0.3 and 0.6, or below 0.3, which produces a large, moderate, or minimal increase in gain, respectively.
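The three-band mapping can be illustrated with a minimal sketch, written in Python rather than ChucK purely for illustration. It assumes the Wekinator output arrives as a single float in [0, 1]. The gain increments (0.3, 0.15, 0.05), the gain ceiling, and the choice to put the boundary values 0.3 and 0.6 in the moderate band are all hypothetical, since the actual values are not given here.

```python
# Hypothetical gain increments for each band; not the values used in the piece.
HIGH_STEP = 0.3
MODERATE_STEP = 0.15
MINIMAL_STEP = 0.05


def gain_increase(wek_output: float) -> float:
    """Map a Wekinator output float to a gain increment.

    Bands: above 0.6 -> high, 0.3 to 0.6 -> moderate, below 0.3 -> minimal.
    The boundary values 0.3 and 0.6 fall in the moderate band (an assumption).
    """
    if wek_output > 0.6:
        return HIGH_STEP
    if wek_output >= 0.3:
        return MODERATE_STEP
    return MINIMAL_STEP


def apply_gesture(current_gain: float, wek_output: float, max_gain: float = 1.0) -> float:
    """Raise the gain by the band's increment, clamped to a hypothetical ceiling."""
    return min(current_gain + gain_increase(wek_output), max_gain)


if __name__ == "__main__":
    gain = 0.2
    for value in (0.1, 0.45, 0.9):
        gain = apply_gesture(gain, value)
        print(f"output={value:.2f} -> gain={gain:.2f}")
```

Clamping the gain keeps repeated high-band gestures from driving the output into clipping; in ChucK the same comparison would typically run inside the OSC receive loop.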

I am still fine-tuning exactly when to use the gestures and effects within the piece. Note that the wav provides only an excerpt of the piece (the introduction); I am saving the rest for my live performance.

“The Crane” is an effort to embody the crane, an ethereal being, through audio-visual performance. Written for solo cello & electronics and with the desire to emulate crane movement, it incorporates unconventional cello techniques and gestural control of the accompaniment through Wekinator. The piece is a tribute to Korean music, culture, and dance and can be recognized as a distant variation on the nation’s beloved folk song, “Arirang.” “The Crane” strives for a self-sustaining performance in which the human and the computer play equal roles as performers.

In essence, "The Crane" revolves around two systems.

The first system is relatively static: the cello feeds continuously into ChucK as adc input, and the code applies reverb and echo to give the piece its light, translucent touch.
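The ChucK chain itself is not reproduced in this post. As an offline illustration of what the echo stage does to the cello signal, here is a minimal Python sketch of a feedback delay with a dry/wet mix. The function and parameter names and defaults are hypothetical, and the reverb stage (in ChucK usually a UGen such as JCRev or NRev) is not modeled.

```python
def feedback_echo(samples, delay, feedback=0.4, mix=0.3):
    """Apply a feedback delay (echo) to a list of float samples.

    delay    -- echo time in samples (hypothetical parameter)
    feedback -- fraction of the delayed signal written back into the delay line
    mix      -- dry/wet balance: 0.0 is fully dry, 1.0 is fully wet
    """
    if delay < 1:
        raise ValueError("delay must be at least one sample")
    buffer = [0.0] * delay  # circular delay line holding the last `delay` writes
    index = 0
    out = []
    for x in samples:
        delayed = buffer[index]                  # value written `delay` samples ago
        buffer[index] = x + feedback * delayed   # input plus feedback re-enters the line
        index = (index + 1) % delay
        out.append((1.0 - mix) * x + mix * delayed)
    return out


if __name__ == "__main__":
    # A single impulse shows echoes every 2 samples, each scaled by the feedback.
    print(feedback_echo([1.0, 0, 0, 0, 0, 0, 0], delay=2, feedback=0.5, mix=0.5))
```

At a 44.1 kHz sample rate, a quarter-second echo would correspond to `delay=11025`; lower `feedback` values give fewer audible repeats.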

The second system lets me call in accompanying sounds, including crane calls and the fluttering of wings, through crane-like gestures. I use the Processing-Wekinator-ChucK pipeline, which allows me to trigger these sounds through learned gestures. In Processing, I use my webcam and a 10x10 detection grid to capture input. In Wekinator, the machine learning software, I assign gestures to locations, and these locations become the classifier's classes. Finally, in ChucK, I map specific gesture locations to specific wav files, and the wav files are panned.
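A minimal Python sketch of the final step, assuming Wekinator sends an integer class label for each gesture location. The file names, class numbers, and pan positions are hypothetical, and the equal-power panning law is a common choice shown for illustration, not necessarily what ChucK's Pan2 does in the actual patch.

```python
import math
from typing import Dict, Optional, Tuple

# Hypothetical mapping: class label (gesture location) -> (wav file, pan in [-1, 1]).
SOUND_MAP: Dict[int, Tuple[str, float]] = {
    1: ("crane_call.wav", -0.8),
    2: ("wing_flutter.wav", 0.8),
    3: ("crane_call_distant.wav", 0.0),
}


def equal_power_gains(pan: float) -> Tuple[float, float]:
    """Return (left, right) gains for a pan value in [-1, 1] using the equal-power law."""
    pan = max(-1.0, min(1.0, pan))
    theta = (pan + 1.0) * math.pi / 4.0  # maps [-1, 1] onto [0, pi/2]
    return math.cos(theta), math.sin(theta)


def trigger(class_label: int) -> Optional[Tuple[str, float, float]]:
    """Look up the wav file and stereo gains for a classified gesture location.

    Returns None for labels with no assigned sound.
    """
    entry = SOUND_MAP.get(class_label)
    if entry is None:
        return None
    filename, pan = entry
    left, right = equal_power_gains(pan)
    return filename, left, right


if __name__ == "__main__":
    for label in (1, 2, 4):
        print(label, trigger(label))
```

The equal-power law keeps left² + right² constant, so a sound panned to the center is not perceived as quieter than one panned hard left or right.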