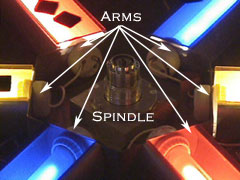

MUSICAL CONTROLLERS

While on tour for her latest work, "Happiness", Laurie Anderson was kind enough to stop by Stanford's Center for Computer Research in Music and Acoustics (CCRMA) for an informal discussion on music, creativity, and the creation of new electronic musical instruments. Thanks to the work of Max Mathews and Bill Verplank, CCRMA has developed a strong series of classes devoted to the conceptualization, design, and creation of new electronic musical instruments. While there, Laurie spent time visiting with students and experimenting with their final projects for the class. Above, she can be seen playing the Circular Optical Object Locator (COOL), a collaborative music-making device designed by David Merrill, Jocelyn Robert, and myself. In addition to being a part of the Stanford Community Day festivities, the COOL was accepted for presentation at the second annual conference on New Interfaces for Musical Expression, held at MIT's Media Lab Europe in Dublin, Ireland. (Read our paper)

Purpose

The COOL was conceived as a collaborative and cooperative music-making device that is essentially non-virtuosic. In other words, you don't need much musical sophistication to play it. It is made of a large, transparent acrylic disc that can be rotated by hand above a radial array of six colored lights. Each of the six lights represents an independent sound or instrument, so in a sense the light table is made up of six sub-instruments.

Performers stand around the table with handfuls of opaque squares, which they place wherever they wish on the disc as it spins. The placement of each object determines the pitch of the resulting note. For example, objects placed near the center of the disc might be interpreted as low notes, with the pitch rising as pieces are placed further and further from the center. As the disc carries the opaque squares in a circle over the lights, they obstruct the light shining from below.
Hanging above the table is a simple computer video camera that looks down on the scene and, through a process described below, converts the images into sound.

In addition to controlling pitch, performers can change the 'mode' in which the instrument plays at will, using two control panels, one on either side of the table. Each control panel is made up of three light dimmers and three mode buttons. Each of the six light dimmers controls the brightness of one of the six lights. Changes in brightness result in a corresponding change in the volume of the sound associated with that particular light. Pressing one of the six mode buttons changes two things simultaneously. The first is the key in which the instrument is playing. The second is the instrument or sound associated with each of the lights. So, for example, Mode 1 might result in piano, harpsichord, and violin sounds being used, whereas Mode 2 might result in electronic and synthesized sounds. By using the mode buttons creatively, performers can change the timbre and character of the music they create.

Construction

The COOL uses an inexpensive digital video camera to observe a rotating acrylic disc. The disc is free to rotate because it is mounted on a hard drive spindle. The spindle is welded onto a six-sided steel frame, to which six 12-inch-long incandescent lights are mounted. Opaque objects placed on the disc are detected by the camera during rotation because they obstruct the light. Using Microsoft's Vision SDK, David and I designed an image processing algorithm that detects when an object is obstructing the light. The software then determines the location of the obstruction and passes its coordinates (via Ethernet) to a second computer. The second computer uses those coordinates to trigger the playback of synthesized sounds. For example, yellow light #1 may represent the sound of a piano.
If an opaque object passes over yellow light #1 and through the section of the light that has been chosen to represent the note "middle C", then the software synthesizes the sound of a piano playing middle C and plays it through the speakers. In this way, the locations of the objects passing under the camera are used to generate music. Another way to think of it is that we have arbitrarily chosen to map specific locations on the table to specific notes in the scale.

An example of the way locations are mapped to notes by the software.

David and I constructed the steel hub (which anchors the hard drive spindle and each of the six lights) using the metal cutting and welding facilities at Stanford's Product Realization Lab (PRL). The PRL is a wonderful facility that gives any Stanford student access to a huge range of metalworking and woodworking tools.
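For readers who want to experiment with the idea, the location-to-note mapping described above can be sketched roughly in Python. This is only an illustration under stated assumptions, not the actual COOL software (which was built on Microsoft's Vision SDK and split across two computers). The brightness samples, the 0.5 threshold, the major scale, the eight pitch zones per light, and every instrument name beyond piano, harpsichord, and violin are placeholders chosen for the example.

```python
from dataclasses import dataclass

# Semitone offsets of a major scale within one octave (an assumed scale).
MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]


@dataclass(frozen=True)
class Mode:
    root_midi: int      # MIDI note of the key's tonic (60 = middle C)
    instruments: tuple  # one instrument name per light, six in total


# Hypothetical mode table: each mode button selects a key and six sounds.
MODES = {
    1: Mode(60, ("piano", "harpsichord", "violin", "flute", "cello", "harp")),
    2: Mode(62, ("synth 1", "synth 2", "synth 3", "synth 4", "synth 5", "synth 6")),
}


def find_obstructions(brightness, threshold=0.5):
    """Return indices of samples along one light that look covered (dark)."""
    return [i for i, level in enumerate(brightness) if level < threshold]


def position_to_note(position, n_positions, mode, n_zones=8):
    """Map a position along a light (0 = nearest the disc's center) to a MIDI note.

    The light is divided into n_zones equal zones; each zone is one scale step,
    so notes rise as objects sit further from the center.
    """
    zone = position * n_zones // n_positions
    octave, degree = divmod(zone, len(MAJOR_SCALE))
    return mode.root_midi + 12 * octave + MAJOR_SCALE[degree]


def notes_for_light(light_index, brightness, mode_number, dimmer=1.0):
    """Turn one light's brightness samples into (instrument, note, velocity) events.

    dimmer is the light's dimmer setting in [0, 1]; it scales the volume.
    """
    mode = MODES[mode_number]
    velocity = int(127 * dimmer)
    notes = []
    for position in find_obstructions(brightness):
        note = position_to_note(position, len(brightness), mode)
        if note not in notes:  # several dark samples in one zone give one note
            notes.append(note)
    return [(mode.instruments[light_index], note, velocity) for note in notes]


# Eight samples along light #1 (index 0), center first; two squares cover it.
samples = [1.0, 1.0, 0.1, 1.0, 1.0, 1.0, 1.0, 0.2]
events = notes_for_light(0, samples, mode_number=1)
print(events)
```

Here the innermost zone of light #1 in Mode 1 corresponds to a piano playing middle C (MIDI note 60), matching the example in the text. Switching `mode_number` changes both the key and the instrument assigned to each light, as the mode buttons do on the real instrument.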
