This project is a mobile version of an audiovisual granular synthesis instrument that I built as my final project for Music 256a at CCRMA. The inspiration behind the original version, along with early concept sketches and design details, is presented here. While the laptop version provides a large canvas and a great deal of RAM for audio files, the iPad's multitouch capabilities offer a much richer interactive experience. I extended the instrument to take advantage of these capabilities and added a social/networking component that lets users share their "scenes" and download the work of others.

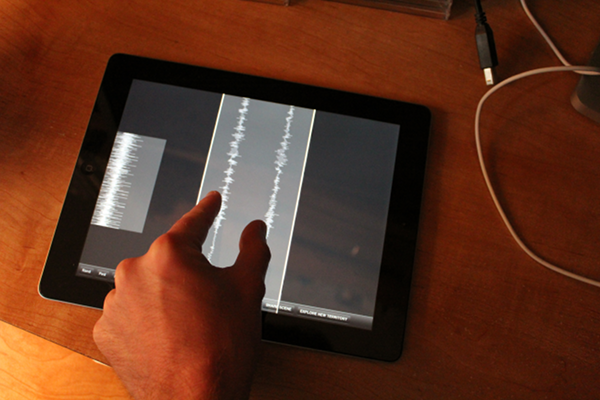

Users begin by loading a number of short sound files into an iTunes playlist called Borderlands. This list must be uploaded to the iPad to be recognized by the software. Upon loading, Borderlands reads all audio from this playlist and stores it in memory. This is the audio that will be granulated by the user.

Each audio file appears on screen as a rectangle that can be moved and resized using typical one- and two-finger gestures. Currently, the orientation of each rectangle may be toggled by touching the object with a third finger.

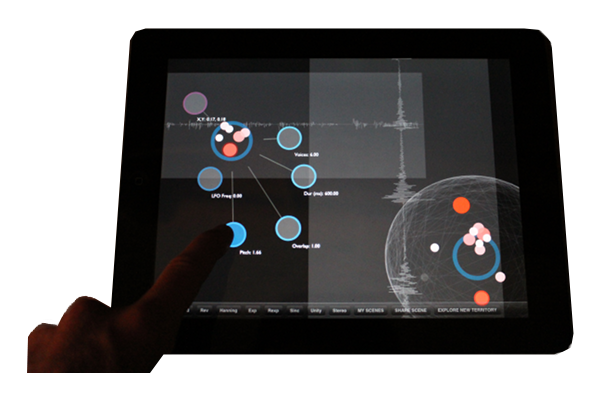

A grain cloud can be created by double-tapping anywhere on the interface. This immediately opens the cloud for editing, exposing a number of parameter regions around the cloud. Each region can be dragged or thrown between a hard-coded minimum and maximum value. Users may edit the number of voices in the cloud, the duration of each voice, the overlap of the voices, the playback rate or "pitch" of the voices, and the frequency of an LFO controlling the pitch of each voice. This view may be hidden by double-tapping the grain cloud again. Several discrete parameters are available as buttons at the bottom of the interface. These include the grain direction (random/forward/backward), the window type (Hann, exponential decay, exponential growth, sinc), and the stereo distribution of the grains. The stereo distribution has two modes: unity preserves the original spatialization of the audio file, while stereo pans grain voices left and right sequentially.
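The four window types listed above can be sketched roughly as follows. This is only an illustration, not the code used in Borderlands: the function name `grain_window`, the `DECAY_RATE` constant, and the choice to use only the main lobe of the sinc function are all assumptions made for the example.

```python
import math

DECAY_RATE = 5.0  # assumed steepness of the exponential windows (hypothetical value)


def grain_window(kind, n):
    """Return n amplitude values for one grain, shaped by the named window."""
    if n < 2:
        raise ValueError("a window needs at least two samples")
    out = []
    for i in range(n):
        t = i / (n - 1)  # normalized position: 0.0 at grain start, 1.0 at grain end
        if kind == "hann":
            w = 0.5 * (1.0 - math.cos(2.0 * math.pi * t))
        elif kind == "exp_decay":
            w = math.exp(-DECAY_RATE * t)  # loud attack, fading tail
        elif kind == "exp_growth":
            w = math.exp(-DECAY_RATE * (1.0 - t))  # mirror image of the decay
        elif kind == "sinc":
            x = 2.0 * t - 1.0  # main lobe only: x runs from -1 to 1
            w = 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)
        else:
            raise ValueError("unknown window type: " + kind)
        out.append(w)
    return out
```

Multiplying a grain's samples by one of these envelopes is what gives each window type its characteristic attack and release.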

A control over the X/Y randomness of each grain voice is also available and is indicated by a purple circular region. This parameter is not constrained to a particular value and may be tossed about the screen. It will bounce off the screen boundaries. If the user moves the entire cloud, however, the X/Y parameter is not constrained to the screen. This is deliberate and allows the user to maintain the random spatial distribution of a cloud, with the tradeoff that he or she may have to manipulate the cloud to get the X/Y control back on the screen.
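One way to picture the bouncing behavior of a tossed control is a per-axis reflection at the screen edges. The sketch below is an assumption about how such motion could be modeled, not the app's actual code; `step_bouncing_point` and `_reflect` are hypothetical names, and it covers only the free-flight case, not the case where the whole cloud is moved.

```python
def _reflect(p, v, limit):
    """Fold coordinate p back into [0, limit], flipping velocity v on each bounce."""
    while p < 0.0 or p > limit:
        if p < 0.0:
            p = -p
        else:
            p = 2.0 * limit - p
        v = -v
    return p, v


def step_bouncing_point(pos, vel, dt, width, height):
    """Advance a tossed control by dt seconds and bounce it off the screen edges."""
    x, y = pos
    vx, vy = vel
    x, vx = _reflect(x + vx * dt, vx, width)
    y, vy = _reflect(y + vy * dt, vy, height)
    return (x, y), (vx, vy)
```

For example, a control at x = 1020 moving right at 50 units per second on a 1024-unit-wide screen overshoots the edge by 46 units in one second, so it ends up 46 units inside the edge with its horizontal velocity reversed.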

Should the blue parameter regions move off screen, the user can simply double touch the cloud and rotate them back onto the screen. The cloud turns magenta when this touch is registered. Rotational velocity and damping are incorporated, so the release of the rotation feels natural.
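The "natural" release can be approximated by letting the rotation coast with an exponentially decaying angular velocity once the fingers lift. The following is a minimal sketch under that assumption; the function name `spin_down` and its parameters are hypothetical, and the app may use a different damping model.

```python
import math


def spin_down(angle, angular_velocity, damping, dt, steps):
    """Coast a released rotation for a number of frames.

    angle is in radians, angular_velocity in radians per second, damping is
    an assumed decay constant in 1/seconds, and dt is the frame time.
    """
    decay = math.exp(-damping * dt)  # per-frame velocity multiplier
    for _ in range(steps):
        angle += angular_velocity * dt
        angular_velocity *= decay
    return angle, angular_velocity
```

A larger damping constant makes the parameter regions settle sooner after release, while a value of zero would let them spin indefinitely.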

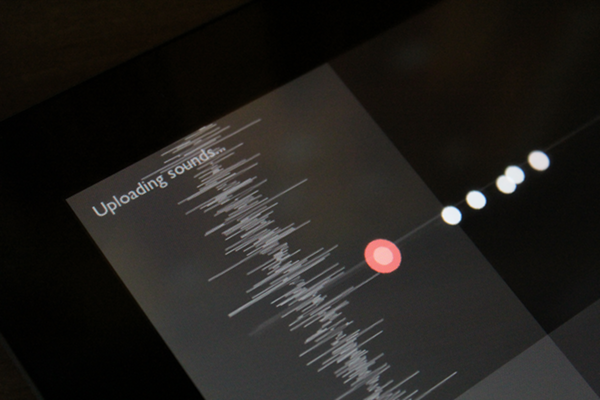

By pressing the "Share Scene" button at the bottom of the screen, the user can upload his or her current configuration to the Borderlands server, sharing sounds and grain clouds with others around the world.

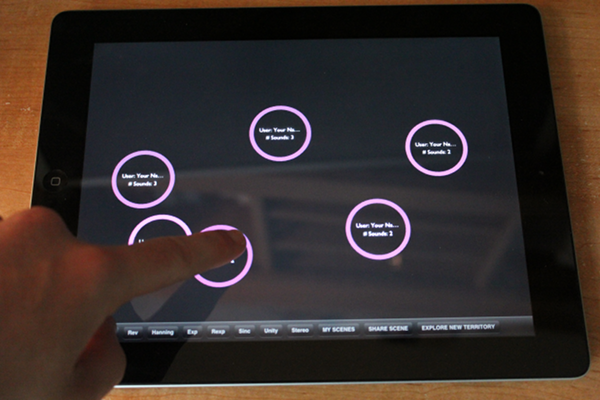

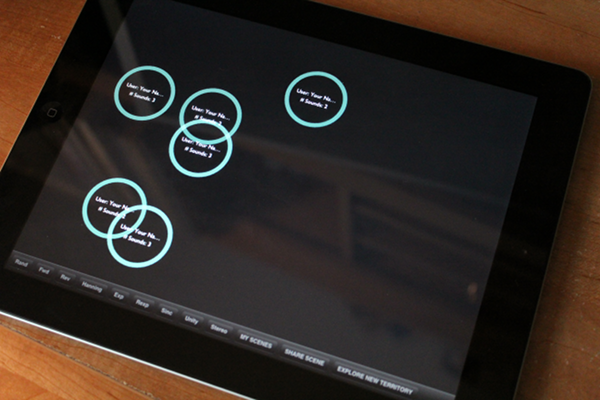

The user can view a random assortment of his or her own previously uploaded scenes by tapping the "My Scenes" button. A series of scene objects will pop up on the screen. These objects display information about the scene, including the username and the number of sounds it contains. When one of these objects is touched, it plays back a windowed five-second sample of the scene. This sample represents the last five seconds of audio heard before the selected scene was uploaded to the server. These objects can be dragged around and played polyphonically by tapping them.
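Keeping the last five seconds of output available at upload time is a natural fit for a ring buffer. The sketch below shows the general idea only; the class `RecentAudioBuffer`, the function `windowed_preview`, and the use of a linear fade as the "window" are assumptions for illustration, not details of the actual implementation.

```python
class RecentAudioBuffer:
    """Fixed-size ring buffer that always holds the most recent samples."""

    def __init__(self, capacity):
        # For five seconds of mono audio, capacity would be 5 * sample_rate.
        self._data = [0.0] * capacity
        self._write = 0   # index where the next sample will be stored
        self._filled = 0  # number of valid samples currently stored

    def push(self, samples):
        """Append new output samples, overwriting the oldest once full."""
        for s in samples:
            self._data[self._write] = s
            self._write = (self._write + 1) % len(self._data)
            self._filled = min(self._filled + 1, len(self._data))

    def snapshot(self):
        """Return the stored samples in chronological order, oldest first."""
        if self._filled < len(self._data):
            return self._data[:self._filled]
        return self._data[self._write:] + self._data[:self._write]


def windowed_preview(samples, fade_len):
    """Apply a linear fade-in and fade-out so the preview starts and ends silently."""
    out = list(samples)
    n = len(out)
    fade = min(fade_len, n // 2)
    for i in range(fade):
        gain = i / fade
        out[i] *= gain
        out[n - 1 - i] *= gain
    return out
```

Calling `snapshot()` at the moment of upload and passing the result through `windowed_preview` would yield a short clip that can be played back without clicks at its edges.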

An interesting scene may be downloaded by double-tapping the corresponding object. A large message appears to inform the user that a download is in progress.

Alternatively, the user can view a random assortment of scenes that others have uploaded by tapping "Explore New Territory." These objects are colored differently to distinguish them from those in the "My Scenes" area.

The incorporation of the social element provides an opportunity for users to discover new sounds and learn new techniques by observing the work of others.

Much more is in store for this app. Several key features that are in the pipeline include:

Mike Rotondo, Derek Tingle, and Mayank Sanganeria provided invaluable troubleshooting help as I built the server. Ge Wang provided great design guidance throughout the entire process. Thanks, guys!

This software was developed by Chris Carlson for Music 256b - Mobile Music at Stanford's Center for Computer Research in Music and Acoustics. Please feel free to contact him at carlsonc AT ccrma.stanford.edu