Strange Convo

== Introduction == | == Introduction == | ||

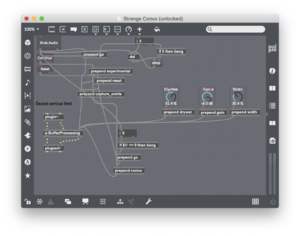

| − | Strange Convo is a Max4Live audio effect that uses pseudo-realtime convolution with a sampling paradigm to dynamically filter an audio signal. It allows an Ableton Live user to sample stereo audio into the device’s buffer which is then convolved with incoming audio. The convolved audio sounds similar to a resonator, as the common frequencies between the sample and the real-time input are amplified and the uncommon frequencies are attenuated. Unlike a resonator, however, the resonant frequencies change with the buffer sample’s timbral qualities through time. Strange Convo is particularly good at smearing two harmonic sources into ambient washes, or applying tonal/chordal qualities to percussive sounds. By encouraging experimentation with different sample sources, Strange Convo pushes users towards creative new applications of convolution cross-synthesis. | + | [[File:Strange_convo_gui.png|200px|thumb|right|Strange Convo M4L GUI.]] |

| + | Strange Convo is a Max4Live audio effect created by Tyler Sadlier that uses pseudo-realtime convolution with a sampling paradigm to dynamically filter an audio signal. It allows an Ableton Live user to sample stereo audio into the device’s buffer which is then convolved with incoming audio. The convolved audio sounds similar to a resonator, as the common frequencies between the sample and the real-time input are amplified and the uncommon frequencies are attenuated. Unlike a resonator, however, the resonant frequencies change with the buffer sample’s timbral qualities through time. Strange Convo is particularly good at smearing two harmonic sources into ambient washes, or applying tonal/chordal qualities to percussive sounds. By encouraging experimentation with different sample sources, Strange Convo pushes users towards creative new applications of convolution cross-synthesis. | ||

| − | [ | + | [https://vimeo.com/307047304 Strange Convo video documentation on Vimeo] |

| + | |||

| + | [https://stanford.box.com/s/8n2sx2zkzg2drm4e1tfhlz0mnytfrrze Strange Convo M4L Patch DL] | ||

== Phase 1: Researching == | == Phase 1: Researching == | ||

| + | |||

| + | I spent weeks 1-3 of the quarter researching potential project paths. I brainstormed several ideas, including an interactive synthesizer tutorial website made with WebAudio, an audio effect plugin that emulates the sound of a laptop mic run through Skype (a modulation, filter, and distortion multieffect), and some sort of unique application of convolution. With each of these potential routes, I looked into the surrounding literature and libraries online. For the WebAudio project, I spent several days experimenting with simple WebAudio features until I found a resource called Tone.js that makes synth and effects creation significantly easier/faster than just WebAudio itself. With my concurrent research into convolution techniques, I realized that I could easily record an impulse played back through Skype (and/or record using a laptop mic) to get a static snapshot of filtering artifacts (but not time-based irregularities). Capturing an IR through Skype could help me further uncover the artifacts' timbres and hopefully emulate the sound through other means. | ||

| + | |||

| + | In my further research of capturing impulse responses and convolution reverb techniques, I found several websites that recommended using non-impulse response sources in convolution reverbs for creative results. Most convolution reverbs utilize a file browser or a drag-and-drop paradigm for loading new impulses. It occurred to me that if a convolution effect did not prioritize using standard impulse responses, the method of loading or capturing the "impulse" could be completely different. It was at this point that I knew I would create a convolution effect with an interface built around sampling. Furthermore, the idea of convolving two real-time audio signals seemed like it would produce incredibly unusual results. I sought to research different applications of audio convolution that could help me with creating my effect. | ||

| + | |||

| + | I decided early on that I wanted to use Max4Live to build this effect. Luckily, I found a set of Max externals developed for a variety of convolution related tasks distributed under a modified BSD license: [http://eprints.hud.ac.uk/id/eprint/14897/ the HISSTools Impulse Response Toolbox.] After inspecting all of their externals in Max, I found that the multiconvolve~ object was most relevant to my design. This object combines "time domain convolution for the early portion of an IR with more efficient FFT-based partitioned convolution for the latter parts of the IR." Because of the multiconvolve~ object's design, it cannot convolve two real-time signals and needs the "IR" to be loaded into a Max buffer~ object. With this in mind, I began focusing exclusively on designing the sampling paradigm. | ||

== Phase 2: Designing and Prototyping == | == Phase 2: Designing and Prototyping == | ||

| + | [[File:early_m4l_structure.png|250px|thumb|right|Early stage design of the Max patch layout.]] | ||

| + | From weeks 4-6, I was in the design and prototyping stage. I began the design process by making a list of the basic features I wanted to implement as well as a second list for feature stretch goals. Starting from the most essential component, I knew that I needed a button for loading audio into the buffer. I also knew that I needed a way to reset the buffer in case undesirable results appeared. I chose to name these buttons Grab Audio and Reset respectively. I also knew I needed a Dry/Wet control to crossfade between the dry incoming signal and the wet convoluted signal. After I knew these three essential design elements, I began using Max to prototype where they would reside in M4L's GUI (without connecting to any underlying patching at this point). I prefer Ableton's standard layout of mixing utility parameters residing in the right corner of devices, so I chose to put the Dry/Wet knob in the bottom right corner. In thinking about standard Live device design, I decided I also needed Gain and Width parameters for my effect. | ||

| + | |||

| + | One I had the externally facing interface more or less designed, I began thinking about how the logic of the internal patcher and subpatchers would flow. To create this audio device, I had to learn both how audio routing in Max works as well as creating Max4Live GUIs. In my previous experiences with building Max patches for use with Ableton Live, I soley used MIDI CC to send parameter changes from my Max patches to pre-existing M4L Utility devices on Ableton tracks. I learned how to create M4L GUI objects that can send parameter data to their relevant objects. Organizing the flow of these parameters into subpatchers was essential to make developing easier for myself. I researched methods of subpatcher organization by opening and deconstructing many of the Max4Live audio effects and instruments that come with Ableton Live. Since I had previously briefly experimented with the audio functionality of multiconvolve~, my next new task was hooking up the GUI buttons to the recording and loading of the buffer~ objects into multiconvolve~. | ||

| + | |||

| + | == Phase 3: Building and Tuning == | ||

| + | [[File:Main_patcher.png|300px|thumb|right|Final version of main Max patcher.]] | ||

| + | From weeks 7-10, I finished developing the final build of Strange Convo and began video documentation. Finishing the M4L device meant fixing bugs with the GUI, adjusting the gain staging between subpatchers, improving legibility of object flow in Max, and tweaking internal parameters for better sounding output. | ||

| + | |||

| + | To prepare for video documentation, I wrote myself a rough script with all fundamental aspects of the audio device. I recorded the introduction portion in my dorm bedroom first. When the time came to record example use of the effect in Ableton, I leaned on my previous experimentations with the effect to show the best and most unique sounding results. I recorded my screen, my microphone, and my Ableton output simultaneously with QuickTime Player. I was actually surprised at how well a built in MacOS utility handled both the screen capture and narration. I sequenced and edited all clips in Final Cut Pro X. | ||

| + | |||

| + | == Reflections / Future Plans == | ||

| + | One of my initial visions for Strange Convo was to convolve two continuous signals in real-time. However, the design of multiconvolve~ and the limitations of Ableton’s audio routing in Max4Live complicate this goal. HISSTools’s multiconvolve~ external object requires the source or "IR” sound to be stored in (a) buffer~ object(s). My “Continuous” button was a fast workaround for implementing pseudo-realtime convolution between two stereo signals, as it continually records into the buffer and replaces previous audio. Since I capped the buffer length for this mode at 500ms, the “source” is delayed by half a second. Adding an online buffer length control to the user interface could make this feature more expressive and useful for different forms of sampled sources. | ||

| − | + | Unfortunately, the utility of this Continuous feature is limited by the fact that Ableton does not easily allow audio to be routed from a separate track to an M4L device. Since Strange Convo's buffer~ can currently only sample from the same track that it resides on, the result is an audio track convolving with itself from 500ms ago. This feature certainly sounds interesting with some sources, but implementing a workaround for streaming audio between tracks would open up many possibilities. Granulator II by Monolake is a granular synthesis Max4Live instrument that can sample audio from other tracks in an Ableton project. To accomplish this, Granulator II uses an “Input” audio effect that stores audio into a global buffer that the Max4Live instrument can load and access. My biggest hope for a new iteration of Strange Convo is to add this multi-device sampling functionality (along with buffer control) to enable convolution with other sources in near real-time. | |

| − | + | Some other parameters I hope to add to Strange Convo in the future are one-knob controls for Compression, High-cut, Low-cut, and Pre-delay of the wet convoluted audio. Gain and Width already provide a surprising amount of control for balancing the presence of the convoluted signal in the mix, but additional effects could make sculpting the sound significantly easier. Interesting offline controls could include an envelope control for the sampled audio, as the transient shape of the buffer significantly changes how the convolution reacts. However, this would necessitate reloading the buffer every time the parameter is changed (as well as copying new buffers from the original buffer). Other fun features I'd like to implement are an oscilloscope, trails mode (to prevent cut offs of old reverb when sampling new source), sample waveform display, grainstretch, and a gate/threshold at which audio is automatically grabbed. | |

Latest revision as of 12:00, 18 December 2018


Introduction

Strange Convo is a Max4Live audio effect created by Tyler Sadlier that uses pseudo-realtime convolution with a sampling paradigm to dynamically filter an audio signal. It allows an Ableton Live user to sample stereo audio into the device’s buffer, which is then convolved with incoming audio. The convolved audio sounds similar to a resonator, as the frequencies common to the sample and the real-time input are amplified and the frequencies they do not share are attenuated. Unlike a resonator, however, the resonant frequencies change over time with the timbral qualities of the buffer sample. Strange Convo is particularly good at smearing two harmonic sources into ambient washes, or applying tonal/chordal qualities to percussive sounds. By encouraging experimentation with different sample sources, Strange Convo pushes users towards creative new applications of convolution cross-synthesis.

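Strange Convo video documentation on Vimeo: https://vimeo.com/307047304

Strange Convo M4L Patch DL: https://stanford.box.com/s/8n2sx2zkzg2drm4e1tfhlz0mnytfrrze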
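The resonator-like behaviour described in the introduction follows from the fact that convolving two signals multiplies their spectra. The following is a minimal Python sketch of that idea, written outside of Max and not taken from the device; the helper names (`convolve`, `bin_magnitude`, `tone`) and the test tones are hypothetical choices made for illustration.

```python
import cmath
import math

def convolve(x, h):
    """Direct (time-domain) linear convolution of two sequences."""
    out = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            out[i + j] += xi * hj
    return out

def bin_magnitude(signal, k, size):
    """Magnitude of DFT bin k, treating the signal as zero-padded to `size`."""
    acc = sum(s * cmath.exp(-2j * math.pi * k * n / size)
              for n, s in enumerate(signal))
    return abs(acc)

def tone(cycles, n):
    """A sine wave completing `cycles` periods over n samples."""
    return [math.sin(2 * math.pi * cycles * i / n) for i in range(n)]

N = 64
# Stand-in for the sampled buffer: partials at 3 and 5 cycles.
sample = [a + b for a, b in zip(tone(3, N), tone(5, N))]
# Stand-in for the incoming audio: partials at 5 and 9 cycles.
live = [a + b for a, b in zip(tone(5, N), tone(9, N))]

wet = convolve(sample, live)

M = 2 * N  # DFT size large enough to hold the full convolution
shared = bin_magnitude(wet, 10, M)       # 5 cycles per N samples = bin 10 of M
only_sample = bin_magnitude(wet, 6, M)   # 3 cycles: present in the sample only
only_live = bin_magnitude(wet, 18, M)    # 9 cycles: present in the input only
```

Only the partial that both signals contain survives in `wet`; the partials that appear in just one of them are cancelled, which is the "common frequencies amplified, uncommon frequencies attenuated" effect in its simplest form.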

Phase 1: Researching

I spent weeks 1-3 of the quarter researching potential project paths. I brainstormed several ideas, including an interactive synthesizer tutorial website made with WebAudio, an audio effect plugin that emulates the sound of a laptop mic run through Skype (a modulation, filter, and distortion multieffect), and some sort of unique application of convolution. With each of these potential routes, I looked into the surrounding literature and libraries online. For the WebAudio project, I spent several days experimenting with simple WebAudio features until I found a resource called Tone.js that makes synth and effects creation significantly easier/faster than just WebAudio itself. With my concurrent research into convolution techniques, I realized that I could easily record an impulse played back through Skype (and/or record using a laptop mic) to get a static snapshot of filtering artifacts (but not time-based irregularities). Capturing an IR through Skype could help me further uncover the artifacts' timbres and hopefully emulate the sound through other means.

In my further research into capturing impulse responses and convolution reverb techniques, I found several websites that recommended using non-impulse-response sources in convolution reverbs for creative results. Most convolution reverbs utilize a file browser or a drag-and-drop paradigm for loading new impulses. It occurred to me that if a convolution effect did not prioritize using standard impulse responses, the method of loading or capturing the "impulse" could be completely different. It was at this point that I knew I would create a convolution effect with an interface built around sampling. Furthermore, the idea of convolving two real-time audio signals seemed like it would produce incredibly unusual results. I sought to research different applications of audio convolution that could help me with creating my effect.

I decided early on that I wanted to use Max4Live to build this effect. Luckily, I found a set of Max externals developed for a variety of convolution-related tasks and distributed under a modified BSD license: the HISSTools Impulse Response Toolbox (http://eprints.hud.ac.uk/id/eprint/14897/). After inspecting all of its externals in Max, I found that the multiconvolve~ object was most relevant to my design. This object combines "time domain convolution for the early portion of an IR with more efficient FFT-based partitioned convolution for the latter parts of the IR." Because of the multiconvolve~ object's design, it cannot convolve two real-time signals and needs the "IR" to be loaded into a Max buffer~ object. With this in mind, I began focusing exclusively on designing the sampling paradigm.
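The hybrid scheme quoted above can be pictured with a small offline Python sketch. This is not the multiconvolve~ implementation, whose internals are not reproduced here; it only assumes the general idea of handling the head of the IR in the time domain and the remaining partitions with FFTs. The function names and the `head_len` and `part_len` values are hypothetical, and the whole input is processed at once rather than in real-time blocks.

```python
import cmath
import math

def fft(x, inverse=False):
    """Recursive radix-2 FFT (length must be a power of two, unnormalized)."""
    n = len(x)
    if n == 1:
        return list(x)
    sign = 1 if inverse else -1
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def direct_convolve(x, h):
    """Time-domain convolution: cheap only when h is short."""
    out = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            out[i + j] += xi * hj
    return out

def fft_convolve(x, h):
    """Frequency-domain convolution via zero-padded FFTs."""
    out_len = len(x) + len(h) - 1
    size = 1
    while size < out_len:
        size *= 2
    X = fft(list(x) + [0.0] * (size - len(x)))
    H = fft(list(h) + [0.0] * (size - len(h)))
    y = fft([a * b for a, b in zip(X, H)], inverse=True)
    return [v.real / size for v in y[:out_len]]

def hybrid_convolve(x, ir, head_len=4, part_len=8):
    """Head of the IR in the time domain, later partitions via FFT."""
    out = [0.0] * (len(x) + len(ir) - 1)
    for i, v in enumerate(direct_convolve(x, ir[:head_len])):
        out[i] += v
    for start in range(head_len, len(ir), part_len):
        part = ir[start:start + part_len]
        # Each partition's result is delayed by its offset within the IR.
        for i, v in enumerate(fft_convolve(x, part)):
            out[start + i] += v
    return out

x = [math.sin(0.3 * i) for i in range(16)]   # stand-in for incoming audio
ir = [0.9 ** i for i in range(20)]           # stand-in for the sampled "IR"
reference = direct_convolve(x, ir)
result = hybrid_convolve(x, ir)
```

Summing the delayed partition outputs reproduces the full convolution, so `result` matches `reference` to within floating-point error. In a real-time setting, the appeal of the split is that the short time-domain head adds no block latency while the FFT partitions keep the cost of a long IR manageable.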

Phase 2: Designing and Prototyping

From weeks 4-6, I was in the design and prototyping stage. I began the design process by making a list of the basic features I wanted to implement as well as a second list of stretch-goal features. Starting from the most essential component, I knew that I needed a button for loading audio into the buffer. I also knew that I needed a way to reset the buffer in case undesirable results appeared. I chose to name these buttons Grab Audio and Reset respectively. I also knew I needed a Dry/Wet control to crossfade between the dry incoming signal and the wet convolved signal. Once I had settled on these three essential design elements, I began using Max to prototype where they would reside in M4L's GUI (without connecting to any underlying patching at this point). I prefer Ableton's standard layout of mixing utility parameters residing in the right corner of devices, so I chose to put the Dry/Wet knob in the bottom right corner. In thinking about standard Live device design, I decided I also needed Gain and Width parameters for my effect.
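To make the role of the three mixing controls concrete, here is a minimal per-sample Python sketch. It is an assumption-laden illustration rather than the patch's actual signal flow: it assumes Gain and Width act on the wet signal only, that Width is a mid/side scaling, that Gain is expressed in decibels, and that Dry/Wet is a linear crossfade. All function and parameter names are hypothetical.

```python
def db_to_linear(db):
    """Convert a gain in decibels to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)

def apply_width(left, right, width):
    """Mid/side width: 0.0 collapses to mono, 1.0 leaves the image unchanged."""
    mid = 0.5 * (left + right)
    side = 0.5 * (left - right) * width
    return mid + side, mid - side

def mix_sample(dry, wet, gain_db=0.0, width=1.0, dry_wet=0.5):
    """Combine one stereo dry sample with one stereo wet sample.

    dry and wet are (left, right) tuples; dry_wet runs from 0.0 (all dry)
    to 1.0 (all wet).
    """
    wet_l, wet_r = apply_width(wet[0], wet[1], width)
    gain = db_to_linear(gain_db)
    wet_l, wet_r = wet_l * gain, wet_r * gain
    out_l = (1.0 - dry_wet) * dry[0] + dry_wet * wet_l
    out_r = (1.0 - dry_wet) * dry[1] + dry_wet * wet_r
    return out_l, out_r

all_dry = mix_sample((1.0, -1.0), (0.2, 0.4), dry_wet=0.0)
mono_wet = mix_sample((1.0, -1.0), (0.2, 0.4), width=0.0, dry_wet=1.0)
quiet_wet = mix_sample((0.0, 0.0), (1.0, 1.0), gain_db=-20.0, dry_wet=1.0)
```

A linear crossfade is the simplest choice; an equal-power curve is a common alternative when the dry and wet signals are largely uncorrelated.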

Once I had the externally facing interface more or less designed, I began thinking about how the logic of the internal patcher and subpatchers would flow. To create this audio device, I had to learn both how audio routing in Max works and how to create Max4Live GUIs. In my previous experiences with building Max patches for use with Ableton Live, I solely used MIDI CC to send parameter changes from my Max patches to pre-existing M4L Utility devices on Ableton tracks. I learned how to create M4L GUI objects that can send parameter data to their relevant objects. Organizing the flow of these parameters into subpatchers was essential to make development easier for myself. I researched methods of subpatcher organization by opening and deconstructing many of the Max4Live audio effects and instruments that come with Ableton Live. Since I had already briefly experimented with the audio functionality of multiconvolve~, my next task was hooking up the GUI buttons to the recording of the buffer~ objects and their loading into multiconvolve~.

Phase 3: Building and Tuning

From weeks 7-10, I finished developing the final build of Strange Convo and began video documentation. Finishing the M4L device meant fixing bugs with the GUI, adjusting the gain staging between subpatchers, improving legibility of object flow in Max, and tweaking internal parameters for better-sounding output.

To prepare for video documentation, I wrote myself a rough script covering all fundamental aspects of the audio device. I recorded the introduction portion in my dorm bedroom first. When the time came to record example use of the effect in Ableton, I leaned on my previous experimentation with the effect to show the best and most unique-sounding results. I recorded my screen, my microphone, and my Ableton output simultaneously with QuickTime Player. I was actually surprised at how well a built-in macOS utility handled both the screen capture and narration. I sequenced and edited all clips in Final Cut Pro X.

Reflections / Future Plans

One of my initial visions for Strange Convo was to convolve two continuous signals in real-time. However, the design of multiconvolve~ and the limitations of Ableton’s audio routing in Max4Live complicate this goal. HISSTools’s multiconvolve~ external object requires the source or “IR” sound to be stored in one or more buffer~ objects. My “Continuous” button was a fast workaround for implementing pseudo-realtime convolution between two stereo signals, as it continually records into the buffer and replaces previous audio. Since I capped the buffer length for this mode at 500ms, the “source” is delayed by half a second. Adding an online buffer-length control to the user interface could make this feature more expressive and useful for different forms of sampled sources.
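One way to picture the Continuous mode is as a fixed-length rolling buffer whose oldest audio is overwritten as new audio arrives. The Python sketch below models only that bookkeeping, under the assumption of a single mono channel; it does not show how the Max patch records into buffer~ or reloads multiconvolve~, and the class name, method names, and sample rates are hypothetical.

```python
from collections import deque

class RollingSource:
    """Fixed-length buffer that always holds the most recent audio."""

    def __init__(self, sample_rate=44100, length_ms=500):
        # Number of samples that fit in the buffer (22050 at 44.1 kHz / 500 ms).
        self.capacity = sample_rate * length_ms // 1000
        # A deque with maxlen discards the oldest samples automatically.
        self._samples = deque([0.0] * self.capacity, maxlen=self.capacity)

    def record(self, block):
        """Append a block of incoming samples, replacing the oldest audio."""
        self._samples.extend(block)

    def snapshot(self):
        """Return the current contents, oldest sample first."""
        return list(self._samples)

# Low sample rate chosen only to keep the example small.
source = RollingSource(sample_rate=1000, length_ms=500)
source.record([float(i) for i in range(1200)])
current = source.snapshot()
oldest, newest = current[0], current[-1]
```

After 1200 samples have been recorded, the snapshot spans samples 700 through 1199, so the start of the "source" always trails the present by the buffer length. A user-facing buffer-length control would correspond to changing `length_ms`.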

Unfortunately, the utility of this Continuous feature is limited by the fact that Ableton does not easily allow audio to be routed from a separate track to an M4L device. Since Strange Convo's buffer~ can currently only sample from the same track that it resides on, the result is an audio track convolving with itself from 500ms ago. This feature certainly sounds interesting with some sources, but implementing a workaround for streaming audio between tracks would open up many possibilities. Granulator II by Monolake is a granular synthesis Max4Live instrument that can sample audio from other tracks in an Ableton project. To accomplish this, Granulator II uses an “Input” audio effect that stores audio into a global buffer that the Max4Live instrument can load and access. My biggest hope for a new iteration of Strange Convo is to add this multi-device sampling functionality (along with buffer control) to enable convolution with other sources in near real-time.

Some other parameters I hope to add to Strange Convo in the future are one-knob controls for Compression, High-cut, Low-cut, and Pre-delay of the wet convolved audio. Gain and Width already provide a surprising amount of control for balancing the presence of the convolved signal in the mix, but additional effects could make sculpting the sound significantly easier. Interesting offline controls could include an envelope control for the sampled audio, as the transient shape of the buffer significantly changes how the convolution reacts. However, this would necessitate reloading the buffer every time the parameter is changed (as well as copying new buffers from the original buffer). Other fun features I'd like to implement are an oscilloscope, a trails mode (to prevent cutoffs of old reverb when sampling a new source), a sample waveform display, grainstretch, and a gate/threshold at which audio is automatically grabbed.
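The envelope idea, and the reason it requires copying from the original buffer, can be sketched in a few lines of Python. This is a hypothetical illustration of the proposed feature, not existing device code; it assumes a simple linear fade-in and fade-out measured in samples, and the function and parameter names are invented for the example.

```python
def shape_buffer(original, attack, decay):
    """Return an enveloped copy of the sampled buffer.

    attack and decay are fade lengths in samples. The original list is left
    untouched so that later envelope settings can start from the same source.
    """
    n = len(original)
    shaped = []
    for i, sample in enumerate(original):
        fade_in = min(1.0, (i + 1) / attack) if attack > 0 else 1.0
        fade_out = min(1.0, (n - i) / decay) if decay > 0 else 1.0
        shaped.append(sample * fade_in * fade_out)
    return shaped

original = [1.0] * 10
shaped = shape_buffer(original, attack=4, decay=2)
```

Because the envelope is baked into the copy, every parameter change means producing a new shaped buffer from `original` and handing it to the convolver again, which is the reloading cost mentioned above.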