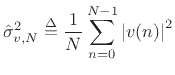

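The sample autocorrelation of a sequence $v(n)$, $n=0,1,2,\ldots,N-1$, may be defined by

$$\hat{r}_{v,N}(l) \triangleq \frac{1}{N-|l|}\sum_{n=0}^{N-1}\overline{v(n)}\,v(n+l), \qquad l=0,\pm 1,\pm 2,\ldots,\pm(N-1),$$

where $v(n)$ is taken to be zero outside the range $0\le n\le N-1$.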

In matlab, the sample autocorrelation of a vector `x` can be computed using the `xcorr` function (see footnote 7.3).

Example:

    octave:1> xcorr([1 1 1 1], 'unbiased')
    ans =
       1   1   1   1   1   1   1

The `xcorr` function also performs cross-correlation when given a second signal argument, and offers additional features with additional arguments. Type `help xcorr` for details.
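For readers without access to `xcorr`, the 'unbiased' computation can be sketched in a few lines of Python. This is only an illustration, assuming a real-valued input and normalization by the number of overlapping terms $N-|l|$; the helper name `sample_autocorr_unbiased` is hypothetical and is not part of matlab or Octave.

```python
def sample_autocorr_unbiased(v):
    """Unbiased sample autocorrelation of a real sequence v.

    Returns values for lags l = -(N-1), ..., 0, ..., N-1 (assumed ordering).
    """
    N = len(v)
    r = []
    for l in range(-(N - 1), N):
        # Sum the lagged products v[n] * v[n + l] wherever both samples exist.
        acc = sum(v[n] * v[n + l] for n in range(N) if 0 <= n + l < N)
        # Divide by the number of terms actually summed (N - |l|).
        r.append(acc / (N - abs(l)))
    return r


result = sample_autocorr_unbiased([1, 1, 1, 1])
print(result)
```

For the input `[1, 1, 1, 1]` every lag averages to one, matching the seven ones in the `xcorr` example above.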

Note that $\hat{r}_{v,N}(l)$ is the average of the lagged product $\overline{v(n)}\,v(n+l)$ over all available data. For white noise, this average approaches zero for $l\neq 0$ as the number of terms in the average increases. That is, we must have

$$\hat{r}_{v,N}(l) \approx \left\{\begin{array}{ll} \hat{\sigma}_{v,N}^2, & l=0 \\[5pt] 0, & l\neq 0 \end{array}\right. \triangleq \hat{\sigma}_{v,N}^2\,\delta(l) \tag{7.8}$$

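where

$$\hat{\sigma}_{v,N}^2 \triangleq \frac{1}{N}\sum_{n=0}^{N-1}\left|v(n)\right|^2 \tag{7.9}$$

is the sample variance of $v$.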
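As a rough numerical check of this approximation, the following Python sketch computes the unbiased sample autocorrelation of pseudorandom Gaussian noise at a few nonnegative lags for two data lengths. The unit variance, the seed, the lengths, and the helper name `sample_autocorr_unbiased` are all illustrative assumptions rather than anything specified in the text.

```python
import random


def sample_autocorr_unbiased(v, max_lag):
    """Unbiased sample autocorrelation of a real sequence for lags 0..max_lag."""
    N = len(v)
    return [
        sum(v[n] * v[n + l] for n in range(N - l)) / (N - l)
        for l in range(max_lag + 1)
    ]


random.seed(0)  # fixed seed so that repeated runs give the same numbers
for N in (100, 10000):
    # Zero-mean, unit-variance pseudorandom white noise (assumed test signal).
    v = [random.gauss(0.0, 1.0) for _ in range(N)]
    r = sample_autocorr_unbiased(v, max_lag=5)
    print(N, [round(x, 3) for x in r])
```

With the longer record, the lag-0 value should sit near the variance (here 1) while the other lags should be much closer to zero than with the shorter record, since their fluctuation shrinks roughly as $1/\sqrt{N}$.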

The plot in the upper left corner of Fig. 6.1 shows the sample autocorrelation obtained for 32 samples of pseudorandom numbers (synthetic random numbers). (For reasons to be discussed below, the sample autocorrelation has been multiplied by a Bartlett (triangular) window.) Proceeding down the left column, the results of averaging many such sample autocorrelations can be seen. The average sample autocorrelation function clearly approaches an impulse, as required by the definition of white noise. (The right column shows the Fourier transform of each sample autocorrelation function, which is a smoothed estimate of the power spectral density, as discussed in §6.6 below.)
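The averaging experiment described above can be imitated with a short Python sketch. It assumes Gaussian pseudorandom noise of unit variance, blocks of 32 samples, and a triangular window $w(l) = 1 - |l|/N$ applied to the unbiased estimate; these choices, the seed, and the function names are illustrative assumptions and may differ from what was used to produce the figure.

```python
import random


def windowed_autocorr(v):
    """Unbiased sample autocorrelation times a triangular window, lags 0..N-1."""
    N = len(v)
    out = []
    for l in range(N):
        unbiased = sum(v[n] * v[n + l] for n in range(N - l)) / (N - l)
        out.append((1.0 - l / N) * unbiased)  # assumed window: w(l) = 1 - l/N
    return out


def average_autocorr(num_blocks, N=32):
    """Average the windowed sample autocorrelations of num_blocks noise blocks."""
    avg = [0.0] * N
    for _ in range(num_blocks):
        v = [random.gauss(0.0, 1.0) for _ in range(N)]
        r = windowed_autocorr(v)
        avg = [a + x / num_blocks for a, x in zip(avg, r)]
    return avg


random.seed(1)  # fixed seed for repeatability
for K in (1, 16, 256):
    avg = average_autocorr(K)
    largest_offset = max(abs(x) for x in avg[1:])
    print(K, round(avg[0], 3), round(largest_offset, 3))
```

As the number of averaged blocks grows, the lag-0 value should settle near the variance while the largest nonzero-lag value shrinks, which is the impulse-like behavior described for the left column of the figure.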

For stationary stochastic processes $v(n)$, the sample autocorrelation function $\hat{r}_{v,N}(l)$ approaches the true autocorrelation function $r_v(l)$ in the limit as the number of observed samples $N$ goes to infinity, i.e.,

$$\lim_{N\to\infty}\hat{r}_{v,N}(l) = r_v(l). \tag{7.10}$$

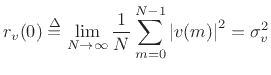

At lag $l=0$, the autocorrelation function of a zero-mean random process $v(n)$ reduces to the variance:

$$r_v(0) \triangleq E\{|v(n)|^2\} = \sigma_v^2$$

The variance can also be called the average power or mean square. The square root