MUS 220C - Research Seminar in Computer Generated Music

Juan-Pablo Caceres

An Electronic Composition Through Intelligent Glitch Control

Project Goal

To compose a piece built around an intelligent glitch effect VST plug-in that I will develop. The composition will use my current music software setup and potentially the LUMI as a control interface. The glitch effect should include various preset, quantized glitch articulations. The plug-in will first be prototyped in MATLAB, followed by a final C++ implementation that can be used in any VST host application.

Paper

Download the final paper: Aphasia.pdf

Composition

Download the WAVE Version: Aphasia.wav

Download the MP3 Version: Aphasia.mp3

Timeline

Week 1

Performed initial research into various effects and synthesis tools that can be used as a basis for a piece of music. After reviewing the work of BT and his album, This Binary Universe, I have settled upon a glitch effect loosely based upon the kinds of sounds he was able to attain using his Beat Stutter application.

Week 2

Began developing the initial idea and settled on an achievable timeline: two weeks of MATLAB development, three weeks of VST plug-in development, and the remaining time spent composing for the effect.

Week 3

Matlab Development: Say Hello to Dr. Glitchensteinzen

Just what the doctor ordered. Dr. Glitchensteinzen is a prototype MATLAB interface for my glitch plug-in development. I wrote an initial MATLAB function that takes in a waveform and performs simple glitches that can be quickly played back. This initial interface controls glitch spacing and length (in seconds), plus a parameter for the number of randomized glitches in the waveform. There are currently three glitching schemes:

1. Glitch - simply windows out portions of the original waveform

2. Panned Glitch - windows out portions of the original waveform and pans the glitched segments to provide a stereo effect.

3. Retrigger - general retriggering effect that samples a small portion of the waveform based on the glitch length and spacing and gives a stuttering effect, which can be panned between the stereo speakers or simply played left, right, or center.
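As a rough illustration, the first scheme could be sketched in C++ as follows. This is not the Dr. Glitchensteinzen code: the function name gateGlitch and its parameters are hypothetical, and it assumes that "spacing" is the time from the start of one glitch to the start of the next and "length" is the duration of each muted segment, both in seconds.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper (not taken from the MATLAB prototype): mutes the first
// `lengthSec` seconds of every `spacingSec`-second period of the signal.
std::vector<float> gateGlitch(const std::vector<float>& sig, double fs,
                              double spacingSec, double lengthSec)
{
    std::vector<float> out(sig);
    const std::size_t period = static_cast<std::size_t>(spacingSec * fs);
    const std::size_t gap    = static_cast<std::size_t>(lengthSec * fs);
    if (period == 0) return out;               // no valid spacing: pass through

    for (std::size_t n = 0; n < out.size(); ++n)
    {
        if (n % period < gap)
            out[n] = 0.0f;                     // inside a glitch: silence
    }
    return out;
}
```

The panned variant would apply the same test per sample but write the affected segments to the left and right channels with different gains.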

Week 4

Added a MATLAB script for working with a generic delay line.

% Delayline

%

% function [x] = delayGlitch(sig,fs,delayN,win,rptType,rptSpd)

%

% sig = input signal

% fs = sampling rate

% delayN = length of initial delay and silent spaces

% win = length of glitch window

% rptType= type of increment for read_pointers

% rptSpd = speed of repetition for read_pointers.

This MATLAB script feeds a signal into a delay line, windows it, and moves the read pointers along pre-specified read curves to play back the glitched audio.
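The same idea can be sketched in C++, the target language for the plug-in. The class below is a hypothetical illustration, not a port of delayGlitch: it assumes a circular buffer and the simplest possible read curve, a read pointer that advances one sample at a time and wraps after `win` samples. The rptType and rptSpd options are left out.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical sketch: a circular delay line whose read pointer loops over
// the most recent `win` samples to produce a stutter.
class RetriggerLine
{
public:
    explicit RetriggerLine(std::size_t size) : buf_(size > 0 ? size : 1, 0.0f) {}

    // Store one input sample at the write pointer, then advance the pointer.
    void write(float x)
    {
        buf_[writer_] = x;
        writer_ = (writer_ + 1) % buf_.size();
    }

    // Mark the last `win` samples written as the loop region.
    void trigger(std::size_t win)
    {
        win_    = std::min(std::max<std::size_t>(win, 1), buf_.size());
        start_  = (writer_ + buf_.size() - win_) % buf_.size();
        offset_ = 0;
    }

    // Return the next sample of the loop; the read offset wraps after `win`.
    float read()
    {
        const float y = buf_[(start_ + offset_) % buf_.size()];
        offset_ = (offset_ + 1) % win_;
        return y;
    }

private:
    std::vector<float> buf_;
    std::size_t writer_ = 0, start_ = 0, offset_ = 0, win_ = 1;
};
```

Other read curves would replace the fixed increment in read() with a different step rule.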

Week 5

Began work on the real-time VST plug-in, Aphasia. The basic VST framework has been set up in Xcode, along with a simple real-time implementation that creates pauses in the input stream based on the length and spacing arguments from Dr. Glitchensteinzen.

Week 6

VST code for Aphasia was implemented to provide all the basic functions from Dr. Glitchensteinzen. I have begun to explore various retriggering schemes and ways to implement tempo-based musical articulations in C++.

Week 7

Continued setting up Xcode as my coding environment and learned more about VST programming.

Week 8

VST development continued along with implementation of new coding techniques and a lot of learning about Xcode. I have also documented some important tasks that will be helpful for others when developing these types of projects.

1. Setting up Doxygen in Xcode! See my tutorial page here.

2. Setting up Shark for Code Optimization! See my tutorial page here.

Week 9

Timing and quantization were added to the VST development. The most important pieces of timing information I have gathered are BPM, PPQ and Time Signature. Using this info, I can calculate the number of samples required for sample-accurate quantization of the glitch effect. Below is my call to gather this data from the VstTimeInfo struct. More information on this struct can be found here.

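//-------------------------------------------------------------------------------------------------------------------------------------
// Copies the host's current timing information into local member variables.
void Aphasia::updateTiming()
{
    // Ask the host for musical position, tempo, time signature and bar start.
    VstTimeInfo* myTime = getTimeInfo ( kVstPpqPosValid | kVstTempoValid | kVstTimeSigValid | kVstBarsValid );

    if ( myTime )
    {
        m_fPPQ         = myTime->ppqPos;              // musical position, in quarter notes
        m_fBPM         = myTime->tempo;               // tempo, in beats per minute
        m_nTimeSigNum  = myTime->timeSigNumerator;
        m_nTimeSigDen  = myTime->timeSigDenominator;
        m_fBarStartPos = myTime->barStartPos;         // start of the current bar, in quarter notes
        m_fSamplePos   = myTime->samplePos;           // current position, in samples
    }
}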

The above updateTiming() function is called to update my own local variables (m_fPPQ, m_fBPM, and so on). These variables are then used for sample calculation. To quantize the glitch effect, I simply calculate how many samples I need to wait before the next bar (using PPQ) and implement the following function:

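//-------------------------------------------------------------------------------------------------------------------------------------
// Holds off the glitch until the wait before the next bar has elapsed.
void Retrigger::QuantizeToBar(float* inL, float* inR)
{
    if (m_nSampCount < m_nSampsToWait)
    {
        // still waiting: output sound, writing the input into the delay lines
        m_pDelayL[m_nWriter] = *inL;
        m_pDelayR[m_nWriter] = *inR;
    }
    else
    {
        // wait is over: reset the counter and leave quantize mode
        m_nSampCount = 0;
        m_bQuantize  = false;
    }
}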
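The wait count itself is not shown above. One plausible way to derive it from the timing values is sketched here. The helper samplesToNextBar is hypothetical and not part of Aphasia; it assumes ppqPos and barStartPos are both measured in quarter notes (as in the VST 2.x VstTimeInfo struct) and that the host sample rate is passed in separately. A bar lasts timeSigNum * 4 / timeSigDen quarter notes, and one quarter note lasts sampleRate * 60 / bpm samples.

```cpp
#include <cmath>

// Hypothetical helper: number of samples from the current position to the
// next bar line. Parameter names mirror the member variables used above.
long samplesToNextBar(double ppqPos, double barStartPos, double bpm,
                      int timeSigNum, int timeSigDen, double sampleRate)
{
    if (bpm <= 0.0 || timeSigNum <= 0 || timeSigDen <= 0)
        return 0;                                   // no usable timing info

    const double quartersPerBar    = timeSigNum * 4.0 / timeSigDen;
    const double samplesPerQuarter = sampleRate * 60.0 / bpm;

    // Quarter notes remaining until the end of the current bar.
    double quartersLeft = barStartPos + quartersPerBar - ppqPos;
    if (quartersLeft < 0.0)
        quartersLeft = 0.0;

    return std::lround(quartersLeft * samplesPerQuarter);
}
```

For example, at 120 BPM in 4/4 at 44.1 kHz, one beat into a bar (ppqPos = 5.0, barStartPos = 4.0), three quarter notes remain, so the function returns 66150 samples. Note that exactly on a bar line this sketch waits a full bar; a real plug-in might prefer to return 0 there.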

In addition to this, I added functionality for Retriggering as opposed to simple silence insertion or gating. This will be used in my articulated glitch effects next week.

Week 10

Finalized VST Development:

Composed a short piece to display some of the sonic possibilities with the plug-in in its current state. Feel free to download the audio below:

Download the WAVE Version: Aphasia.wav

Download the MP3 Version: Aphasia.mp3

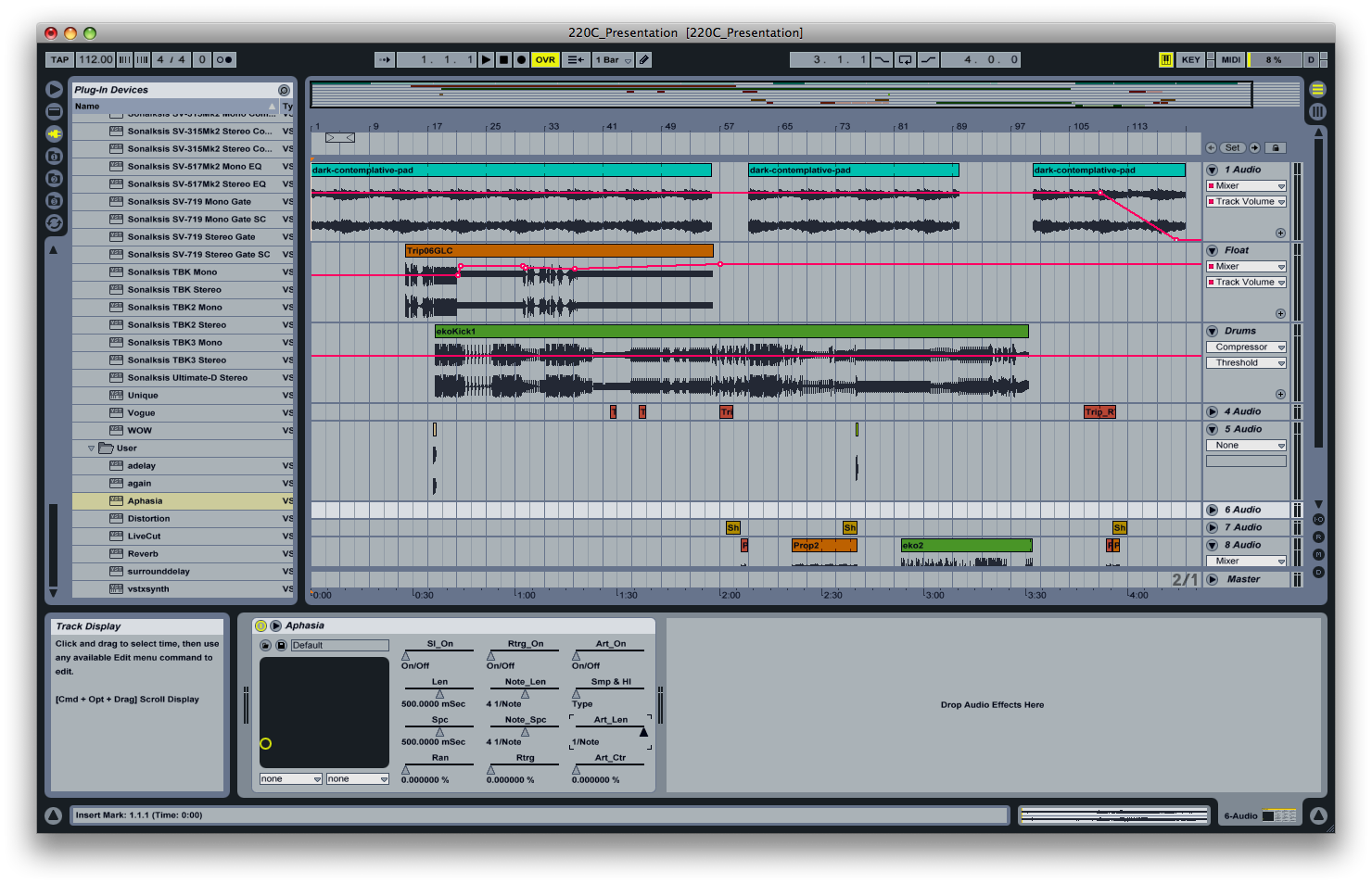

This piece was composed using only raw, royalty-free audio samples and loops in Ableton Live. See a picture of the workspace below: