|

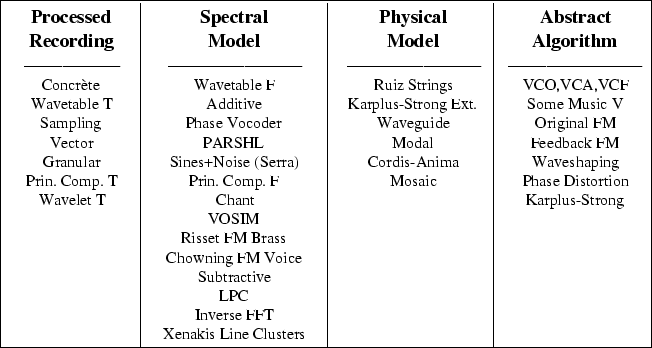

The previous historical sketch focused more on synthesis engines than on techniques used to synthesize sound. In this section, we organize today's best-known synthesis techniques into the categories displayed in Fig. 2.

Some of these techniques will now be briefly discussed. Space limitations prevent detailed discussions and references for all techniques. The reader is referred to [16,14,15] and recent issues of the Computer Music Journal for further reading and references.

Sampling synthesis can be considered a descendant of the tape-based musique concrète. Jean-Claude Risset noted: ``Musique concrète did open an infinite world of sounds for music, but the control and manipulation one could exert upon them was rudimentary with respect to the richness of the sounds, which favored an esthetics of collage'' [13]. The same criticism can be applied to sampling synthesizers three decades later. A recorded sound can be transformed into any other sound by a linear transformation (some linear, time-varying filter). A loss of generality is therefore not inherent in the sampling approach. To date, however, highly general transformations of recorded material have not yet been introduced into the synthesis repertoire, except in a few disconnected research efforts. A major problem with sampling synthesizers, which try so hard to imitate existing instruments, is their lack of what we might call ``prosodic rules'' for musical phrasing. Individual notes may sound like realistic reproductions of traditional instrument tones. but when these tones are played in sequence, all of the note-to-note transitions--so important in instruments such as saxophones and the human voice--are missing. Speech scientists recognized long ago that juxtaposing phonemes made for brittle speech. Today's samplers make brittle, frozen music.

Derivative techniques such as granular synthesis are yielding significant new colors for the sonic palette. It can be argued also that spectral-modeling and wavelet-based synthesis are sampling methods with powerful transformation capabilities in the frequency domain.

``Wavetable T'' denotes time-domain wavetable synthesis; this is the classic technique in which an arbitrary waveshape stored in memory is repeatedly read to create a periodic sound. The original Music V oscillator supported this synthesis type, and to approximate a real (periodic) instrument tone, one could snip out a period of a recorded sound and load the table with it. The oscillator output is invariably multiplied by an amplitude envelope. Of course, we quickly discovered that we also needed vibrato, and it often helped to add several wavetable units together with independent vibrato and slightly detuned fundamental frequencies in order to obtain a chorus-like effect. Cross-fading between wavetables was a convenient way to obtain an evolving timbre.

More than anything else, wavetable synthesis taught us that ``periodic'' sounds are generally poor sounds. Exact repetition is rarely musical. Electronic organs (the first digital one being the Allen organ) added tremolo, vibrato, and the Leslie (multipath delay and Doppler-shifting via spinning loudspeakers) as sonic post-processing in order to escape ear-fatiguing, periodic sounds.

``Wavetable F'' denotes wavetable synthesis again, but as approached from the frequency domain. In this case, a desired harmonic spectrum is created (either a priori or from the results of a spectrum analysis) and an inverse Fourier series creates the period for the table. This approach, with interpolation among timbres, was used by Michael McNabb in the creation of Dreamsong [11]. It was used years earlier in psychoacoustics research by John Grey. An advantage of spectral-based wavetable synthesis is that phase is readily normalized, making interpolation between different wavetable timbres smoother and more predictable.

Vector synthesis is essentially multiple-wavetable synthesis with interpolation (and more recently, chaining of wavetables). This technique, with four-way interpolation, is used in the Korg Wavestation, for example. It points out a way that sampling synthesis can be made sensitive to an arbitrary number of performance control parameters. That is, given a sufficient number of wavetables as well as means for chaining, enveloping, and forming arbitrary linear combinations (interpolations) among them, it is possible to provide any number of expressive control parameters. Sampling synthesis need not be restricted to static table playback with looping and post-filtering. In principle, many wavetables may be necessary along each parameter dimension, and it is difficult in general to orthogonalize the dimensions of control. In any case, a great deal of memory is needed to make a multidimensional timbre space using tables. Perhaps a physical model is worth a thousand wavetables.

Principal-components synthesis was apparently first tried in the time domain by Stapleton and Bass at Purdue University [19]. The ``principal components'' corresponding to a set of sounds to be synthesized are ``most important waveshapes,'' which can be mixed to provide an approximation to all sounds in the desired set. Principal components are the eigenvectors corresponding to the largest eigenvalues of the covariance matrix formed as a sum of outer products of the waveforms in the desired set. Stapleton and Bass computed an optimal set of single periods for approximating a larger set of periodic musical tones via linear combinations. This would be a valuable complement to vector synthesis since it can provide smooth vectors between a variety of natural sounds. The frequency-domain form was laid out in [12] in the context of steady-state tone discrimination based on changes in harmonic amplitudes. In this domain, the principal components are fundamental spectral shapes that are mixed together to produce various spectra. This provides analysis support for spectral interpolation synthesis techniques [4].

Additive synthesis historically models a spectrum as a set of discrete ``lines'' corresponding to sinusoids. The first analysis-driven additive synthesis for music appears to be Jean-Claude Risset's analysis and resynthesis of trumpet tones using Music V in 1964 [13]. He also appears to have carried out the first piecewise-linear reduction of the harmonic amplitude envelopes, a technique that has become standard in additive synthesis based on oscillator banks. The phase vocoder, developed by Flanagan at Bell Laboratories and adapted to music applications by Andy Moorer and others, has provided analysis support for additive synthesis for many years. The PARSHL program, written in the summer of 1984 at CCRMA, extended the phase vocoder to inharmonic partials, motivated initially by the piano. Xavier Serra, for his CCRMA Ph.D. thesis research, added filtered noise to the inharmonic sinusoidal model [17]. Macaulay and Quatieri at MIT Lincoln Labs independently developed a variant of the tracking phase vocoder for speech coding applications, and since have extended additive synthesis to a wider variety of audio signal processing applications. Inverse-FFT additive synthesis is implemented by writing any desired spectrum into an array and using the FFT algorithm to synthesize each frame of the time waveform (Chamberlin 1980); it undoubtedly has a big future in spectral-modeling synthesis since it is so general. The only tricky part is writing the spectrum for each frame in such a way that the frames splice together noiselessly in the time domain. The frame-splicing problem is avoided when using a bank of sinusoidal oscillators because, for steady-state tones, the phase can be allowed to run free with only the amplitude and frequency controls changing over time; similarly, the frame-splicing problem is avoided when using a noise generator passing through a time-varying filter, as in the Serra sines+noise model.

Linear Predictive Coding (LPC) has been used successfully for music synthesis by Andy Moorer, Ken Steiglitz, Paul Lansky, and earlier (at lower sampling rates) by speech researchers at Bell Telephone Laboratories. It is listed as a spectral modeling technique because there is evidence that the reason for the success of LPC in sound synthesis has more to do with the fact that the upper spectral envelope is estimated by the LPC algorithm than the fact that it has an interpretation as an estimator for the parameters of an all-pole model for the vocal tract. If this is so, direct spectral modeling should be able to do anything LPC can do, and more, and with greater flexibility. LPC has proven valuable for estimating loop-filter coefficients in waveguide models of strings and instrument bodies, so it could also be entered as a tool for sampling loop-filters in the ``Physical Model'' column. As a synthesis technique, it has the same transient-smearing problem that spectral modeling based on the short-time Fourier transform has. LPC can be viewed as one of many possible nonlinear smoothings of the short-time power spectrum, with good audio properties.

The Chant vocal synthesis technique [2] is listed a spectral modeling technique because it is a variation on formant synthesis. The Klatt speech synthesizer is another example. VOSIM is similar in concept, but trades sound quality for lower computational cost. Chant uses five exponentially decaying sinusoids tuned to the formant frequencies, prewindowed and repeated (overlapped) at the pitch frequency. Developing good Chant voices begins with a sung-vowel spectrum. The formants are measured, and Chant parameters are set to provide good approximations to these formants. Thus, the object of Chant is to model the spectrum as a regular sequence of harmonics multiplied by a formant envelope. LPC and subtractive synthesis also take this point of view, except that the excitation can be white noise rather than a pulse train (i.e., any flat ``excitation'' spectrum will do). In more recent years, Chant has been extended to support noise-modulated harmonics--especially useful in the higher frequency regions. The problem is that real voices are not perfectly periodic, particularly when glottal closure is not complete, and higher-frequency harmonics look more like narrowband noise than spectral lines. Thus, a good spectral model should include provision for spectral lines that are somewhat ``fuzzy.'' There are many ways to accomplish this ``air brush'' effect on the spectrum. Bill Schottstaedt, many years ago, added a little noise to the output of a modulating FM oscillator to achieve this effect on a formant group. Additive synthesis based on oscillators can accept a noise input in the same way, or any low-frequency amplitude- or phase-modulation can be used. Inverse-FFT synthesizers can simply write a broader ``hill'' into the spectrum instead of a discrete line (as in the sampled window transform); the phase along the hill controls its shape and spread in the time domain. In the LPC world, it has been achieved, in effect, by multipulse excitation--that is, the ``buzzy'' impulse train is replaced by a small ``tuft'' of impulses, once per period. Multipulse LPC sounds more natural than single-pulse LPC. The Karplus-Strong algorithm is listed as an abstract algorithm because it was conceived as a wavetable technique with a means of modifying the table each time through. It was later recognized to be a special case of physical models for strings developed by McIntyre and Woodhouse, which led to its extensions for musical use.

What the Karplus-Strong algorithm showed, to everyone's surprise, was that a physical model for a real vibrating string could be simplified to a multiply-free, two-point average with musically useful results. Waveguide synthesis is a set of extensions in the direction of accurate physical modeling while maintaining the computational simplicity reminiscent of the Karplus-Strong algorithm. It most efficiently models one-dimensional waveguides, such as strings and bores, yet it can be coupled in a rigorous way to the more general physical models in Cordis-Anima and Mosaic [3,18,1].